Installation instructions

| These pages are intended for customers who want to self-host the Timefold Platform. Please make sure you understand the impact of self-hosting the Timefold Platform and that you have the correct license file in order to proceed. Contact us for details. |

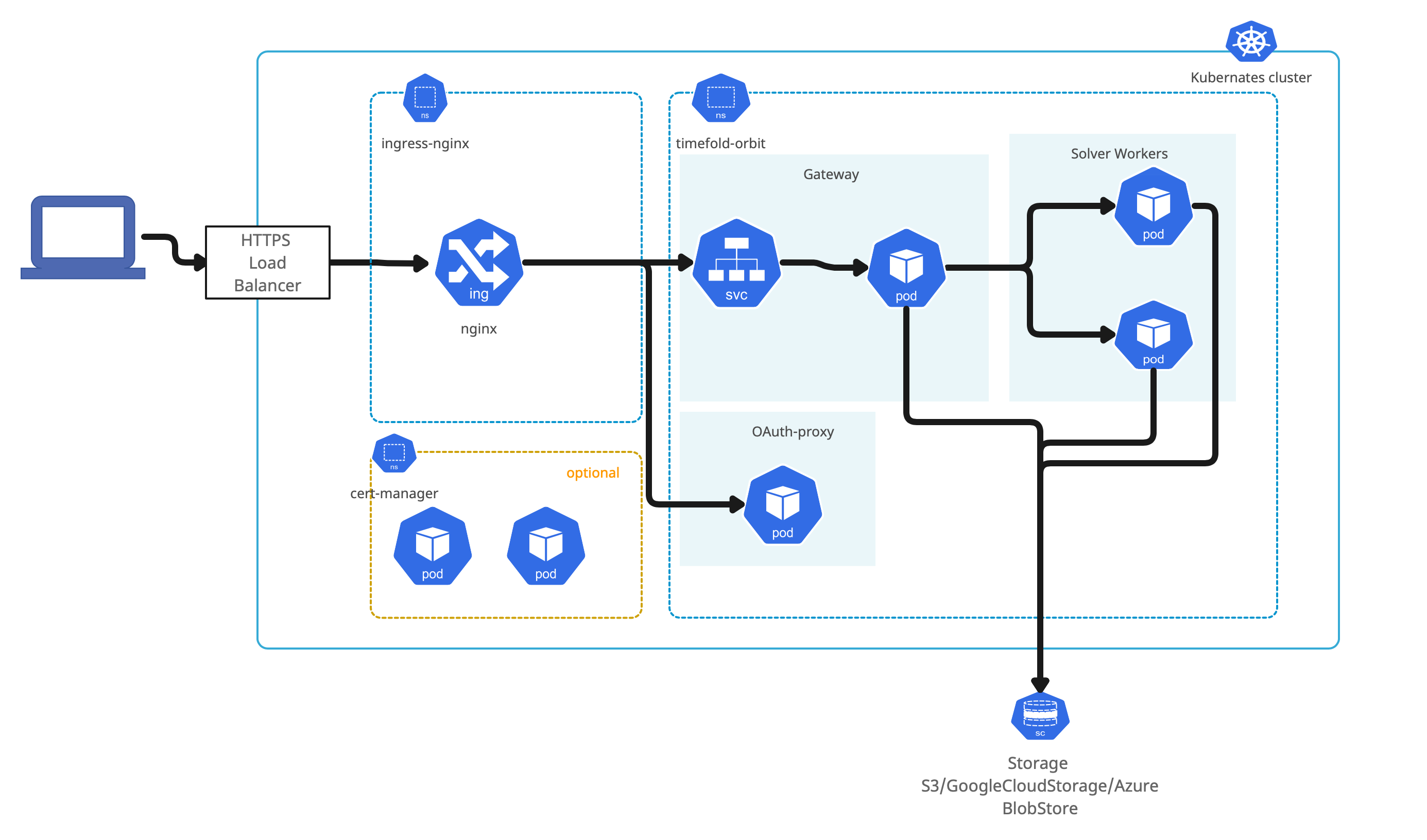

Kubernetes is the target environment for the platform. The platform takes advantage of the dynamic nature of the cluster, and its components are not specific to any Kubernetes distribution.

Timefold Platform has been certified on the following Kubernetes-based offerings:

- Amazon Elastic Kubernetes Service (EKS)
- Google Kubernetes Engine (GKE)
- Azure Kubernetes Service (AKS)
- Red Hat OpenShift

| Timefold Platform is expected to work on any Kubernetes service, either in a public cloud or on premises. |

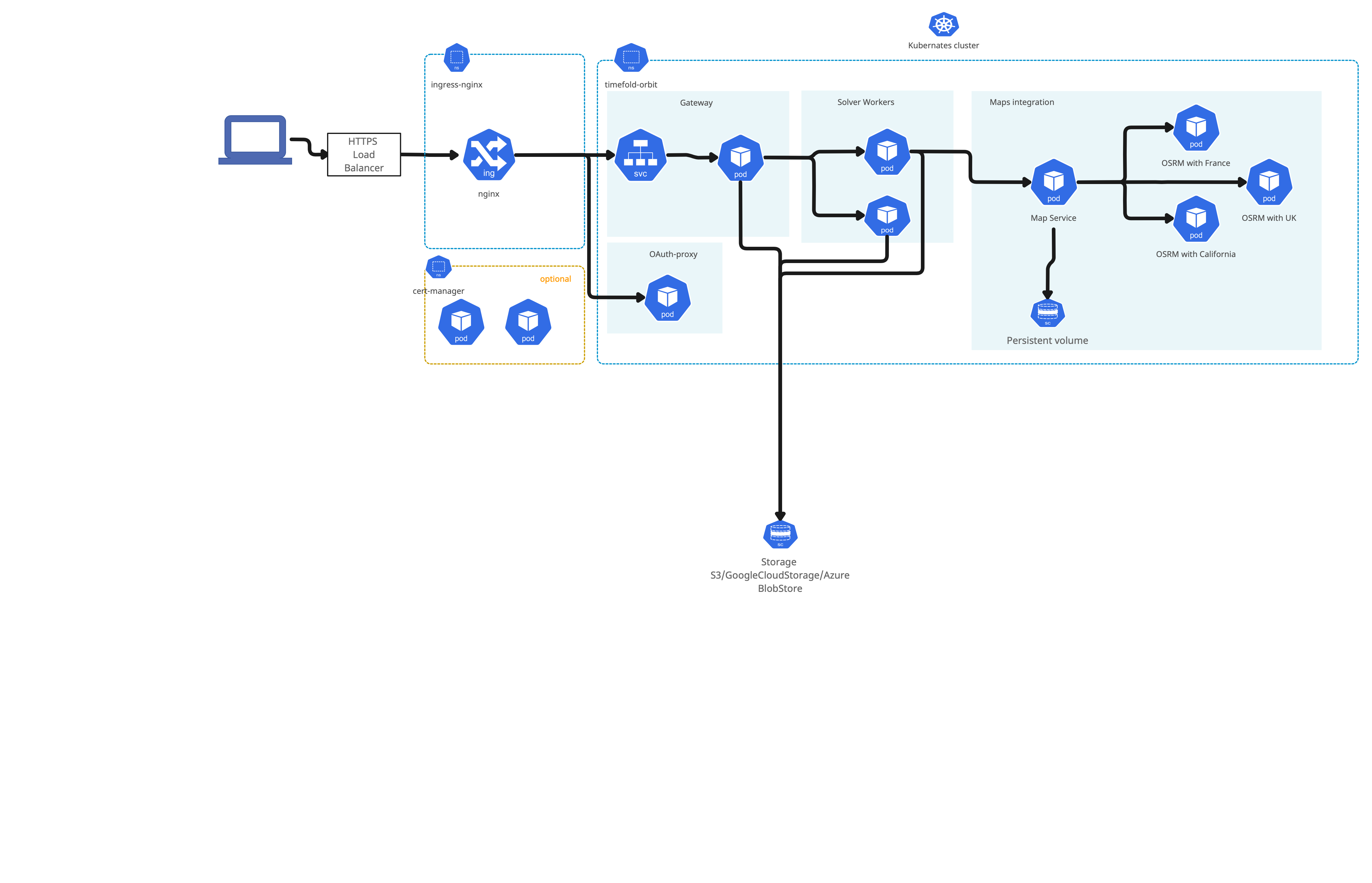

When Timefold Platform is deployed with maps integration, the deployment architecture is as follows:

Before you begin

You need to fulfill several prerequisites before proceeding with the installation:

- Kubernetes cluster
- DNS name with TLS certificate for Timefold Platform
- OpenID Connect application
- Remote data store
- Required tools installed

Kubernetes

The Kubernetes cluster can be hosted by one of the certified cloud providers (see above) or installed on premises.

Amazon EKS Kubernetes cluster installation

Follow these steps to configure a basic AWS EKS setup with a running Kubernetes cluster in AWS.

Azure Kubernetes cluster installation

Follow these steps to configure a basic Azure AKS setup with a running Kubernetes cluster in Azure.

Google Kubernetes cluster installation

Follow these steps to configure a basic GKE setup with a running Kubernetes cluster in Google Cloud Platform.

| Currently, the Autopilot version of Google Kubernetes Engine cannot be used with cert manager. When using Autopilot, you need to provide the TLS certificate manually. |

Your actions

- Create a Kubernetes cluster

DNS name and certificates

Timefold Platform is accessible via a web interface, which requires a dedicated DNS name and a TLS certificate.

The DNS name should be dedicated to Timefold Platform, e.g. timefold.organization.com.

The TLS certificate can be either:

- provided manually as a pair of
  - certificate
  - private key used to sign the certificate
- automatically managed throughout its life cycle by using cert-manager and the Let’s Encrypt Certificate Authority

Your actions

- Create a DNS name within your DNS provider
- If not using cert manager, create a certificate and private key

OpenID Connect configuration

Security of Timefold Platform is based on OpenID Connect. This allows integration with any OpenID Connect provider, such as Google, Azure, Okta, and others.

Installation requires the following information from the OpenID Connect provider configuration:

- client id (aka application id)
- client secret

In addition, the following information is required. It usually comes from the OpenID Connect configuration endpoint (.well-known/openid-configuration):

- issuer url - represented as issuer in the OpenID Connect configuration
- certificates url - represented as jwks_uri in the OpenID Connect configuration

| OIDC configuration endpoint examples: |
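As a minimal sketch of how the discovery endpoint relates to the issuer, the helper below builds the discovery URL from an issuer URL. The function name `discovery_url` is hypothetical and not part of Timefold Platform, and the Google issuer is used only as an example value.

```shell
# Hypothetical helper: derive the OIDC discovery URL from an issuer URL.
# A single trailing slash on the issuer is dropped before appending the path.
discovery_url() {
  issuer="${1%/}"
  printf '%s/.well-known/openid-configuration\n' "$issuer"
}

# Example issuer only; replace it with the issuer of your own provider.
discovery_url "https://accounts.google.com"
```

Fetching that URL (for example with `curl -s`) returns a JSON document whose `issuer` and `jwks_uri` fields hold the two values described above.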

Create an application in the selected OpenID Connect provider. As a reference, look at the Google and Azure client application setup.

Your actions

- Create a client application in your OpenID Connect provider
- Make a note of
  - client id
  - client secret

The OIDC configuration must be valid because the platform initiates a connection to the OIDC provider at startup; without it, Timefold Platform won’t work. If you don’t have a proper OIDC configuration, disable OIDC via the values file during Platform installation.

    oauth:
      enabled: false

Remote data store

Timefold Platform requires a remote data store to persist data sets during execution. The following data stores are supported:

- Amazon S3
- Google Cloud Storage
- Azure BlobStore

| Timefold Platform manages the data store by creating and removing both objects and their containers/buckets, so access must be granted for these operations. |

Your actions

- Create a data store in one of the supported options
- Obtain an access key to the created data store
  - service account key for Google Cloud Storage
  - connection string for Azure BlobStore
  - access key and access secret for Amazon S3

Installation

Installation of Timefold Platform consists of three parts:

- Infrastructure installation
- Security configuration and installation
- Timefold Platform deployment

Infrastructure installation

| When using Red Hat OpenShift this step can be omitted. |

- Ingress

The core infrastructure component is an ingress that is based on Nginx and acts as the entry point from outside the Kubernetes cluster. Installation is based on the official documentation.

If the Kubernetes cluster already has an Nginx ingress, this step can be omitted. The easiest way to install the Nginx ingress is via Helm. Issue the following command to install the ingress in a dedicated ingress-nginx namespace:

    helm upgrade --install ingress-nginx ingress-nginx \
      --repo https://kubernetes.github.io/ingress-nginx \
      --namespace ingress-nginx --create-namespace \
      --set controller.allowSnippetAnnotations=true

The above command is the most basic configuration of the ingress. It is recommended to consult the official documentation when installing into a given Kubernetes cluster, especially when running on a cloud provider’s infrastructure.

- Assigning the ingress external IP to the dedicated DNS name

Regardless of whether the ingress was just installed or was already present, the external IP of the ingress needs to be assigned to the DNS name for Timefold Platform.

Issue the following command to obtain the external IP of the ingress:

    kubectl get service -n ingress-nginx

The result of the command should be similar to the following:

    NAME                                 TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)                      AGE
    ingress-nginx-controller             LoadBalancer   10.0.79.218   20.31.236.247   80:30723/TCP,443:32612/TCP   5d20h
    ingress-nginx-controller-admission   ClusterIP      10.0.214.62   <none>          443/TCP                      5d20h

Make a note of the external IP of the ingress-nginx-controller. This IP needs to be added as a DNS record (A or CNAME) within your DNS provider.

| Verify that the host name is properly linked to the ingress external IP and that the ingress is responding before proceeding with further installation steps. |

| At this point, when you access the external IP it should return 404. |

Security configuration and installation

Security configuration covers the TLS certificate that is used to secure traffic coming into the platform.

The TLS certificate can be provided manually or fully managed by cert manager.

| If the certificate is provided manually, the administrator of the platform is responsible for renewing it. |

Provide certificate and private key manually

If the certificate and private key are provided manually, they need to be set as base64 encoded content in the values file (details about the values file are described in the Timefold Platform deployment section).

In addition, cert manager needs to be disabled (ingress.tls.certManager.enabled set to false):

    ingress:
      tls:
        certManager:
          enabled: false
        cert: |
          -----BEGIN CERTIFICATE-----
          MIIFAjCCA+qgAwIBAgISA47J5bfwCEBxdp/Npea0B/isMA0GCSqGSIb3DQEBCwUA
          ....
          -----END CERTIFICATE-----
        key: |
          -----BEGIN RSA PRIVATE KEY-----
          MIIEowIBAAKCAQEAvMx3Yui4OovRQeMnqVHaxmaDSD+hFezqq/mfz2xI6L0dlLfO
          ....
          -----END RSA PRIVATE KEY-----

Cert Manager

| If you provide certificate and private key this step can be omitted. |

Cert manager is responsible for providing trusted certificates for the ingress. It takes care of the complete life cycle management of the TLS certificate for Timefold Platform. By default, when cert manager is enabled, Timefold Platform uses the Let’s Encrypt CA to automatically request and provision a TLS certificate for the DNS name.

Installation is based on the official documentation.

| If the Kubernetes cluster already has cert manager, this step can be omitted. |

The easiest way to install cert-manager is via Helm. Issue the following commands to install it in a dedicated cert-manager namespace:

    helm repo add jetstack https://charts.jetstack.io
    helm install cert-manager jetstack/cert-manager \
      --namespace cert-manager \
      --version v1.13.2 \
      --create-namespace \
      --set installCRDs=true

At this point, cert-manager should be installed. When running the following command

    kubectl get service --namespace cert-manager cert-manager

the result should be similar to the following:

    NAME           TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
    cert-manager   ClusterIP   10.0.254.7   <none>        9402/TCP   105s

Once cert manager is installed, the deployment of Timefold Platform creates a Let’s Encrypt based issuer automatically. If there is a need to use another issuer, follow the cert manager guide on creating an issuer.

End-to-end encryption

Timefold Platform has a number of components that interact with each other over the network. By default, this traffic is not secured (HTTP), but end-to-end encryption (HTTPS) can be turned on.

When using cert manager, the only required configuration is to enable it in the values file:

    ingress:
      tls:
        e2e: true

All required certificates and configuration are provisioned automatically, including regular rotation of certificates. Certificates are set to expire after 90 days.

If cert manager is not used, the certificates must be provided manually via Kubernetes secrets with the following names, inside the same namespace that Timefold Platform is deployed to:

- timefold-platform-components-tls
- timefold-platform-workers-tls

timefold-platform-components-tls is required to have a wildcard certificate for *.namespace.svc, where namespace should be replaced with the actual namespace where Timefold Platform is deployed.

timefold-platform-workers-tls is required to have a wildcard certificate for *.namespace.pod, where namespace should be replaced with the actual namespace where Timefold Platform is deployed.

For example, when Timefold Platform is deployed to the planning-platform namespace, the certificates should be for the DNS names *.planning-platform.svc and *.planning-platform.pod respectively.

Both of these secrets should have the following keys:

    Data
    ====
    tls.crt:
    tls.key:
    ca.crt:

Once the certificates and secrets are created, end-to-end TLS can be enabled for the platform deployment.

Prepare Kubernetes cluster

A Kubernetes cluster runs workloads on worker nodes. These worker nodes are machines attached to the cluster that have certain characteristics, such as CPU type and architecture, available memory, and so on. Timefold Platform workloads are compute intensive and run best on compute optimized nodes.

The type of nodes depends heavily on where the Kubernetes cluster is running and what type of hardware is available.

By default, Timefold Platform runs on any node available in the cluster, so no additional configuration is required. However, it is recommended to dedicate compute optimized nodes in the cluster to running solver workloads.

List all available nodes in your cluster with the following command:

    kubectl get nodes

Mark selected worker nodes as solver workers

Use the following commands to mark selected nodes as dedicated to running solver workloads:

    kubectl taint nodes NAME_OF_THE_NODE ai.timefold/solver-worker=true:NoSchedule
    kubectl label nodes NAME_OF_THE_NODE ai.timefold/solver-worker=true

| The commands should be executed on every node that should be dedicated to running solver workloads. |

After marking nodes as solver workers, only solving pods will be able to run on these nodes.

| Ensure that some nodes remain available that are not dedicated to solver workloads, so that other types of workloads can run. |
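Because the taint and label commands have to be repeated for every dedicated node, a small loop can help. The sketch below only prints the commands for review instead of running them; the function name `solver_worker_commands` and the node names `node-a` and `node-b` are hypothetical.

```shell
# Hypothetical helper: print the taint and label commands for each node name
# passed as an argument, so they can be reviewed before being executed.
solver_worker_commands() {
  for node in "$@"; do
    printf 'kubectl taint nodes %s ai.timefold/solver-worker=true:NoSchedule\n' "$node"
    printf 'kubectl label nodes %s ai.timefold/solver-worker=true\n' "$node"
  done
}

# Example node names only; take the real ones from "kubectl get nodes".
solver_worker_commands node-a node-b
```

Once the printed commands look right, they can be run one by one or piped into a shell.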

Dedicate selected worker nodes to given model

If Timefold Platform is configured with multiple models, selected nodes can be dedicated to given models by model id.

Use the following commands to mark selected nodes as dedicated to running the employee-scheduling model only:

    kubectl taint nodes NAME_OF_THE_NODE ai.timefold/model=employee-scheduling:NoSchedule
    kubectl label nodes NAME_OF_THE_NODE ai.timefold/model=employee-scheduling

| The commands should be executed on every node that should be dedicated to running solver workloads. |

Dedicate selected worker nodes to given tenant

When Timefold Platform runs in a multi-tenant setup, selected nodes can also be dedicated to a given tenant.

Use the following commands to mark selected nodes as dedicated to running the 668798f6-2026-4cce-9098-e212730b060e tenant (given as id) only:

    kubectl taint nodes NAME_OF_THE_NODE ai.timefold/tenant=668798f6-2026-4cce-9098-e212730b060e:NoSchedule
    kubectl label nodes NAME_OF_THE_NODE ai.timefold/tenant=668798f6-2026-4cce-9098-e212730b060e

| The commands should be executed on every node that should be dedicated to running solver workloads. |

Dedicate selected worker nodes to given tenant group

When Timefold Platform runs in a multi-tenant setup, selected nodes can also be dedicated to a given tenant group. This is a more flexible extension of dedicating nodes to a single tenant, as it allows a node pool to be reused for many tenants, e.g. those of a single account.

Use the following commands to mark selected nodes as dedicated to running the my-company tenant group only:

    kubectl taint nodes NAME_OF_THE_NODE ai.timefold/tenant-group=my-company:NoSchedule
    kubectl label nodes NAME_OF_THE_NODE ai.timefold/tenant-group=my-company

| The commands should be executed on every node that should be dedicated to running solver workloads. |

Next, assign selected tenants to the my-company group. This can be done via the admin API at tenant creation or by updating an existing tenant.

Adding additional tolerations to solver worker pods

Timefold Platform dynamically creates pods to perform solving operations. In certain situations, Kubernetes nodes can be configured with additional taints, so the created pods require tolerations for these taints.

In such situations, you can set the tolerations during installation of the platform via the following configuration in the values file:

    models:
      tolerations: kubernetes.io/arch:Equal:arm64:NoSchedule

Tolerations must be specified in the following format: key:operator:value:effect

Example: kubernetes.io/arch:Equal:arm64:NoSchedule

Multiple tolerations can be specified by using | as separator. Example: kubernetes.io/arch:Equal:arm64:NoSchedule|kubernetes.io/test:Equal:test:NoSchedule
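When several tolerations are needed, the combined value can be assembled rather than typed by hand. This is a minimal sketch; the function name `join_tolerations` is hypothetical, and the two tolerations are the example values from above.

```shell
# Hypothetical helper: join tolerations (each in key:operator:value:effect
# form) into a single string separated by "|".
join_tolerations() {
  old_ifs="$IFS"
  IFS='|'
  # "$*" joins all arguments using the first character of IFS.
  printf '%s\n' "$*"
  IFS="$old_ifs"
}

join_tolerations \
  "kubernetes.io/arch:Equal:arm64:NoSchedule" \
  "kubernetes.io/test:Equal:test:NoSchedule"
```

The printed string is the value to place under models.tolerations in the values file.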

Timefold Platform deployment

Timefold support provides an access token for the container registry. This access token is used to log in to the Helm chart repository and to pull container images (via an image pull secret). It is registered in the Kubernetes cluster and used both during installation and by the running components.

| When the access token expires, you will receive a new token from Timefold support. When this happens, regenerate the token value and replace the environment variable by following the steps below. |

Generate token value to access container registry

Use the following command to create a base64 encoded string that acts as the container registry token used in the installation of Timefold Platform:

    kubectl create secret docker-registry timefold-ghcr --docker-server=ghcr.io --docker-username=sa --docker-password={YOUR_TIMEFOLD_TOKEN} --dry-run=client --output="jsonpath={.data.\.dockerconfigjson}"

Replace YOUR_TIMEFOLD_TOKEN with the token received from Timefold support.

Once the token value is created, set it as the environment variable TOKEN:

    export TOKEN=VALUE_FROM_PREVIOUS_STEP

The Timefold Platform deployment differs in terms of configuration options depending on the remote data store selected.

Amazon S3 based installation

- Save the following template as orbit-values.yaml

#####

##### Installation values for Amazon Web Services (EKS) based environment

#####

# number of active replicas of the main component of the platform

#replicaCount: 1

license: YOUR_ORBIT_LICENSE

ingress:

host: YOUR_HOST_NAME

tls:

# select one of the below options

# 1) in case cert manager is not available or cannot be used, provide certificate and private key

#cert:

#key:

# 2) if cert manager is installed and lets encrypt can be used

certManager:

enabled: true

issuer: "letsencrypt-prod"

acmeEmail: YOUR_ADMINISTRATOR_EMAIL_ADDRESS

oauth:

certs: YOUR_OIDC_PROVIDER_CERTS_URL

issuer: YOUR_OIDC_PROVIDER_ISSUER_URL

emailDomain: YOUR_ORG_EMAIL_DOMAIN

clientId: YOUR_CLIENT_ID

# Mode in which auth operates - expected values: idToken or accessToken

# mode: idToken

# generated cookie secret with following command

# dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_'; echo

cookieSecret: YOUR_COOKIE_SECRET

secrets:

data:

awsAccessKey: YOUR_AWS_ACCESS_KEY

awsAccessSecret: YOUR_AWS_ACCESS_SECRET

# administrators of the platform

admins: |

# [email protected]

storage:

remote:

# specify custom name for configuration bucket, can be company name with timefold-configuration suffix or domain name

name: YOUR_CUSTOM_NAME_FOR_CONFIG_BUCKET

# specify retention of data in the platform expressed in days, data will be automatically removed

#expiration: 7

type: s3

#s3:

# region: us-east-1

# Add a prefix to all created buckets (must be smaller than 28 characters)

#bucketPrefix:

#models:

# maximum amount of time solver will spend before termination

# terminationSpentLimit: PT30M

# maximum allowed limit for user-supplied termination spentLimit. Safety net to prevent long-running jobs

# terminationMaximumSpentLimit: PT60M

# if the score has not improved during this period, terminate the solver

# terminationUnimprovedSpentLimit: PT5M

# maximum allowed limit for user-supplied termination unimprovedSpentLimit. Safety net to prevent long-running jobs

# terminationMaximumUnimprovedSpentLimit: PT5M

# duration to keep the runtime after solving has finished

# runtimeTimeToLive: PT1M

# duration to keep the runtime without solving since last request

# idleRuntimeTimeToLive: PT30M

# IMPORTANT: when setting resources, all must be set

# resources:

# limits:

# max CPU allowed for the platform to be used

# cpu: 1

# max memory allowed for the platform to be used

# memory: 512Mi

# requests:

# guaranteed CPU for the platform to be used

# cpu: 1

# guaranteed memory for the platform to be used

# memory: 512Mi

#maps:

# enabled: true

# number of active replicas of the maps service - when set to more than 1, maps.scalable property must be set to true

# replicaCount: 1

# cache:

# TTL: P7D

# cleaningInterval: PT1H

# persistentVolume:

# storageClassName: "gp2"

# accessMode: "ReadWriteOnce"

# size: "8Gi"

# osrm:

# options: "--max-table-size 10000"

# maxDistanceFromRoad: 10000

# locations:

# - region: us-southern-california

# maxLocationsInRequest: 10000

# transportType: car

# - region: us-georgia

# maxLocationsInRequest: 10000

# externalLocationsUrl:

# customModel: "http://localhost:5000"

# retry:

# maxDurationMinutes: 60

# autoscaling:

# enabled: true

# minReplicas: 1

# maxReplicas: 2

# cpuAverageUtilization: 110

# externalProvider: "http://localhost:5000"

#insights:

# number of active replicas of the insights service

# replicaCount: 1

#solver:

# maxThreadCount: 8 # Set the maximum thread count for each job in the platform

# Set notifications timeout

#notifications:

# webhook:

# readTimeout: PT5S # Default is 5 seconds

# connectTimeout: PT5S # Default is 5 seconds

The above file serves as a template for an S3 based deployment of Timefold Platform.

Replace all parameters that start with YOUR_ with the corresponding values:

- YOUR_HOST_NAME - DNS name dedicated to Timefold Platform
- YOUR_OIDC_PROVIDER_CERTS_URL - OIDC certificates URL from the OIDC provider
- YOUR_OIDC_PROVIDER_ISSUER_URL - OIDC issuer URL from the OIDC provider; it is also used as the prefix of the OIDC discovery endpoint
- YOUR_ORG_EMAIL_DOMAIN - domain of the email addresses that are allowed to access the platform; remove this parameter or set it to * to allow any email domain
- YOUR_CLIENT_ID - application client id from the OIDC provider
- YOUR_COOKIE_SECRET - generated secret used to encrypt cookies; see the command next to the property in the template
- YOUR_CUSTOM_NAME_FOR_CONFIG_BUCKET - unique name for the bucket that configuration should be stored in. S3 bucket names must be globally unique, so ideally use the company name or domain name as the bucket name.
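The cookie secret can be generated with the pipeline given in the template comment. The sketch below runs that same pipeline and stores the result in a shell variable; the variable name `COOKIE_SECRET` is an assumption made for illustration.

```shell
# Read 32 random bytes, base64 encode them, strip the newline and map the
# characters "+" and "/" to "-" and "_" to get a URL-safe value.
COOKIE_SECRET="$(dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_')"

# Print the value so it can be copied into the values file.
echo "$COOKIE_SECRET"
```

The printed value replaces YOUR_COOKIE_SECRET in the template. Since 32 bytes are encoded, the result is 44 characters long, including the trailing padding character.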

| OIDC can be used in two modes: idToken or accessToken. By default, idToken is used. It is recommended when there is an authorization proxy in front of the APIs, because it improves performance: the ID token is always a JWT with all information included. If Timefold Platform is used directly, it is recommended to use accessToken mode, which lets the API retrieve user info behind the token via the OIDC UserInfo endpoint as part of the verification process. |

when using cert manager:

- YOUR_ADMINISTRATOR_EMAIL_ADDRESS - email address or functional mail box to be notified about certificate issues managed by cert manager

when not using cert manager:

- set ingress.tls.certManager.enabled to false

- remove other settings about cert manager (under ingress.tls.certManager)

- uncomment and set values for ingress.tls.cert and ingress.tls.key

Optionally, platform administrators can be set by listing the users’ email addresses in the admins property.

Platform components can be deployed in high availability mode (multiple replicas of the service). The number of replicas is controlled by the following properties:

    # number of active replicas of the main component of the platform
    replicaCount: 1
    # number of active replicas of the insights service
    insights:
      replicaCount: 1
    # number of active replicas of the maps service - when set to more than 1, maps.scalable property must be set to true
    maps:
      replicaCount: 1

This file can be safely stored as it does not contain any sensitive information. Sensitive information is provided as part of the installation command from environment variables.

Log in to the Timefold Helm chart repository to gain access to the Timefold Platform chart.

Use user as the username and the token provided by Timefold support as the password.

    helm registry login ghcr.io/timefoldai

The installation command requires the following environment variables to be set:

- NAMESPACE - Kubernetes namespace where the platform should be installed
- OAUTH_CLIENT_SECRET - client secret of the application used for this environment
- TOKEN - GitHub access token to the container registry provided by Timefold support
- LICENSE - Timefold Platform license string provided by Timefold support
- AWS_ACCESS_KEY - AWS access key
- AWS_ACCESS_SECRET - AWS access secret
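Before running the installation command, it can be useful to confirm that none of the variables listed above is empty. The following is a minimal sketch; the function name `missing_vars` is hypothetical and not part of any Timefold tooling.

```shell
# Hypothetical pre-flight check: print the name of every variable passed as
# an argument that is unset or empty in the current shell.
missing_vars() {
  for name in "$@"; do
    # Indirect lookup of the variable called $name, defaulting to empty.
    eval "value=\${$name:-}"
    [ -n "$value" ] || printf '%s\n' "$name"
  done
}

missing_vars NAMESPACE OAUTH_CLIENT_SECRET TOKEN LICENSE AWS_ACCESS_KEY AWS_ACCESS_SECRET
```

If the call prints nothing, all listed variables are set. Any printed name still needs a value before the installation command is issued.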

Once successfully logged in and with the environment variables set, issue the installation command via Helm:

    helm upgrade --install --atomic --namespace $NAMESPACE timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \
      -f orbit-values.yaml \
      --create-namespace \
      --set namespace=$NAMESPACE \
      --set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \
      --set image.registry.auth="$TOKEN" \
      --set license="$LICENSE" \
      --set secrets.data.awsAccessKey="$AWS_ACCESS_KEY" \
      --set secrets.data.awsAccessSecret="$AWS_ACCESS_SECRET"

Timefold Platform also supports authentication to AWS services (S3) via AWS IAM Roles for Service Accounts (IRSA), which avoids the need for an AWS access key and secret.

Configuration should be based on the official documentation on how to configure IRSA in EKS. The ARN of the role to use can be set in the values file:

    storage:
      remote:
        name: my-company-timefold-configuration
        type: s3
        s3:
          region: eu-central-1
          role: "arn:aws:iam::ACCOUNTID:role/ROLE_NAME"

When using IRSA, the helm install command does not need to set the access key and access secret:

    helm upgrade --install --atomic --namespace $NAMESPACE timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \
      -f orbit-values.yaml \
      --create-namespace \
      --set namespace=$NAMESPACE \
      --set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \
      --set image.registry.auth="$TOKEN" \
      --set license="$LICENSE"

| The above command installs (or upgrades to) the latest released version of Timefold Platform. To install a specific version, append --version A.B.C to the command, where A.B.C is the version number to be used. |

Once installation is completed, you can access the platform on the dedicated DNS name. Information on how to access it is displayed at the end of the installation.

Google Cloud Storage based installation

- Save the following template as orbit-values.yaml

#####

##### Installation values for Google Cloud Platform (GKE) based environment

#####

# number of active replicas of the main component of the platform

#replicaCount: 1

license: YOUR_ORBIT_LICENSE

ingress:

host: YOUR_HOST_NAME

tls:

# select one of the below options

# 1) in case cert manager is not available or cannot be used, provide certificate and private key

#cert:

#key:

# 2) if cert manager is installed and lets encrypt can be used

certManager:

enabled: true

issuer: "letsencrypt-prod"

acmeEmail: YOUR_ADMINISTRATOR_EMAIL_ADDRESS

oauth:

certs: YOUR_OIDC_PROVIDER_CERTS_URL

issuer: YOUR_OIDC_PROVIDER_ISSUER_URL

emailDomain: YOUR_ORG_EMAIL_DOMAIN

clientId: YOUR_CLIENT_ID

# Mode in which auth operates - expected values: idToken or accessToken

# mode: idToken

# generated cookie secret with following command

# dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_'; echo

cookieSecret: YOUR_COOKIE_SECRET

secrets:

stringData:

serviceAccountKey: YOUR_GOOGLE_STORAGE_SERVICE_ACCOUNT_KEY

projectId: YOUR_GOOGLE_CLOUD_PROJECT_ID

# administrators of the platform

admins: |

# [email protected]

storage:

remote:

# specify custom name for configuration bucket, can be google project id with timefold-configuration suffix or domain name

name: YOUR_CUSTOM_NAME_FOR_CONFIG_BUCKET

# specify retention of data in the platform expressed in days, data will be automatically removed

#expiration: 7

type: googlecloud

# Add a prefix to all created buckets (must be smaller than 28 characters)

#bucketPrefix:

#models:

# maximum amount of time solver will spend before termination

# terminationSpentLimit: PT30M

# maximum allowed limit for user-supplied termination spentLimit. Safety net to prevent long-running jobs

# terminationMaximumSpentLimit: PT60M

# if the score has not improved during this period, terminate the solver

# terminationUnimprovedSpentLimit: PT5M

# maximum allowed limit for user-supplied termination unimprovedSpentLimit. Safety net to prevent long-running jobs

# terminationMaximumUnimprovedSpentLimit: PT5M

# duration to keep the runtime after solving has finished

# runtimeTimeToLive: PT1M

# duration to keep the runtime without solving since last request

# idleRuntimeTimeToLive: PT30M

# IMPORTANT: when setting resources, all must be set

# resources:

# limits:

# max CPU allowed for the platform to be used

# cpu: 1

# max memory allowed for the platform to be used

# memory: 512Mi

# requests:

# guaranteed CPU for the platform to be used

# cpu: 1

# guaranteed memory for the platform to be used

# memory: 512Mi

#maps:

# enabled: true

# number of active replicas of the maps service - when set to more than 1, maps.scalable property must be set to true

# replicaCount: 1

# cache:

# TTL: P7D

# cleaningInterval: PT1H

# persistentVolume:

# storageClassName: "standard"

# accessMode: "ReadWriteOnce"

# size: "8Gi"

# osrm:

# options: "--max-table-size 10000"

# maxDistanceFromRoad: 10000

# locations:

# - region: us-southern-california

# maxLocationsInRequest: 10000

# transportType: car

# - region: us-georgia

# maxLocationsInRequest: 10000

# externalLocationsUrl:

# customModel: "http://localhost:5000"

# retry:

# maxDurationMinutes: 60

# autoscaling:

# enabled: true

# minReplicas: 1

# maxReplicas: 2

# cpuAverageUtilization: 110

# externalProvider: "http://localhost:5000"

#insights:

# number of active replicas of the insights service

# replicaCount: 1

#solver:

# maxThreadCount: 8 # Set the maximum thread count for each job in the platform

# Set notifications timeout

#notifications:

# webhook:

# readTimeout: PT5S # Default is 5 seconds

# connectTimeout: PT5S # Default is 5 seconds

The above file serves as a template for a Google Cloud Storage based deployment of Timefold Platform.

Replace all parameters that start with YOUR_ with the corresponding values:

- YOUR_HOST_NAME - DNS name dedicated to Timefold Platform
- YOUR_OIDC_PROVIDER_CERTS_URL - OIDC certificates URL from the OIDC provider
- YOUR_OIDC_PROVIDER_ISSUER_URL - OIDC issuer URL from the OIDC provider; it is also used as the prefix of the OIDC discovery endpoint
- YOUR_ORG_EMAIL_DOMAIN - domain of the email addresses that are allowed to access the platform; remove this parameter or set it to * to allow any email domain
- YOUR_CLIENT_ID - application client id from the OIDC provider
- YOUR_COOKIE_SECRET - generated secret used to encrypt cookies; see the command next to the property in the template
- YOUR_CUSTOM_NAME_FOR_CONFIG_BUCKET - unique name for the bucket that configuration should be stored in. Google Cloud Storage bucket names must be globally unique, so ideally use the company name or domain name as the bucket name.

| OIDC can be used in two modes: idToken or accessToken. By default, idToken is used. It is recommended when there is an authorization proxy in front of the APIs, because it improves performance: the ID token is always a JWT with all information included. If Timefold Platform is used directly, it is recommended to use accessToken mode, which lets the API retrieve user info behind the token via the OIDC UserInfo endpoint as part of the verification process. |

when using cert manager:

- YOUR_ADMINISTRATOR_EMAIL_ADDRESS - email address or functional mail box to be notified about certificate issues managed by cert manager

when not using cert manager:

- set ingress.tls.certManager.enabled to false

- remove other settings about cert manager (under ingress.tls.certManager)

- uncomment and set values for ingress.tls.cert and ingress.tls.key

Optionally, platform administrators can be set by listing the users’ email addresses in the admins property.

Platform components can be deployed in high availability mode (multiple replicas of the service). The number of replicas is controlled by the following properties:

    # number of active replicas of the main component of the platform
    replicaCount: 1
    # number of active replicas of the insights service
    insights:
      replicaCount: 1
    # number of active replicas of the maps service - when set to more than 1, maps.scalable property must be set to true
    maps:
      replicaCount: 1

This file can be safely stored as it does not contain any sensitive information. Sensitive information is provided as part of the installation command from environment variables.

Log in to the Timefold Helm chart repository to gain access to the Timefold Platform chart.

Use user as the username and the token provided by Timefold support as the password.

    helm registry login ghcr.io/timefoldai

The installation command requires the following environment variables to be set:

- NAMESPACE - Kubernetes namespace where the platform should be installed
- OAUTH_CLIENT_SECRET - client secret of the application used for this environment
- TOKEN - GitHub access token to the container registry provided by Timefold support
- LICENSE - Timefold Platform license string provided by Timefold support
- GCS_SERVICE_ACCOUNT - service account key for Google Cloud Storage
- GCP_PROJECT_ID - Google Cloud project id where the storage is created

Once successfully logged in with environment variables set, issue installation command via helm:

helm upgrade --install --atomic --namespace $NAMESPACE timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \

-f orbit-values.yaml \

--create-namespace \

--set namespace=$NAMESPACE \

--set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \

--set image.registry.auth="$TOKEN" \

--set license="$LICENSE" \

--set secrets.stringData.serviceAccountKey="$GCS_SERVICE_ACCOUNT" \

--set secrets.stringData.projectId="$GCP_PROJECT_ID"

Timefold Platform also supports authentication to GCP services (Google Cloud Storage) via Google Cloud Workload Identity Federation, which avoids the use of long-lived service account keys.

Configure it according to the official documentation on Workload Identity in GKE. The service account to use can be set in the values file.

storage:

remote:

name: my-company-timefold-configuration

type: gcs

gcs:

region: europe-west-1

serviceAccount: [GSA_NAME]@[PROJECT_ID].iam.gserviceaccount.com

When using Workload Identity, the helm install command does not need to set the service account key:

helm upgrade --install --atomic --namespace $NAMESPACE timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \

-f orbit-values.yaml \

--create-namespace \

--set namespace=$NAMESPACE \

--set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \

--set image.registry.auth="$TOKEN" \

--set license="$LICENSE" \

--set secrets.stringData.projectId="$GCP_PROJECT_ID"

| The above command installs (or upgrades to) the latest released version of the Timefold Platform. To install a specific version, append --version A.B.C to the command, where A.B.C is the version number to use. |

Once the installation is complete, you can access the platform at its dedicated DNS name.

Azure BlobStore based installation

-

Save the following template as orbit-values.yaml

#####

##### Installation values for Azure (AKS) based environment

#####

# number of active replicas of the main component of the platform

#replicaCount: 1

license: YOUR_ORBIT_LICENSE

ingress:

host: YOUR_HOST_NAME

tls:

# select one of the below options

# 1) in case cert manager is not available or cannot be used, provide certificate and private key

#cert:

#key:

# 2) if cert manager is installed and lets encrypt can be used

certManager:

enabled: true

issuer: "letsencrypt-prod"

acmeEmail: YOUR_ADMINISTRATOR_EMAIL_ADDRESS

oauth:

certs: YOUR_OIDC_PROVIDER_CERTS_URL

issuer: YOUR_OIDC_PROVIDER_ISSUER_URL

emailDomain: YOUR_ORG_EMAIL_DOMAIN

clientId: YOUR_CLIENT_ID

# Mode in which auth operates - expected values: idToken or accessToken

# mode: idToken

# generated cookie secret with following command

# dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_'; echo

cookieSecret: YOUR_COOKIE_SECRET

secrets:

data:

azureStoreConnectionString: YOUR_AZURE_BLOB_STORE_CONNECTION_STRING

# administrators of the platform

admins: |

# [email protected]

storage:

remote:

# specify custom name for configuration bucket, can be company name with timefold-configuration suffix or domain name

name: YOUR_CUSTOM_NAME_FOR_CONFIG_BUCKET

type: azure

# Add a prefix to all created buckets (must be smaller than 28 characters)

#bucketPrefix:

#models:

# maximum amount of time solver will spend before termination

# terminationSpentLimit: PT30M

# maximum allowed limit for user-supplied termination spentLimit. Safety net to prevent long-running jobs

# terminationMaximumSpentLimit: PT60M

# if the score has not improved during this period, terminate the solver

# terminationUnimprovedSpentLimit: PT5M

# maximum allowed limit for user-supplied termination unimprovedSpentLimit. Safety net to prevent long-running jobs

# terminationMaximumUnimprovedSpentLimit: PT5M

# duration to keep the runtime after solving has finished

# runtimeTimeToLive: PT1M

# duration to keep the runtime without solving since last request

# idleRuntimeTimeToLive: PT30M

# IMPORTANT: when setting resources, all must be set

# resources:

# limits:

# max CPU allowed for the platform to be used

# cpu: 1

# max memory allowed for the platform to be used

# memory: 512Mi

# requests:

# guaranteed CPU for the platform to be used

# cpu: 1

# guaranteed memory for the platform to be used

# memory: 512Mi

#maps:

# enabled: true

# number of active replicas of the maps service - when set to more than 1, maps.scalable property must be set to true

# replicaCount: 1

# cache:

# TTL: P7D

# cleaningInterval: PT1H

# persistentVolume:

# storageClassName: "Standard_LRS"

# accessMode: "ReadWriteOnce"

# size: "8Gi"

# osrm:

# options: "--max-table-size 10000"

# maxDistanceFromRoad: 10000

# locations:

# - region: us-southern-california

# maxLocationsInRequest: 10000

# transportType: car

# - region: us-georgia

# maxLocationsInRequest: 10000

# externalLocationsUrl:

# customModel: "http://localhost:5000"

# retry:

# maxDurationMinutes: 60

# autoscaling:

# enabled: true

# minReplicas: 1

# maxReplicas: 2

# cpuAverageUtilization: 110

# externalProvider: "http://localhost:5000"

#insights:

# number of active replicas of the insights service

# replicaCount: 1

#solver:

# maxThreadCount: 8 # Set the maximum thread count for each job in the platform

# Set notifications timeout

#notifications:

# webhook:

# readTimeout: PT5S # Default is 5 seconds

# connectTimeout: PT5S # Default is 5 seconds

The above file serves as a template for an Azure BlobStore based deployment of Timefold Platform.

Replace all parameters that start with YOUR_ with the corresponding values:

-

YOUR_HOST_NAME - DNS name dedicated to Timefold orbit

-

YOUR_OIDC_PROVIDER_CERTS_URL - OIDC certificates URL from the OIDC provider

-

YOUR_OIDC_PROVIDER_ISSUER_URL - OIDC issuer URL from the OIDC provider; it is also used as the prefix of the OIDC discovery endpoint

-

YOUR_ORG_EMAIL_DOMAIN - domain of the email addresses that are allowed to access the platform; remove this parameter or set it to * to allow any email domain

-

YOUR_CLIENT_ID - application client id from the OIDC provider

-

YOUR_COOKIE_SECRET - generated secret used to encrypt cookies; see the command next to the property in the template

-

YOUR_CUSTOM_NAME_FOR_CONFIG_BUCKET - unique name for the container within the storage account in which the configuration is stored. Azure container names do not have to be globally unique, but it is recommended to use the company name or domain name as the container name.
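The cookie secret is the only value in this list that you generate yourself. As a small sketch, the command from the template comment can be wrapped in a function and its output checked before it is pasted into the values file. The function names are hypothetical, and the check only verifies the shape that this particular command produces (32 random bytes encoded as 44 URL-safe base64 characters); it makes no claim about what the platform itself accepts.

```shell
# Same pipeline as in the template comment, wrapped in a function.
generate_cookie_secret() {
  dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_'
  echo
}

# 32 bytes always encode to 44 base64 characters (including one '=' of padding).
# After the tr step only letters, digits, '-', '_' and '=' may remain.
is_valid_cookie_secret() {
  [ "${#1}" -eq 44 ] && ! printf '%s' "$1" | grep -q '[^A-Za-z0-9_=-]'
}

secret="$(generate_cookie_secret)"
is_valid_cookie_secret "$secret" && echo "cookieSecret: $secret"
```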

| OIDC can be used in two modes: idToken or accessToken. idToken is the default and is recommended when an authorization proxy sits in front of the APIs, because an ID token is always a JWT that carries all required information, which improves performance. If the Timefold Platform is used directly, accessToken mode is recommended; the API then retrieves the user information behind the token from the OIDC UserInfo endpoint as part of the verification process. |

when using cert manager:

- YOUR_ADMINISTRATOR_EMAIL_ADDRESS - email address or functional mail box to be notified about certificate issues managed by cert manager

when not using cert manager:

- set ingress.tls.certManager.enabled to false

- remove other settings about cert manager (under ingress.tls.certManager)

- uncomment and set values for ingress.tls.cert and ingress.tls.key

Optionally, platform administrators can be configured by listing the users’ email addresses in the admins property.

Platform components can be deployed in high availability mode (multiple replicas of the service). The number of replicas is controlled by the following properties:

# number of active replicas of the main component of the platform

replicaCount: 1

# number of active replicas of the insights service

insights:

replicaCount: 1

# number of active replicas of the maps service - when set to more than 1, maps.scalable property must be set to true

maps:

replicaCount: 1

This file can be safely stored as it does not contain any sensitive information. Sensitive values are passed to the installation command through environment variables.

Log in to the Timefold Helm chart repository to gain access to the Timefold Platform chart.

Use user as the username and the token provided by Timefold support as the password.

helm registry login ghcr.io/timefoldai

The installation command requires the following environment variables to be set:

-

NAMESPACE - Kubernetes namespace where platform should be installed

-

OAUTH_CLIENT_SECRET - client secret of the application used for this environment

-

TOKEN - GitHub access token to container registry provided by Timefold support

-

LICENSE - Timefold Platform license string provided by Timefold support

-

AZ_CONNECTION_STRING - Azure BlobStore connection string

Once you are logged in and the environment variables are set, run the installation command with helm:

helm upgrade --install --atomic --namespace $NAMESPACE timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \

-f orbit-values.yaml \

--create-namespace \

--set namespace=$NAMESPACE \

--set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \

--set image.registry.auth="$TOKEN" \

--set license="$LICENSE" \

--set secrets.data.azureStoreConnectionString="$AZ_CONNECTION_STRING"

Timefold Platform also supports authentication to Azure services (Azure BlobStore) via Azure Workload Identity, which avoids the use of long-lived connection strings.

Configure it according to the official documentation on Workload Identity in AKS. The client id to use can be set in the values file.

storage:

remote:

name: my-company-timefold-configuration

type: azure

azure:

clientId: client id to use Azure Workload Identity

When using Workload Identity, the helm install command does not need to set the Azure connection string:

helm upgrade --install --atomic --namespace $NAMESPACE timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \

-f orbit-values.yaml \

--create-namespace \

--set namespace=$NAMESPACE \

--set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \

--set image.registry.auth="$TOKEN" \

--set license="$LICENSE"

| The above command installs (or upgrades to) the latest released version of the Timefold Platform. To install a specific version, append --version A.B.C to the command, where A.B.C is the version number to use. |

Once the installation is complete, you can access the platform at its dedicated DNS name.

Red Hat OpenShift based installation

| First follow selected (Amazon S3, Google Cloud Storage or Azure BlobStore) storage installation. |

-

Edit orbit-values.yaml

1.1 Disable the ingress component but configure the host according to your OpenShift networking setup. Ingress is disabled as OpenShift comes with another networking component that acts as ingress.

ingress:

enabled: false

host: YOUR_OPENSHIFT_HOST

1.2 Optional: Simplify OAuth configuration when full OAuth cannot be configured

oauth:

enabled: false

# certs:

# issuer:

1.3 Configure the pod security context to specify user and group IDs that the pods will run as

podSecurityContext:

runAsUser: 1007510000

fsGroup: 1007510000

osrmPodSecurityContext:

runAsUser: 1007510000

The complete values file will look like this:

#####

##### Installation values for orbit-dev.timefold.dev

##### Running in Azure

ingress:

enabled: false

host: YOUR_OPENSHIFT_HOST

oauth:

enabled: false

# certs:

# issuer:

podSecurityContext:

runAsUser: 1007510000

fsGroup: 1007510000

osrmPodSecurityContext:

runAsUser: 1007510000

secrets:

data:

awsAccessKey: YOUR_AWS_ACCESS_KEY

awsAccessSecret: YOUR_AWS_ACCESS_SECRET

# administrators of the platform

admins: |

# [email protected]

storage:

remote:

# specify custom name for configuration bucket, can be company name with timefold-configuration suffix or domain name

name: YOUR_CUSTOM_NAME_FOR_CONFIG_BUCKET

# specify retention of data in the platform expressed in days, data will be automatically removed

#expiration: 7

type: s3

#s3:

# region: us-east-1

# Add a prefix to all created buckets (must be smaller than 28 characters)

#bucketPrefix:

#models:

# maximum amount of time solver will spend before termination

# terminationSpentLimit: PT30M

# maximum allowed limit for user-supplied termination spentLimit. Safety net to prevent long-running jobs

# terminationMaximumSpentLimit: PT60M

# if the score has not improved during this period, terminate the solver

# terminationUnimprovedSpentLimit: PT5M

# maximum allowed limit for user-supplied termination unimprovedSpentLimit. Safety net to prevent long-running jobs

# terminationMaximumUnimprovedSpentLimit: PT5M

# duration to keep the runtime after solving has finished

# runtimeTimeToLive: PT1M

# duration to keep the runtime without solving since last request

# idleRuntimeTimeToLive: PT30M

# IMPORTANT: when setting resources, all must be set

# resources:

# limits:

# max CPU allowed for the platform to be used

# cpu: 1

# max memory allowed for the platform to be used

# memory: 512Mi

# requests:

# guaranteed CPU for the platform to be used

# cpu: 1

# guaranteed memory for the platform to be used

# memory: 512Mi

#maps:

# enabled: true

# number of active replicas of the maps service - when set to more than 1, maps.scalable property must be set to true

# replicaCount: 1

# cache:

# TTL: P7D

# cleaningInterval: PT1H

# persistentVolume:

# storageClassName: "gp3"

# accessMode: "ReadWriteOnce"

# size: "8Gi"

# osrm:

# options: "--max-table-size 10000"

# maxDistanceFromRoad: 10000

# locations:

# - region: us-southern-california

# maxLocationsInRequest: 10000

# - region: us-georgia

# maxLocationsInRequest: 10000

# transportType: car

# externalLocationsUrl:

# customModel: "http://localhost:5000"

# retry:

# maxDurationMinutes: 60

# autoscaling:

# enabled: true

# minReplicas: 1

# maxReplicas: 2

# cpuAverageUtilization: 110

# externalProvider: "http://localhost:5000"

#insights:

# number of active replicas of the insights service

# replicaCount: 1

#solver:

# maxThreadCount: 8 # Set the maximum thread count for each job in the platform

# Set notifications timeout

#notifications:

# webhook:

# readTimeout: PT5S # Default is 5 seconds

# connectTimeout: PT5S # Default is 5 seconds

After the additional changes to the orbit-values.yaml file have been completed, follow the installation steps in the selected storage section.

| The OpenShift Route created automatically at installation might not be configured according to your defined policies. It’s recommended to review the route and recreate it manually when needed. |

Deploy maps integration

The Timefold Platform provides integration with maps to provide more accurate travel time and distance calculation. This map component is deployed as part of the Timefold Platform but must be explicitly turned on and maps regions specified.

Check the maps service documentation to understand the capabilities of the maps component.

Enable maps in your orbit-values.yaml file with the following sections:

maps:

enabled: true

scalable: true

cache:

TTL: P7D

cleaningInterval: PT1H

persistentVolume: # Optional

accessMode: "ReadWriteOnce"

size: "8Gi"

#storageClassName: "standard" #Specific to each provider storage

osrm:

options: "--max-table-size 10000"

# maxDistanceFromRoad: 10000 # Maximum distance from road in meters before OSRM returns no route. Defaults to 1000.

locations:

- region: osrm-britain-and-ireland

# maxLocationsInRequest: 10000 # Maximum number of accepted locations for OSRM requests. Defaults to 10000.

# transportType: car # Optional, defaults to car.

# resources:

# requests:

# memory: "1000M" # Optional, defaults to the idle memory of the specific image.

# cpu: "1000m" # Optional, defaults to 1000m.

# limits:

# memory: "8000M" # Optional, defaults to empty.

# cpu: "5000m" # Optional, defaults to empty.

# externalLocationsUrl:

# customLocation1: "http://localhost:8080" # External OSRM instance for location; locations should not contain any spaces

# customLocation2: "http://localhost:8081"

# retry:

# maxDurationMinutes: 60 # Set timeout of retry requests to OSRM in minutes. Defaults to 60.

# autoscaling:

# enabled: true # Enables OSRM instance autoscaling. This works by scaling the map for each location independently. Defaults to false.

# minReplicas: 1 # Minimum number of OSRM instance replicas. Defaults to 1.

# maxReplicas: 2 # Maximum number of OSRM instance replicas. Defaults to 2.

# cpuAverageUtilization: 110 # Target average CPU utilization (in percent) used to scale the OSRM instance. It can be fine-tuned based on expected usage of OSRM maps. Defaults to 110.

Next, configure the map to be used for the model with the following configuration:

models:

mapService:

fieldServiceRouting: osrm-britain-and-ireland

The above configuration provisions the complete set of components needed to use a map as the source of distances and travel times for solving. Below is a detailed description of some of the configuration options used by the maps.

Persistent Volume

If persistentVolume is not set, the maps cache uses the local storage of each node. In that case, the cache is cleared when the pod restarts. The storageClassName can be set to match the storage offered by each provider (e.g. "standard" for GCP, "gp2" for AWS and "Standard_LRS" for Azure).

Autoscaling

If OSRM autoscaling is enabled, the Metrics Server must also be available in your cluster. On some deployments it’s installed by default. If it’s not already installed, you can install it with the following command (more information in the helm chart):

helm repo add bitnami https://charts.bitnami.com/bitnami &&

helm repo update &&

helm upgrade --install --atomic --timeout 120s metrics-server bitnami/metrics-server --namespace "metrics-server" --create-namespace

The OSRM autoscaling works by scaling the map for each location independently. It will aim for an average utilization of each instance of 70% of the memory.

External OSRM instances

If you want to use your own OSRM instance, you can configure the platform to access the instance for a specific location with the externalLocationsUrl key.

Note that the location name must not contain spaces and must not start with a dash or underscore.
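The naming rule can be checked before a location key is added under externalLocationsUrl. The sketch below is a hypothetical helper, not something shipped with the platform; rejecting an empty name is an added assumption.

```shell
# Hypothetical check mirroring the naming rule above.
# Rejects names that contain a space or start with '-' or '_'.
# Assumption: an empty name is rejected as well.
is_valid_location_name() {
  case "$1" in
    ''|-*|_*|*" "*) return 1 ;;
    *) return 0 ;;
  esac
}

for name in customLocation1 "custom location" -custom _custom; do
  if is_valid_location_name "$name"; then
    echo "ok:       '$name'"
  else
    echo "rejected: '$name'"
  fi
done
```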

Deploy OSRM maps to a separate node pool

When using the platform to deploy OSRM maps, it’s possible to configure them to be deployed to a specific node pool.

To do that, the configuration below can be set.

maps:

isolated: true

The maps will be deployed on the nodes with the label ai.timefold/maps-dedicated: true.

Maps officially supported by Timefold

| Map image containers are built by Timefold for a given region and provided to users via the Timefold container registry (ghcr.io/timefoldai). Please contact Timefold support if a given region is not yet available. |

Below table specifies what maps are currently supported and available for Timefold Platform.

Map instances hardware requirements

Some maps can have demanding hardware requirements. Because each map has a different sizing, it can be hard to define the resources needed for a map instance.

Memory Requirements

The memory of a map instance can be calculated by the sum of the memory of the map when idle (the memory it needs to start without doing any calculation) and the memory it uses to answer the requests at a given point in time.

$\text{MaxMemMap} = \text{MemMapIdle} + \max(\text{MemUsedForRequests})$

For the map memory when idle, the values are as follows:

| Region | Idle Memory (MB) |
|---|---|
| uk | 3460 |
| greater-london | 190 |
| britain-and-ireland | 5500 |
| us-georgia | 1245 |
| us-southern-california | 1290 |
| belgium | 500 |
| australia | 1512 |
| dach | 6125 |
| india | 11130 |
| gcc-states | 1455 |
| ontario | 700 |
| poland | 1875 |
| us-northeast | 4435 |
| netherlands | 800 |
| sweden | 1090 |
| us-south | 13631 |
| us-north-carolina | 1415 |
| italy-nord-ovest | 692 |
| us-midwest | 9300 |
| us-west | 6752 |
| us-hawaii | 85 |
| us-puerto-rico | 152 |

The memory used for requests varies according to the number of locations requested and the number of simultaneous requests.

The table below expresses the approximated memory used per request depending on the number of locations:

| Number of locations | Memory used (MB) |
|---|---|
| From 0 to 100 | 0 |
| From 100 to 200 | 10 |
| From 200 to 600 | 40 |
| From 600 to 1100 | 200 |
| From 1100 to 2000 | 500 |
| From 2000 to 4000 | 2000 |
| From 4000 to 6000 | 4300 |
| From 6000 to 8000 | 7600 |
| From 8000 to 10000 | 12000 |
| From 10000 to 12000 | 17000 |
| From 12000 to 14000 | 23000 |
| From 14000 to 16000 | 30000 |
| From 16000 to 18000 | 38000 |
| From 18000 to 20000 | 47000 |

Currently, our maps do not support requests with more than 20 000 locations. The maximum configured number of locations is set to 10 000 by default, but can be increased (up to 20 000) using the parameter maxLocationsInRequest when configuring each map.

If simultaneous requests are made to the maps instances and there is not enough memory to calculate them, they will be queued internally until there is memory available for them to be calculated or until they time out (the timeout can be set in the parameter maps.osrm.retry.maxDurationMinutes).
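The sizing rule above can be turned into a rough estimate. The sketch below encodes the per-request table and adds the idle memory of the map. Two things are assumptions rather than statements from this guide: each range is treated as including its upper bound, and concurrent requests of the same size are assumed to add up linearly. The function names are hypothetical.

```shell
# Approximate per-request memory (MB), taken from the table above.
# Assumption: each range includes its upper bound.
request_memory_mb() {
  n=$1
  if   [ "$n" -le 100 ];   then echo 0
  elif [ "$n" -le 200 ];   then echo 10
  elif [ "$n" -le 600 ];   then echo 40
  elif [ "$n" -le 1100 ];  then echo 200
  elif [ "$n" -le 2000 ];  then echo 500
  elif [ "$n" -le 4000 ];  then echo 2000
  elif [ "$n" -le 6000 ];  then echo 4300
  elif [ "$n" -le 8000 ];  then echo 7600
  elif [ "$n" -le 10000 ]; then echo 12000
  elif [ "$n" -le 12000 ]; then echo 17000
  elif [ "$n" -le 14000 ]; then echo 23000
  elif [ "$n" -le 16000 ]; then echo 30000
  elif [ "$n" -le 18000 ]; then echo 38000
  elif [ "$n" -le 20000 ]; then echo 47000
  else
    echo "more than 20000 locations is not supported" >&2
    return 1
  fi
}

# MaxMemMap = MemMapIdle + max(MemUsedForRequests)
# Assumption: concurrent requests of the same size add up linearly.
# Arguments: idle memory (MB), locations per request, concurrent requests.
map_memory_mb() {
  idle_mb=$1
  locations=$2
  concurrent=$3
  per_request=$(request_memory_mb "$locations") || return 1
  echo $(( idle_mb + per_request * concurrent ))
}

# britain-and-ireland (5500 MB idle), two concurrent requests of 3000 locations each
map_memory_mb 5500 3000 2   # prints 9500
```

The result is only a starting point for the memory limit of a map instance; requests that do not fit are queued rather than rejected, as described above.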

CPU Requirements

The map instances use one core per request.

It’s possible to enable autoscaling of maps instances based on the percentage of CPU used by the instances, using the properties defined in maps.osrm.autoscaling (for details read Deploy maps integration section).

Maximum Thread count per job

It is recommended to configure the maximum thread count for each job in the platform. This value is based on the maximum number of cores in the nodes of the Kubernetes cluster that are running solver jobs. If unset, the default maximum thread count is 1. It can be set with the following configuration.

solver:

maxThreadCount: 8

Each model also has its own maximum thread count, which defines the concurrency level up to which the model still improves performance.
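Since solver.maxThreadCount is derived from the largest node that runs solver jobs, the value can be read from the cluster instead of guessed. The following sketch uses a hypothetical max_cores helper that takes one CPU count per line; the kubectl call in the usage comment lists the capacity of all nodes, so filtering it down to the nodes that actually run solver jobs is left as an assumption about your cluster layout.

```shell
# Reads one CPU count per line from stdin and prints the largest.
# Lines that are not plain integers (for example "7910m") are skipped.
max_cores() {
  max=0
  while read -r cores; do
    case "$cores" in
      ''|*[!0-9]*) continue ;;
    esac
    if [ "$cores" -gt "$max" ]; then
      max=$cores
    fi
  done
  echo "$max"
}

# Usage against a cluster (requires kubectl access):
# kubectl get nodes -o jsonpath='{range .items[*]}{.status.capacity.cpu}{"\n"}{end}' | max_cores
```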

In addition, a self-hosted installation runs as a single tenant on the default plan, which does not set any limits. As a result, a single run can take up all the memory of a Kubernetes node.

The memory limit for a single run can be set with the following configuration.

solver:

# max amount of memory (in MB) assigned as limit on pod

maxMemory: 16384

Maximum running time for job

This setting is a global value meant to prevent long-running jobs that exceed the regular execution time. It mainly protects platform resources from being used by misbehaving runs (infinite loops, never-ending solver phases and the like).

The value is expected to be an ISO-8601 duration and by default it is set to 24 hours (PT24H).

It can be set with the following configuration.

models:

maximumRunningDuration: PT24H

Configuring an external map provider

It’s possible to create an external map provider and configure the platform to use it. To see more details about the implementation of an external map provider, see the documentation for the maps service.

To configure it, set the external url of the provider in the configuration as described below.

maps:

externalProvider: "http://localhost:5000"

To be used in a run, the map provider must be configured in the subscription configuration of the tenant.

The configured map provider must be set to external-provider.

The mapsConfiguration should be set as the following:

{

...

"mapsConfiguration": {

"provider": "external-provider",

"location": "location"

},

...

}

The location is arbitrary and will be sent in the request to the external provider without additional processing.

Upgrade

If Timefold Platform is already installed, perform an upgrade instead of a new installation.

| This guide assumes that the installation has been performed according to the Timefold Platform documentation and that the orbit-values.yaml file is therefore already available. |

Preparation

Depending on the target version of the platform, you might need to follow preparation steps. Some steps are required and some are optional, depending on your platform installation.

0.42.0 version

Strict OIDC configuration

Strict OIDC configuration requires a valid OIDC setup, as it initiates the connection to the OIDC provider at start. If you don’t have a proper OIDC configuration, disable OIDC via the values file:

oauth:

enabled: false

# certs:

# issuer:

Disable oauth2-proxy component

The oauth2-proxy component acts as an OIDC proxy that initiates and manages authentication sessions. In pure headless environments this component brings little value, as it creates a new session for each request anyway. In addition, when opaque tokens are used it does not allow authentication at all, as it requires JWT tokens.

In situations where opaque tokens are used, it is recommended to turn off the proxy component.

oauth:

proxy: false

0.41.0 version

Added possibility of choosing transport type used for map. It can be set with the following configuration.

maps:

osrm:

locations:

- region: us-georgia

transportType: car

0.40.0 version

Added possibility of configuring the max distance from road (in meters) for a map before OSRM returns no route. It can be set with the following configuration.

maps:

osrm:

maxDistanceFromRoad: 10000

0.39.0 version

Diminished returns termination has been added. It is used by default when no unimproved spent limit is configured. The termination is configured globally and can be overridden when submitting a problem dataset.

Optionally, the values file can be extended with a models configuration that sets the global termination settings. Changes in this version:

-

terminationUnimprovedSpentLimit is now optional. When terminationUnimprovedSpentLimit is null, diminished returns termination is used (defaults to PT5M) expressed as ISO-8601 duration

models:

terminationSpentLimit: PT30M

terminationMaximumSpentLimit: PT60M

terminationUnimprovedSpentLimit:

terminationMaximumUnimprovedSpentLimit: PT5M

This version also added the possibility of configuring a global prefix for all buckets created by the platform, using the configuration below.

storage:

remote:

# Add a prefix to all created buckets (must be smaller than 28 characters)

bucketPrefix: "prefix-timefold-buckets-"

0.36.0 version

Added option to control maximum runtime of model pods.

models:

maximumRunningDuration: PT24H

Self-hosted environments run with a single tenant and the default plan, which does not set any restrictions on the maximum resources assigned when solving. This can cause unexpected behaviour: a single solver run can take up all the memory of the node and eventually be evicted. To prevent this at installation level, a limit can be set to restrict the maximum amount of memory (in MB):

solver:

# max amount of memory (in MB) assigned as limit on pod

maxMemory: 16384

0.30.0 version

Added option to deploy maps to an isolated node pool. See Deploy OSRM maps to a separate node pool section for more details.

maps:

isolated: true

0.28.0 version

Added option to configure an external map provider. See External map provider section for more details.

maps:

externalProvider: "http://localhost:5000"

0.27.0 version

Renamed the following replica count properties from Maps and Insights services:

- From insightsReplicaCount, to insights.replicaCount

- From mapsServiceReplicaCount, to maps.replicaCount
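Before upgrading across this version, an existing values file can be scanned for the old property names. The sketch below is a hypothetical helper built on grep; it only looks for the two top-level keys named above.

```shell
# Prints the lines (with line numbers) of a values file that still use the
# pre-0.27.0 property names. Returns non-zero when none are found.
find_renamed_replica_keys() {
  grep -nE '^[[:space:]]*(insightsReplicaCount|mapsServiceReplicaCount)[[:space:]]*:' "$1"
}

# Usage:
# find_renamed_replica_keys orbit-values.yaml \
#   && echo "rename these keys to insights.replicaCount / maps.replicaCount"
```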

0.26.0 version

Added option to configure external OSRM instances by location. The location should not contain any spaces.

maps:

osrm:

externalLocationsUrl:

customLocation1: "http://localhost:8080"

customLocation2: "http://localhost:8081"

0.25.0 version

Maps service component has been enhanced with scalability capabilities backed by Kubernetes StatefulSet resource. It can be enabled via maps.scalable property that needs to be set to true to deploy maps service as statefulset.

maps:

scalable: true

| Enabling the scalable maps service recreates the persistent volume currently in use. This means that any stored location sets will be removed. For that reason, the default value is false to keep backward compatibility, though it is recommended to switch to scalable mode, as it allows the maps service to run in multi-replica mode, which ensures high availability and fault tolerance. |

End-to-end encryption for Timefold components has been provided. See End to end encryption section for more details.

0.23.0 version

Removed the property storage.remote.expiration, which was used to configure the expiration of datasets in remote storage. This configuration is now part of each plan configuration.

Removed the property models.runOn, which was used to configure dedicated workers for solver, model and tenant. This configuration is now part of each plan configuration.

0.22.0 version

Added default configurations for notifications webhooks read and connect timeout duration. If not set, the default is 5 seconds. These can be set with the following configuration:

notifications:

webhook:

connectTimeout: PT5S

readTimeout: PT5S

0.20.0 version

Added the possibility of configuring the maximum thread count for each job in the platform. This value is based on the maximum number of cores in the nodes of the Kubernetes cluster that are running solver jobs. It is highly recommended that this configuration is set. If not set, defaults to 1. It can be set with the following configuration.

solver:

maxThreadCount: 8

0.16.0 version

-

The location sets endpoints were changed to use hyphens instead of camel case to separate the words (/api/admin/v1/tenants/{tenantId}/maps/location-sets).

-

It’s possible to configure a data cleanup policy to remove data sets after x days. This can be set via the orbit-values.yaml file with the following section:

storage:

# remote storage that is used to store solver data sets

remote:

# specifies expiration of data sets in remote storage in days - once elapsed data will be removed. When set to 0 no expiration

expiration: 7

This feature is not supported for Azure storage as it requires higher privileges to configure life cycle rules. Instead, the user should configure it directly in the Azure Portal or CLI.

-

The configuration bucket name (used to store internal data of the platform) is now configurable. This must be updated for S3 and Google Cloud Storage setups, as bucket names must be globally unique in those object stores. Before upgrading, make sure you create a uniquely named bucket (using the company name or domain name) and copy all files from the timefold-orbit-configuration bucket into the new one. Next, set the name of the configuration bucket in the orbit-values.yaml file under the following section:

storage:

remote:

name: YOUR_CUSTOM_BUCKET_NAME

replacing YOUR_CUSTOM_BUCKET_NAME with the name of your choice.

-

The order of the locations in a location set is now not relevant to the distance calculation.

0.15.0 version

The location sets configuration for the maps cache, which was introduced in version 0.13.0, was removed from helm and is now done via an API (/api/admin/v1/tenants/{tenantId}/maps/locationSets).

0.14.0 version

Fine-grained control over the termination of the solver has been added. The termination is configured globally and can be overridden when submitting a problem dataset. Configuring unimproved spent limit now allows to avoid running the solver when the score is not improving anymore. Setting maximum limits provides a safety net to prevent users from setting too high termination values.

Optionally, the values file can be extended with a models configuration that sets the termination settings:

-

terminationSpentLimit - maximum duration to keep the solver running - defaults to PT30M - expressed as ISO-8601 duration

-

terminationMaximumSpentLimit - maximum allowed limit for dataset-defined spentLimit - defaults to PT60M - expressed as ISO-8601 duration

-

terminationUnimprovedSpentLimit - if the score has not improved during this period terminate the solver - defaults to PT5M - expressed as ISO-8601 duration

-

terminationMaximumUnimprovedSpentLimit - maximum allowed limit for dataset-defined unimprovedSpentLimit - defaults to PT5M - expressed as ISO-8601 duration

models:

terminationSpentLimit: PT30M

terminationMaximumSpentLimit: PT60M

terminationUnimprovedSpentLimit: PT5M

terminationMaximumUnimprovedSpentLimit: PT5M

In case you want to opt out of the unimproved spent limit, configure it with the same value as the maximum spent limit:

models:
  terminationSpentLimit: PT30M
  terminationMaximumSpentLimit: PT60M
  terminationUnimprovedSpentLimit: PT60M
  terminationMaximumUnimprovedSpentLimit: PT60M

0.13.0 version

When using maps, it’s now possible to precalculate location sets. Location sets are then precalculated according to a refresh interval and stored in a cache by name. For details, read the Deploy maps integration section.

0.12.0 version

When using OSRM maps, requests are now throttled based on the memory available in each map instance.

Some optional configurations were added to control the requests to the map instances.

In addition, the configurations osrmOptions and osrmLocations were replaced by osrm.options and osrm.locations, respectively.

For details, read the Deploy maps integration section.
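The renamed keys can be detected in an existing values file before upgrading. This is a minimal sketch, assuming the old keys appear as plain YAML mapping keys at the start of a line (possibly indented); the function name find_legacy_osrm_keys is hypothetical and not part of the platform tooling.

```shell
# Hypothetical helper: prints every line (with its line number) of the given
# values file that still uses the pre-0.12.0 key names osrmOptions or osrmLocations.
# Exit status follows grep: 0 when legacy keys are found, non-zero when none are found.
find_legacy_osrm_keys() {
  grep -nE '^[[:space:]]*(osrmOptions|osrmLocations):' "$1"
}
```

Running `find_legacy_osrm_keys orbit-values.yaml` lists the lines that need to be moved under osrm.options and osrm.locations; no output means no legacy keys were matched.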

Optionally, the values file can be extended with a models configuration that sets the time to live for the solver worker pod:

-

runtimeTimeToLive - duration to keep the solver worker pod after solving has finished - expressed as ISO-8601 duration

-

idleRuntimeTimeToLive - duration to keep the solver worker pod without solving being activated (e.g. when performing recommended fit) - defaults to 10 minutes - expressed as ISO-8601 duration

models:
  runtimeTimeToLive: PT1M
  idleRuntimeTimeToLive: PT30M

0.10.0 version

When using maps, a new component called maps-service is provided; it is deployed automatically when enabled via the values file. In addition, when using OSRM, dedicated maps can also be deployed. For details, read the Deploy maps integration section.

| The maps service is equipped with a caching layer that requires a persistent volume in the Kubernetes cluster for best performance and functionality. If persistent volumes are not available, the cache volume should be disabled in the values file with the maps.cache.persistentVolume: null property. |

0.8.0 version

There are no required preparation steps.

Optionally, the values file can be extended with a models configuration that sets default execution parameters.

models:
  terminationSpentLimit: 30m
  # IMPORTANT: when setting resources, all must be set
  resources:
    limits:
      # max CPU allowed for the platform to be used
      cpu: 1
      # max memory allowed for the platform to be used
      memory: 512Mi
    requests:
      # guaranteed CPU for the platform to be used
      cpu: 1
      # guaranteed memory for the platform to be used
      memory: 512Mi
  runOn:
    # will only run on nodes that are marked as solver workers
    solverWorkers: false
    # will only run on nodes that are marked with model id
    modelDedicated: false
    # will only run on nodes that are marked with tenant id
    tenantDedicated: false

Execution

Log in to the Timefold Helm Chart repository to gain access to the Timefold Platform chart.

Use user as the username and the token provided by Timefold support as the password.

helm registry login ghcr.io/timefoldai

Once all preparation steps are completed, Timefold Platform can be upgraded with the following command:

Amazon S3 based installation

The upgrade command requires the following environment variables to be set:

-

NAMESPACE - Kubernetes namespace where the platform should be installed

-

OAUTH_CLIENT_SECRET - client secret of the application used for this environment

-

TOKEN - GitHub access token to container registry provided by Timefold support

-

LICENSE - Timefold Platform license string provided by Timefold support

-

AWS_ACCESS_KEY - AWS access key

-

AWS_ACCESS_SECRET - AWS access secret
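Since a missing variable would otherwise be passed to helm as an empty value, it can help to verify the list above before running the upgrade. This is a minimal sketch; the function name require_env is hypothetical and not part of the platform tooling.

```shell
# Hypothetical helper: checks that every named environment variable is set and non-empty.
# Prints one "missing: NAME" line to stderr per missing variable and
# returns non-zero if at least one is missing.
require_env() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For the Amazon S3 based installation, the check would be `require_env NAMESPACE OAUTH_CLIENT_SECRET TOKEN LICENSE AWS_ACCESS_KEY AWS_ACCESS_SECRET`. The same function can be called with the variable names of the other storage options.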

Once successfully logged in with the environment variables set, issue the upgrade command via helm:

helm upgrade --install --atomic --namespace "$NAMESPACE" timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \
  -f orbit-values.yaml \
  --create-namespace \
  --set namespace="$NAMESPACE" \
  --set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \
  --set image.registry.auth="$TOKEN" \
  --set license="$LICENSE" \
  --set secrets.data.awsAccessKey="$AWS_ACCESS_KEY" \
  --set secrets.data.awsAccessSecret="$AWS_ACCESS_SECRET"

| The command above installs (or upgrades to) the latest released version of the Timefold Platform. To install a specific version, append --version A.B.C to the command, where A.B.C is the version number to use. |
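When the upgrade is scripted, the optional --version argument can be produced by a small helper so that the same script serves both the latest and a pinned release. This is a minimal sketch; the function name version_flag is hypothetical, and the strict A.B.C pattern is an assumption based on the version format described above.

```shell
# Hypothetical helper: prints "--version A.B.C" when a version is given,
# prints nothing when the argument is empty (install the latest release),
# and fails for anything that is not three dot-separated numbers.
version_flag() {
  [ -z "${1:-}" ] && return 0
  if printf '%s\n' "$1" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'; then
    printf '%s %s\n' --version "$1"
  else
    echo "not an A.B.C version: $1" >&2
    return 1
  fi
}
```

Appending an unquoted `$(version_flag 0.15.0)` to the upgrade command adds the two words --version 0.15.0, while `$(version_flag "")` adds nothing.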

Google Cloud Storage based installation

The upgrade command requires the following environment variables to be set:

-

NAMESPACE - Kubernetes namespace where the platform should be installed

-

OAUTH_CLIENT_SECRET - client secret of the application used for this environment

-

TOKEN - GitHub access token to container registry provided by Timefold support

-

LICENSE - Timefold Platform license string provided by Timefold support

-

GCS_SERVICE_ACCOUNT - Service Account key for Google Cloud Storage

-

GCP_PROJECT_ID - Google Cloud Project id where storage is created

Once successfully logged in with the environment variables set, issue the upgrade command via helm:

helm upgrade --install --atomic --namespace "$NAMESPACE" timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \
  -f orbit-values.yaml \
  --create-namespace \
  --set namespace="$NAMESPACE" \
  --set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \
  --set image.registry.auth="$TOKEN" \
  --set license="$LICENSE" \
  --set secrets.stringData.serviceAccountKey="$GCS_SERVICE_ACCOUNT" \
  --set secrets.stringData.projectId="$GCP_PROJECT_ID"

| The command above installs (or upgrades to) the latest released version of the Timefold Platform. To install a specific version, append --version A.B.C to the command, where A.B.C is the version number to use. |

Azure BlobStore based installation

The upgrade command requires the following environment variables to be set:

-

NAMESPACE - Kubernetes namespace where the platform should be installed

-

OAUTH_CLIENT_SECRET - client secret of the application used for this environment

-

TOKEN - GitHub access token to container registry provided by Timefold support

-

LICENSE - Timefold Platform license string provided by Timefold support

-

AZ_CONNECTION_STRING - Azure BlobStore connection string

Once successfully logged in with the environment variables set, issue the upgrade command via helm:

helm upgrade --install --atomic --namespace "$NAMESPACE" timefold-orbit oci://ghcr.io/timefoldai/timefold-orbit-platform \
  -f orbit-values.yaml \
  --create-namespace \
  --set namespace="$NAMESPACE" \
  --set oauth.clientSecret="$OAUTH_CLIENT_SECRET" \
  --set image.registry.auth="$TOKEN" \
  --set license="$LICENSE" \
  --set secrets.data.azureStoreConnectionString="$AZ_CONNECTION_STRING"

| The command above installs (or upgrades to) the latest released version of the Timefold Platform. To install a specific version, append --version A.B.C to the command, where A.B.C is the version number to use. |