API usage

To interact with the Timefold APIs you need to authenticate with a valid API key. In the Timefold Platform you can configure API access and learn about the API specifications.
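As a minimal sketch of what an authenticated call could look like, the snippet below builds (but does not send) a request with Python's standard library. The header name `X-API-KEY`, the base URL, the model path, and the resource path are assumptions for illustration and are not stated on this page; check the model's OpenAPI Spec for the actual values.

```python
import urllib.request

# Assumptions (not confirmed by this page): header name, base URL, and paths.
API_KEY_HEADER = "X-API-KEY"
BASE_URL = "https://app.timefold.ai/api/models/example-model"  # hypothetical model path


def build_request(path: str, api_key: str, method: str = "GET") -> urllib.request.Request:
    """Build an HTTP request that carries the API key in a header."""
    url = f"{BASE_URL}/{path.lstrip('/')}"
    return urllib.request.Request(url, headers={API_KEY_HEADER: api_key}, method=method)


# Hypothetical resource and ID, used only to show the shape of the call.
request = build_request("/v1/example-resource/example-id", api_key="my-secret-key")
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

Keeping the key out of source code (for example, reading it from an environment variable) is usually preferable to the literal shown here.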

API keys

Generating API keys

When you are logged in, click the dropdown at the top right next to your username, then click Tenant settings. In the menu on the left-hand side, click API Keys to see the API Keys available for your tenant.

- Click Create API Key to generate a new API Key.

- Select All Models or Selected Models and specify which models the key has access to.

  We recommend creating one key for each model you use.

- Select All Permissions or Selected Permissions and specify which permissions the key has.

  The Read permission can be used with the GET method to retrieve existing information. It cannot be used, for instance, to request recommendations, as this involves creating the recommendations.

- Specify an expiry duration.

  If you don’t pick a date, the key will expire after 30 days.

- Give the API Key a descriptive name.

- Click Add API Key.

All API Keys generated for a tenant are visible to all administrators of the tenant. An API Key doesn’t have any user context and is purely connected to the tenant (and optionally to the models of the tenant).

API key permissions

The table below lists which permissions can call which endpoints, to help you decide which permissions each API key requires.

When deciding on which permissions to grant an API key, we recommend following the Principle of Least Privilege and only providing the permissions required for the task the API key has been created for.

| Method | Endpoint | Create | Read | Update | Delete |
|---|---|---|---|---|---|
| POST | /{version}/{resource} | Yes | No | No | No |
| DELETE | /{version}/{resource}/{id} | No | No | Yes | No |
| GET | /{version}/{resource}/{id} | Yes | Yes | Yes | Yes |
| POST | /{version}/{resource}/{id} | No | No | Yes | No |
| PUT | /{version}/{resource}/{id} | No | No | Yes | Yes |
| POST | /{version}/{resource}/{id}/from-input | Yes | No | No | No |
| POST | /{version}/{resource}/{id}/from-patch | Yes | No | No | No |
| PATCH | /{version}/{resource}/{id}/metadata | No | No | Yes | No |
| POST | /{version}/{resource}/{id}/new-run | Yes | No | No | No |
| DELETE | /{version}/{resource}/{id}/purge | No | No | No | Yes |
| PATCH | /{version}/{resource}/{id}/run | No | No | Yes | No |
| GET | /{version}/{resource}/{id}/{subresource} | Yes | Yes | Yes | Yes |
| GET | /{version}/{resource}/{id}/* | Yes | Yes | Yes | Yes |
| POST | /{version}/{resource}/recommendations/ | Yes | No | No | No |
| POST | /{version}/{resource}/{id}/recommendations/ | Yes | No | No | No |
| POST | /{version}/{resource}/score-analysis | Yes | No | No | No |
| GET | /{version}/{resource}/{id}/score-analysis | Yes | Yes | Yes | Yes |
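When reviewing which permissions a key needs, it can help to have the table in machine-readable form. The sketch below transcribes the table above into a Python dictionary and adds a hypothetical helper, `can_call`, that checks whether a set of granted permissions allows a given method and path template. The helper is for illustration only and is not part of the Timefold API.

```python
# Permission matrix transcribed from the table above.
# Keys are (HTTP method, path template); values are the permissions that may call it.
ALL = frozenset({"Create", "Read", "Update", "Delete"})
R = "/{version}/{resource}"

PERMISSIONS = {
    ("POST", R): {"Create"},
    ("DELETE", R + "/{id}"): {"Update"},
    ("GET", R + "/{id}"): ALL,
    ("POST", R + "/{id}"): {"Update"},
    ("PUT", R + "/{id}"): {"Update", "Delete"},
    ("POST", R + "/{id}/from-input"): {"Create"},
    ("POST", R + "/{id}/from-patch"): {"Create"},
    ("PATCH", R + "/{id}/metadata"): {"Update"},
    ("POST", R + "/{id}/new-run"): {"Create"},
    ("DELETE", R + "/{id}/purge"): {"Delete"},
    ("PATCH", R + "/{id}/run"): {"Update"},
    ("GET", R + "/{id}/{subresource}"): ALL,
    ("GET", R + "/{id}/*"): ALL,
    ("POST", R + "/recommendations/"): {"Create"},
    ("POST", R + "/{id}/recommendations/"): {"Create"},
    ("POST", R + "/score-analysis"): {"Create"},
    ("GET", R + "/{id}/score-analysis"): ALL,
}


def can_call(granted: set[str], method: str, template: str) -> bool:
    """Return True if at least one granted permission may call the endpoint."""
    return bool(set(granted) & PERMISSIONS[(method.upper(), template)])


print(can_call({"Read"}, "GET", R + "/{id}"))               # True
print(can_call({"Read"}, "POST", R + "/recommendations/"))  # False
```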

Revoking API keys

From the API Keys overview table you can delete existing keys. This can be done if an API Key is no longer needed, or if an API Key has leaked. Once an API Key is deleted, all subsequent API calls where the key is used will fail.

Common integrations and recommended permissions

Below are common ways customers integrate with the API and the minimal permissions we recommend for each flow:

- Data ingestion (ETL/ELT pipelines): Use Selected Models scoped to the destination model and grant Create to POST datasets plus Read to verify ingestion succeeded. Avoid Update/Delete; rely on source-of-truth systems for corrections.

- Automated solves (schedulers/CI jobs): Grant Create to submit new datasets/runs and Read to poll results. Keep keys model-scoped so a single automation job cannot trigger unrelated models.

- Dashboards and BI tools: Provision Read only for the models they need to visualize. This prevents dashboard users from mutating datasets while still allowing score analysis retrieval.

- Operational back-office tools (light editing of metadata): Grant Read and Update for the specific model resources they maintain. Do not include Create/Delete unless the tool must add/remove datasets.

- Testing or staging sandboxes: Keep the same principle of least privilege but add short expirations; restrict to staging models and only add Delete if the pipeline must routinely purge test data.
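The recommendations above can be written down as data and used to audit existing keys. The following is a small sketch under stated assumptions: the flow labels and the `excess_permissions` helper are hypothetical names chosen for this example, and the sandbox flow is omitted because its permissions depend on the pipeline being tested.

```python
# Recommended permission sets per integration flow, taken from the list above.
# The flow keys are hypothetical labels chosen for this sketch.
RECOMMENDED = {
    "data_ingestion": {"Create", "Read"},
    "automated_solves": {"Create", "Read"},
    "dashboards": {"Read"},
    "back_office": {"Read", "Update"},
}


def excess_permissions(flow: str, granted: set[str]) -> set[str]:
    """Return permissions a key holds beyond what is recommended for the flow."""
    return set(granted) - RECOMMENDED[flow]


# A dashboard key that also holds Delete is flagged as over-privileged.
print(sorted(excess_permissions("dashboards", {"Read", "Delete"})))  # ['Delete']
```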

Timefold Model APIs

Each model on the Timefold Platform has its own API, which conforms to the OpenAPI standard.

Explore the model API in the Timefold Platform UI

You can explore the API in Timefold Platform.

- Log in to app.timefold.ai.

- Select the model.

- Select OpenAPI Spec.

Model API Endpoints

To understand the API endpoints, select an endpoint to expand it. You will see:

- Parameters: The parameters required by the API endpoint.

- Request body: For POST endpoints, you can view example values that are accepted as part of the JSON input and the model schema.

- Response: The responses the API endpoints will return, example values, and the schema.

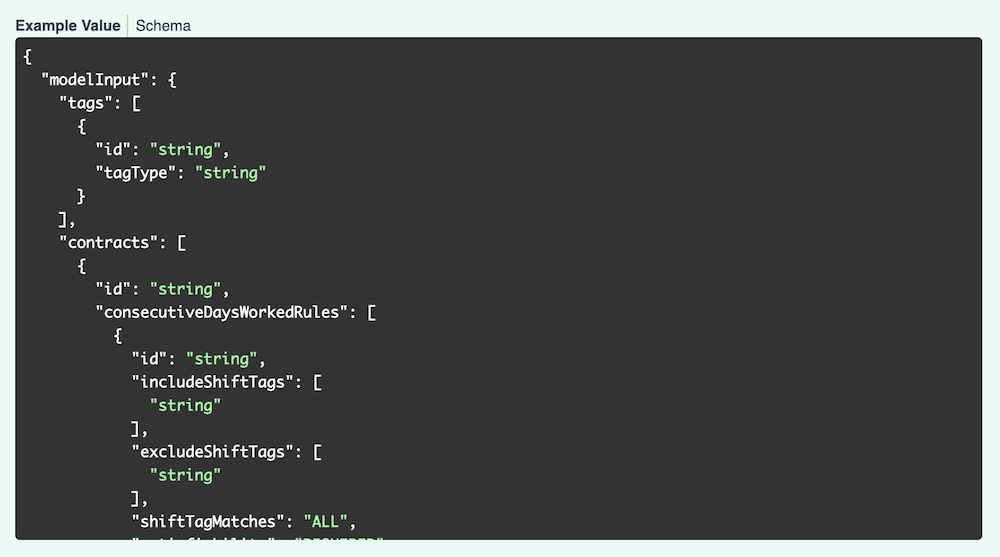

The Example Value tab for each endpoint shows an example of the JSON for that endpoint:

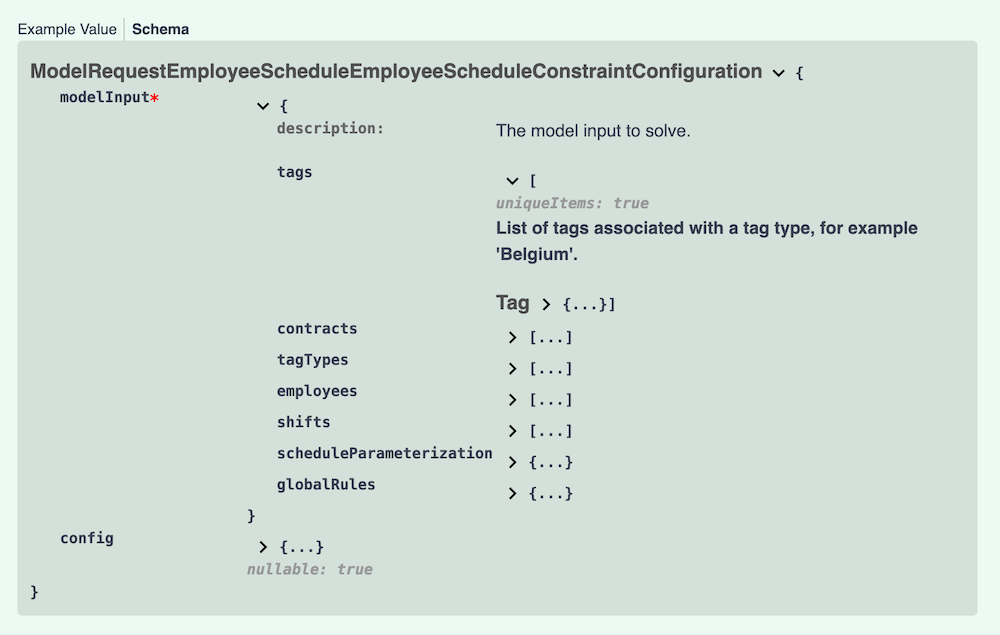

The Schema tab shows the values, their types, and a description. Each field can be expanded to view related information.
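Because each model API conforms to the OpenAPI standard, the same endpoint list shown in the UI can also be read from the specification document itself. The sketch below walks the standard `paths` object of an OpenAPI document and lists its operations. The example specification is hand-written for illustration and is not a real Timefold specification; how to download a model's specification as a file is not covered here.

```python
import json

# Operation keys defined for a path item in OpenAPI 3.0.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_operations(spec: dict) -> list[tuple[str, str]]:
    """Return sorted (METHOD, path) pairs found under the OpenAPI `paths` object."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        for key in item:
            if key.lower() in HTTP_METHODS:  # skip non-operation keys such as "parameters"
                operations.append((key.upper(), path))
    return sorted(operations)


# Tiny hand-written spec for illustration only.
example_spec = json.loads("""
{
  "openapi": "3.0.3",
  "paths": {
    "/v1/example-resource": {"post": {"summary": "Example operation"}},
    "/v1/example-resource/{id}": {
      "parameters": [],
      "get": {"summary": "Example operation"},
      "delete": {"summary": "Example operation"}
    }
  }
}
""")

for method, path in list_operations(example_spec):
    print(method, path)
```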

Testing the model APIs

To test the API functionality in the platform, you first need to authorize the API.

- Via the Tenant drop-down in the top right, select "Tenant Settings".

- Navigate to the API Keys section.

- Create or copy an API key that has permissions for the model you want to test.

- Navigate back to the model you want to test and select OpenAPI Spec.

- Click Authorize and paste the API key you just copied.

Demo datasets

Demo datasets that demonstrate the functionality are available. To view the list of demo datasets:

- Expand the GET /v1/demo-data endpoint.

- Click Try it out, then click Execute.

You will see the Curl instructions to request the dataset and the request URL.

You will also see the response to the request with the list of available datasets.

To download one of the listed datasets:

- Expand the GET /v1/demo-data/{demoDataId} endpoint.

- Click Try it out, enter the name of the dataset, for instance, "BASIC", and click Execute.

In the response body, you will see the dataset. Copy the dataset to use with subsequent endpoints.

You can also use your own dataset to test the functionality.

POST the dataset to request a solution.

The solution can be seen by navigating to the model’s Plans page and selecting the dataset. Alternatively, you can GET the solution from the API by providing the dataset ID returned by the previous POST operation.
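Outside the platform UI, the same submit-then-fetch flow could be scripted. The sketch below only builds the two requests and does not send them. The base URL, the resource name, and the `X-API-KEY` header are assumptions for illustration; take the real paths from the model's OpenAPI Spec. Where the dataset ID appears in the POST response is not described on this page, so the ID is passed in explicitly.

```python
import json
import urllib.request

# Assumptions for illustration: base URL, resource name, and header name.
BASE_URL = "https://app.timefold.ai/api/models/example-model"
RESOURCE = "example-resource"
API_KEY_HEADER = "X-API-KEY"


def submit_request(dataset: dict, api_key: str) -> urllib.request.Request:
    """POST /v1/{resource}: submit a dataset to request a solution."""
    body = json.dumps(dataset).encode("utf-8")
    headers = {API_KEY_HEADER: api_key, "Content-Type": "application/json"}
    url = f"{BASE_URL}/v1/{RESOURCE}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def solution_request(dataset_id: str, api_key: str) -> urllib.request.Request:
    """GET /v1/{resource}/{id}: fetch the dataset and its solution by ID."""
    url = f"{BASE_URL}/v1/{RESOURCE}/{dataset_id}"
    return urllib.request.Request(url, headers={API_KEY_HEADER: api_key}, method="GET")


post = submit_request({"example": "dataset"}, api_key="my-secret-key")
get = solution_request("example-id", api_key="my-secret-key")
print(post.get_method(), post.full_url)
print(get.get_method(), get.full_url)
# To send either request: urllib.request.urlopen(post)
```

Per the permissions table, the POST requires a key with the Create permission, while the GET works with any of the four permissions.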

Solver statuses

A dataset can be in one of several states:

| Status | Description | API value |
|---|---|---|
| Dataset created | A new dataset has been created from a new input dataset or a patch to an existing dataset. | |
| Dataset validated | The dataset has been validated. | |
| Dataset invalid | The submitted dataset was invalid. | |
| Dataset computed | The score analysis and KPIs for the dataset have been computed before solving. This can be useful for datasets that already include partial solutions (e.g. employees assigned to shifts, vehicles assigned to visits). | |
| Scheduled | The data is in the queue to be solved. | |
| Started | The input dataset is being converted into the planning problem and augmented with additional information, such as distance matrix (if applicable). | |
| Solving | The planning problem is currently being solved. | |
| Incomplete | A full solution was not found before the run was terminated. | |
| Completed | Solving has completed and no further solution will be generated. | |
| Failed | An error has occurred and solving was unsuccessful. | |

Read more about Dataset lifecycle.
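A client that polls for results needs to know when to stop. The sketch below models the statuses from the table as an enum and polls until a final status is reached. The enum values are the display labels from the Status column, not the API values, which may differ. Treating Dataset invalid, Incomplete, Completed, and Failed as final is this sketch's reading of the descriptions. `fetch_status` is a hypothetical callable you would implement against the API.

```python
import time
from enum import Enum
from typing import Callable


class Status(Enum):
    # Labels copied from the Status column; the actual API values may differ.
    DATASET_CREATED = "Dataset created"
    DATASET_VALIDATED = "Dataset validated"
    DATASET_INVALID = "Dataset invalid"
    DATASET_COMPUTED = "Dataset computed"
    SCHEDULED = "Scheduled"
    STARTED = "Started"
    SOLVING = "Solving"
    INCOMPLETE = "Incomplete"
    COMPLETED = "Completed"
    FAILED = "Failed"


# Assumption: these statuses will not change any further.
FINAL = {Status.DATASET_INVALID, Status.INCOMPLETE, Status.COMPLETED, Status.FAILED}


def wait_until_done(
    fetch_status: Callable[[], Status],
    interval_s: float = 5.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> Status:
    """Poll fetch_status until a final status is seen or max_polls is reached."""
    for _ in range(max_polls):
        status = fetch_status()
        if status in FINAL:
            return status
        sleep(interval_s)
    raise TimeoutError(f"no final status after {max_polls} polls")


# Usage with a fake status source standing in for real API calls.
fake = iter([Status.SCHEDULED, Status.STARTED, Status.SOLVING, Status.COMPLETED])
print(wait_until_done(lambda: next(fake), sleep=lambda _: None))  # Status.COMPLETED
```

Injecting `sleep` keeps the loop testable without real delays; in production the default `time.sleep` applies.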

Dealing with large datasets

Timefold is designed to support large-scale datasets. To help you work efficiently with substantial input files, the platform and individual models enforce certain limits and provide mechanisms to optimize data transfer.

Platform-level payload limits

The Timefold APIs enforce global payload size limits:

- Up to 100 MB when compressed (gzip)

- Up to 2 GB when uncompressed

We strongly recommend compressing your request payload. To do this, send your data as binary with gzip compression and include the header Content-Encoding: gzip. Likewise, if you set Accept-Encoding: gzip the API will return compressed responses when possible.
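The compression advice above can be sketched with Python's standard library. The function below serializes a dataset to JSON, gzip-compresses it, checks the compressed size against the 100 MB limit, and returns the body together with the headers named in the text. Interpreting 100 MB as 100 × 1024 × 1024 bytes and sending `Content-Type: application/json` are assumptions of this sketch.

```python
import gzip
import json

# 100 MB limit from the text; the exact byte count is an assumption.
MAX_COMPRESSED_BYTES = 100 * 1024 * 1024


def gzip_payload(dataset: dict) -> tuple[bytes, dict[str, str]]:
    """Serialize a dataset to JSON, gzip it, and return the body with matching headers."""
    body = gzip.compress(json.dumps(dataset).encode("utf-8"))
    if len(body) > MAX_COMPRESSED_BYTES:
        raise ValueError("compressed payload exceeds the platform limit")
    headers = {
        "Content-Type": "application/json",  # assumption: JSON request body
        "Content-Encoding": "gzip",          # the request body is gzip-compressed
        "Accept-Encoding": "gzip",           # ask for a compressed response when possible
    }
    return body, headers


body, headers = gzip_payload({"visits": list(range(1000))})
print(len(body), headers["Content-Encoding"])
```

The returned `body` is sent as the raw binary request body. When a response arrives with `Content-Encoding: gzip`, `gzip.decompress` restores the JSON text, unless your HTTP client already does this transparently.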


Model-level limits

In addition to the platform-level limits, some models enforce their own dataset restrictions to ensure optimal performance and system stability.

Working with large-scale datasets

We are committed to supporting real-world, enterprise-scale planning problems. If your dataset exceeds either the platform payload limit or a model-specific limit, or if you anticipate scaling beyond these thresholds, please contact Timefold Support. We are happy to discuss your use case and explore options to support larger workloads or provide tailored solutions.