Comparing datasets (preview)

This guide describes functionality that is currently available as a preview feature. If you’d like early access to this feature, please contact us.

The Comparison UI of the Timefold Platform helps you assess and compare multiple datasets at a high level, enabling better-informed decisions about your planning problems. Whether you’re testing new goals, configurations, or different scenarios, the comparison view brings clarity to the impact of each change.

When to use the Comparison UI

The Comparison UI supports several key use cases:

Goal alignment

Solve the same planning problem with different optimization goals, and compare the outcomes. This helps you understand the trade-offs between competing priorities and make informed decisions about what matters most.

Learn more: Balancing different optimization goals.

Benchmarking

Compare solutions under different termination settings or hardware configurations. This helps you assess the quality and speed of the model and identify potential areas for improvement.

What-if scenarios & scenario testing

Make simulated changes to your planning problem, such as increasing workload or modifying resource capacity, and compare the outcomes. This helps you make strategic operational decisions with confidence.

Follow-up over time

Compare multiple datasets for the same business unit over time, to track how workload or service quality evolves. This helps you monitor operations and spot trends.

The Comparison UI is designed for high-level analysis. It’s not intended for comparing specific employee metrics or resource assignments. For detailed plan visualizations, use the Dataset Visualizations instead.

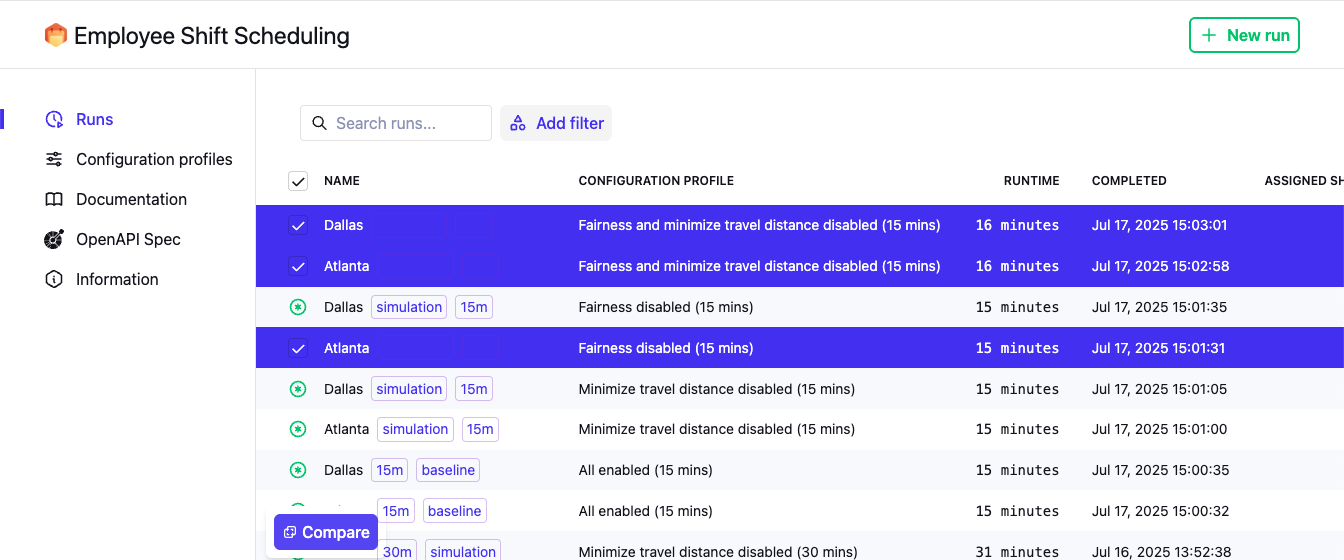

Starting a comparison

You always start a comparison from a Model Plans Overview. Select two or more of your datasets and click Compare at the bottom.

From the Comparison UI you can:

-

Re-order columns by dragging the handle icon in the column header.

-

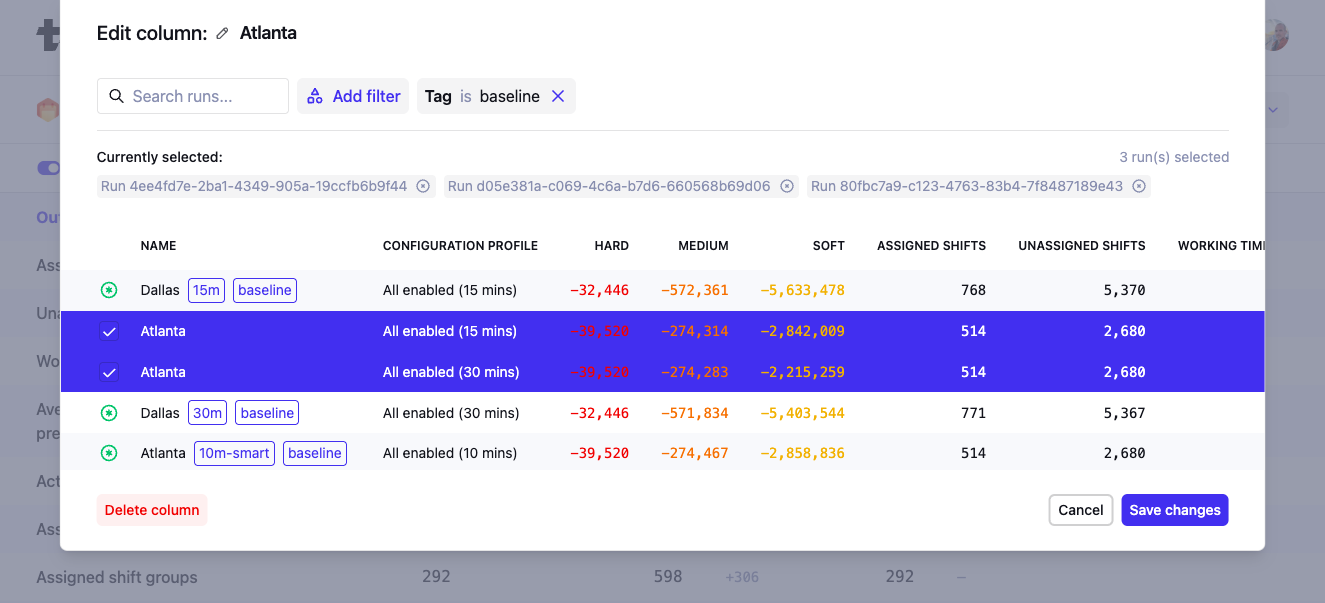

Edit or remove a column by clicking the pen icon in the column header to change which datasets are used for the column, and give columns a different name.

-

Click Add column at the top right of the table to add more columns to the comparison.

The order in which you select datasets in the Model Plans Overview determines the order of the columns in the Comparison UI. To avoid dragging, select your baseline dataset first.

How comparisons work

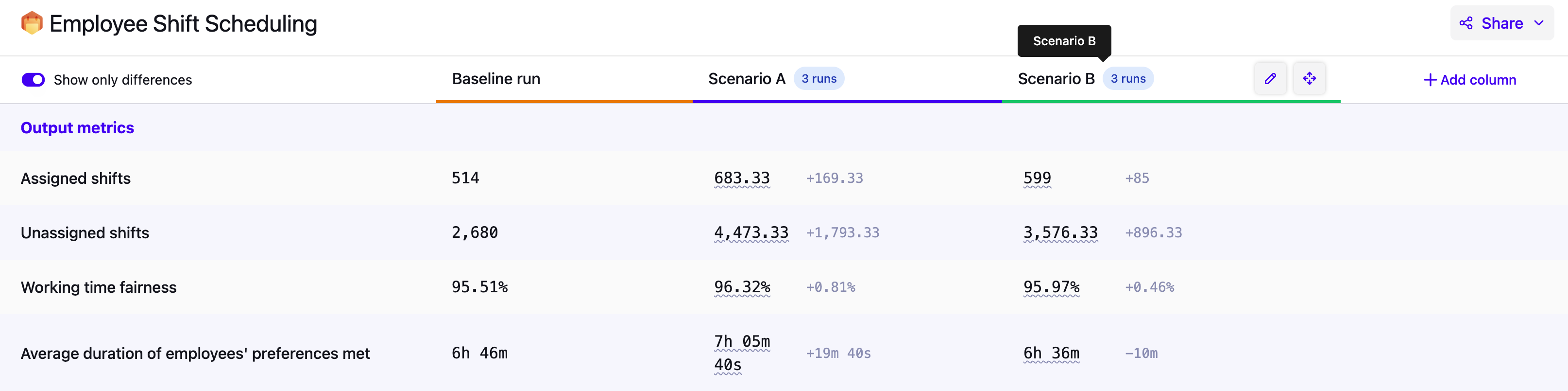

Each column in the comparison represents either:

- A single dataset: Best when you want to see detailed metrics for each dataset.
- A group of datasets: Best when you want to look for patterns and trends across multiple datasets. When a column represents multiple datasets, the values shown are averages. To aggregate datasets in a single column, click the pen icon on the column and select multiple datasets.

Use tags to categorize your datasets and scenarios. When editing a column in the Comparison UI, you can then filter on these tags to quickly find the relevant datasets. Learn more: Searching and categorizing datasets for auditability.

You can compare up to:

- 10 columns in total.
- 50 datasets per column.
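The column rules above can be pictured with a small Python sketch. It is not the platform's implementation: the `Column` class, the `MAX_COLUMNS` and `MAX_DATASETS_PER_COLUMN` names, and the sample metric are hypothetical, chosen only to illustrate that a group column shows the average of its datasets and that the documented limits apply.

```python
from dataclasses import dataclass, field
from statistics import mean

# Limits taken from this guide; the constant names are hypothetical.
MAX_COLUMNS = 10
MAX_DATASETS_PER_COLUMN = 50


@dataclass
class Column:
    """One comparison column: a single dataset or a group of datasets."""
    name: str
    # Each dataset is modeled as a dict of metric name -> numeric value.
    datasets: list = field(default_factory=list)

    def __post_init__(self) -> None:
        if not 1 <= len(self.datasets) <= MAX_DATASETS_PER_COLUMN:
            raise ValueError(
                f"a column needs 1 to {MAX_DATASETS_PER_COLUMN} datasets"
            )

    def value(self, metric: str) -> float:
        """Value shown for a metric: the average across the column's datasets."""
        return mean(d[metric] for d in self.datasets)


def build_comparison(columns: list) -> list:
    """Check the column limit; the first column acts as the baseline."""
    if len(columns) > MAX_COLUMNS:
        raise ValueError(f"a comparison holds at most {MAX_COLUMNS} columns")
    return columns


# Hypothetical example: one single-dataset column and one group column.
baseline = Column("Baseline", [{"travel_time_h": 40.0}])
group = Column("Longer solve", [{"travel_time_h": 36.0}, {"travel_time_h": 38.0}])
comparison = build_comparison([baseline, group])
print([(c.name, c.value("travel_time_h")) for c in comparison])
```

For a single-dataset column the average is simply that dataset's value, so both kinds of column can share one code path in this model.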

What we compare

Each comparison shows different types of metrics to help you interpret and analyze your results.

- Output metrics: These are the main results of your planning problem and are usually the most important to compare. They are ordered by the priorities defined by your model.
- Input metrics: These describe the size or scope of the problem (e.g. number of tasks, employees, vehicles). They help you put the output metrics in context.
- Score: The hard, medium, and soft scores of the datasets.
- Calculation metrics: These relate to the solving process (e.g. number of moves, move evaluation speed), and help you assess solver performance.
- Dataset info: Displays metadata about each dataset, such as the Dataset ID and dates.

Options and tips

-

Only show differences:

Toggle "Only show differences" to hide rows where metrics are the same across all columns. -

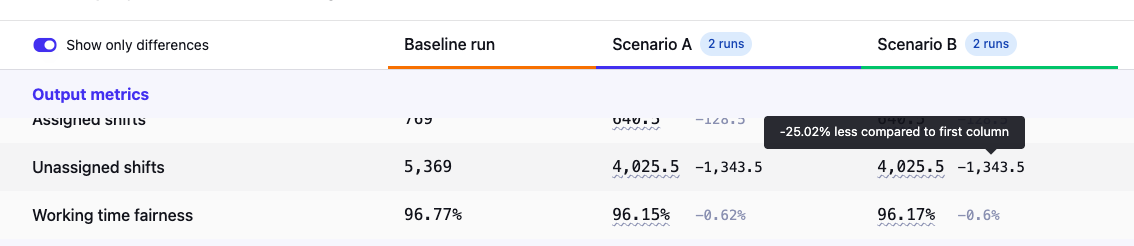

Difference versus baseline:

Differences from the first column are shown in dimmed text. To compare against a different baseline, reorder the columns. Hover over a difference to see it as a percentage.

-

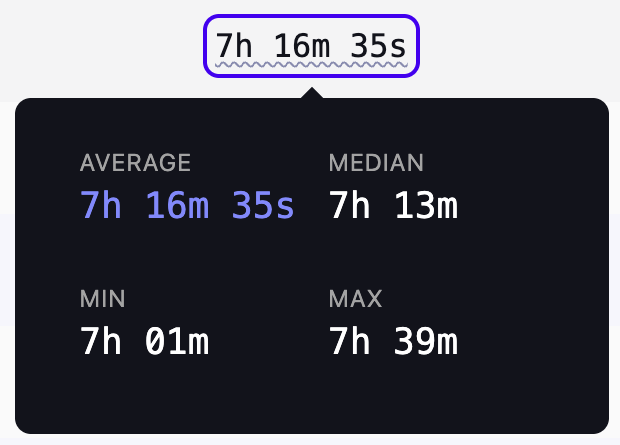

Distribution tooltips:

If you’re comparing a group of datasets, you’ll see underlined average values. Hover to reveal the min, max, median, and average.

-

Sharing results:

You can generate a PDF export of your comparison. (We recommend using landscape orientation for better readability.)
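The calculations behind these options can be sketched in a few lines of Python. This is an illustration under assumptions, not the platform's code: the function names and sample data are hypothetical, and returning `None` for a percentage when the baseline is zero is a choice made for this sketch, since the guide does not say how that case is displayed.

```python
from statistics import mean, median

# Hypothetical data: metric -> one value per column; the first column is the baseline.
rows = {
    "Total travel time (h)": [40.0, 37.0, 42.0],
    "Visits assigned": [120.0, 120.0, 120.0],
}


def differences_vs_baseline(values):
    """Return (absolute, percentage) differences of each later column vs. the first."""
    baseline = values[0]
    result = []
    for value in values[1:]:
        delta = value - baseline
        # A percentage is undefined when the baseline is zero (assumption: use None).
        percent = delta * 100 / baseline if baseline != 0 else None
        result.append((delta, percent))
    return result


def only_differences(table):
    """Keep only rows whose values are not identical across all columns."""
    return {metric: vals for metric, vals in table.items() if len(set(vals)) > 1}


def distribution(group_values):
    """Summary for a group column: min, max, median, and average."""
    return {
        "min": min(group_values),
        "max": max(group_values),
        "median": median(group_values),
        "average": mean(group_values),
    }


print(differences_vs_baseline(rows["Total travel time (h)"]))
print(list(only_differences(rows)))
print(distribution([36.0, 38.0, 37.0]))
```

In this sample, the "Visits assigned" row would be hidden by the differences filter because every column holds the same value, while the travel time row stays visible.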

Example questions the Comparison UI can answer

Here are some example questions the Comparison UI can help you answer:

- How did the staffing efficiency change this month compared to last?
- What would happen if we added more part-time employees?
- How does the total driving time change when we prioritize early deliveries more?
- Is the solution quality significantly better when using a longer solving time?
- What would the impact on service SLAs be if we lost a service team?

Preview status

This feature is currently available as a preview feature. If you’d like early access to this feature, please contact us.