Validating an optimized plan with Explainable AI

Introduction

When technology generates a plan, whether it’s an employee shift schedule, a technician’s route, or a production sequence, that plan may be near-optimal according to the PlanningAI’s logic, but that’s only half the battle. The real test comes when a human planner or employee opens the plan and decides whether to trust it. If people don’t trust the plan, they won’t use it, and an unused plan delivers none of its efficiency gains.

At Timefold, we build tools that empower planners, not replace them. That’s why we invest in making our AI explainable, transparent, and collaborative. The goal isn’t to ask people to blindly accept the output of a black box; it’s to support them with the best information while keeping them in control.

Why trust matters

Optimizing planning problems is no trivial task. Our optimization models explore vast numbers of possible combinations to propose plans that balance many competing objectives: employee preferences, fairness, costs, business rules, and more.

But even the best plan needs buy-in.

To trust a plan, people need to understand why certain decisions were made.

Planning software needs to:

- Generate correct and feasible plans. The proposed schedule must respect all hard rules like legal constraints, skill requirements, and working time regulations. If a plan breaks these fundamental rules, it doesn’t matter how explainable it is. That’s why planning optimization requires more than just language models; it needs to be based on robust solvers that guarantee correctness.
- Explain why a plan looks the way it does. Users need to understand the reasoning behind assignments, tradeoffs, and exceptions. Explainability builds confidence. A plan shouldn’t feel like a black box.
- Explain failure. Sometimes, no feasible plan or assignment exists. In those moments, the software must clearly explain why. Which constraint was too tight? What resource was missing? What combination of inputs led to a dead end?
- Allow for adaptation. Trust grows when users can make adjustments to the plan and instantly see how the system adapts.

How Timefold helps build trust

Timefold includes several features designed for explainability and adaptation.

Explainability

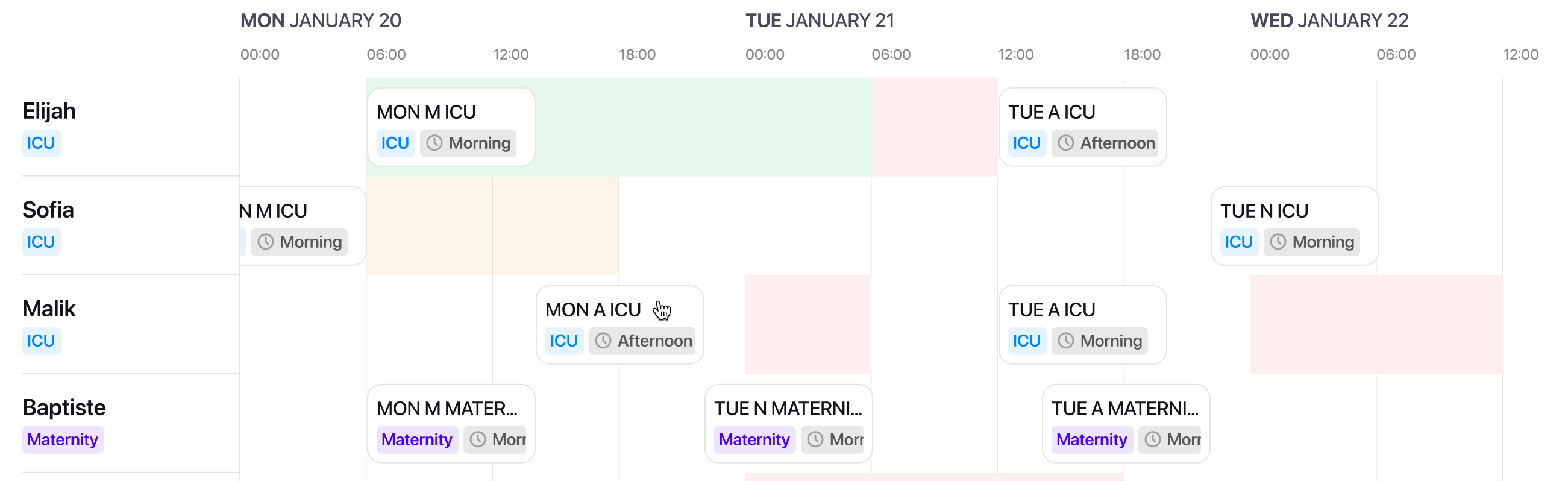

Plan visualizations

Our Platform UI gives planners a quick way to spot-check the result by visualizing each computed plan. You can zoom in on a specific employee, day, or assignment and understand how that piece fits into the bigger picture. The view doesn’t just show a calendar or route; it also highlights whether preferences are met, indicates dependencies, and more.

Timefold’s Visualization UI isn’t meant to be the primary tool for distributing the schedule to employees; it’s a tool for planners to build confidence in the plan’s logic.

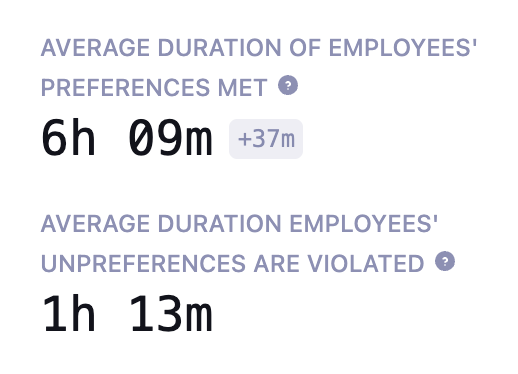

Assessing plan quality with real-world metrics

Behind every plan is a set of optimization goals, such as minimizing overtime, maximizing honored employee preferences, or balancing workloads. While optimization engines typically use scores to drive the solution, each Timefold Model also surfaces real-world metrics for each of these goals, so planners can assess the plan’s quality from a business perspective.
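To make the difference between a score and a business metric concrete, here is a minimal Python sketch that turns a plan into two real-world numbers: total overtime hours and the share of shifts that land on an employee’s preferred day. The `Shift` type, the input dictionaries, and both functions are hypothetical illustrations, not part of the Timefold Model API; each real model defines and computes its own metrics.

```python
from dataclasses import dataclass

# Hypothetical, simplified shift record used only for this illustration.
@dataclass(frozen=True)
class Shift:
    employee: str
    day: str
    hours: float

def overtime_hours(shifts, contract_hours):
    """Total hours worked beyond each employee's contracted hours."""
    worked = {}
    for s in shifts:
        worked[s.employee] = worked.get(s.employee, 0.0) + s.hours
    # Assumes contract_hours contains every employee that appears in the plan.
    return sum(max(0.0, h - contract_hours[e]) for e, h in worked.items())

def preference_rate(shifts, preferred_days):
    """Share of shifts on a day the assigned employee prefers (1.0 if there are no shifts)."""
    if not shifts:
        return 1.0
    honored = sum(1 for s in shifts if s.day in preferred_days.get(s.employee, set()))
    return honored / len(shifts)

# Illustrative data only.
shifts = [Shift("Ann", "Mon", 8), Shift("Ann", "Tue", 8), Shift("Bob", "Mon", 8)]
print(overtime_hours(shifts, {"Ann": 12, "Bob": 8}))
print(preference_rate(shifts, {"Ann": {"Mon"}, "Bob": {"Mon", "Wed"}}))
```

A planner reads “4 hours of overtime, two thirds of shifts on preferred days” far more easily than an abstract score, which is the point of surfacing metrics alongside it.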

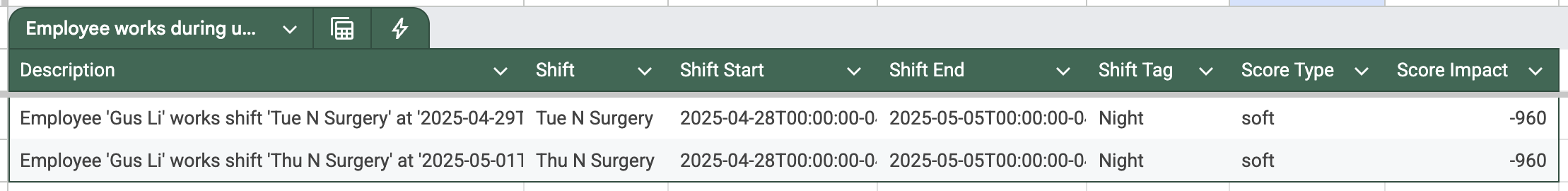

Analyze the solution per constraint

When a constraint can’t be fully satisfied, we explain why.

For example:

- Employee preferences couldn’t always be honored? We justify this with examples showing which preferences weren’t honored, and for which employees.
- Hours worked aren’t perfectly balanced across employees? We show how the hours are distributed between employees.

This kind of transparency transforms “Why didn’t it do X?” into “I see why X wasn’t feasible or optimal.”

To download these justifications, click “Export” below the Score Analysis table of a dataset.
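Once exported, justifications can be summarized per constraint. The following Python sketch assumes a simplified, hypothetical JSON shape (a flat list of matches, each with `constraint`, `employee`, and `detail` fields); the actual export format may differ, so treat the field names as placeholders to adapt.

```python
import json
from collections import defaultdict

# Hypothetical, simplified export: the real file's structure may differ.
EXPORT = """
[
  {"constraint": "Employee preferences", "employee": "Ann", "detail": "Assigned on a non-preferred day"},
  {"constraint": "Employee preferences", "employee": "Bob", "detail": "Assigned a non-preferred shift type"},
  {"constraint": "Balance hours", "employee": "Ann", "detail": "Above the team average"}
]
"""

def group_justifications(raw_json):
    """Group justification details by constraint name, keeping export order."""
    grouped = defaultdict(list)
    for match in json.loads(raw_json):
        grouped[match["constraint"]].append(f'{match["employee"]}: {match["detail"]}')
    return dict(grouped)

# Print a short per-constraint summary.
for constraint, details in group_justifications(EXPORT).items():
    print(f"{constraint} ({len(details)} matches)")
    for d in details:
        print("  -", d)
```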

Explaining unassigned items

If a task or shift remains unassigned, you can call the Recommendation APIs to see why certain employees couldn’t take on the assignment. Perhaps they lack the required skill, or they’re already fully booked. For examples of specific planning models, see the Field Service Routing Recommendations guide or the Employee Shift Scheduling Recommendations guide.
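The Recommendation APIs do this reasoning against the full model. As a rough illustration of the kind of per-employee explanation involved, here is a minimal Python sketch, assuming a toy model with only two hard rules: the employee must have the required skill, and the task must fit within their maximum hours. The `Employee` and `Task` types and `explain_candidates` are hypothetical and are not the Recommendation API.

```python
from dataclasses import dataclass

# Hypothetical, simplified types for illustration only.
@dataclass
class Employee:
    name: str
    skills: set
    booked_hours: float
    max_hours: float

@dataclass
class Task:
    name: str
    required_skill: str
    hours: float

def explain_candidates(task, employees):
    """Map each employee to the reasons they can't take the task (empty list = feasible)."""
    reasons = {}
    for e in employees:
        why = []
        if task.required_skill not in e.skills:
            why.append(f"lacks skill '{task.required_skill}'")
        if e.booked_hours + task.hours > e.max_hours:
            why.append("already fully booked")
        reasons[e.name] = why
    return reasons

task = Task("Boiler repair", "gas", 2)
team = [
    Employee("Ann", {"gas"}, 39, 40),
    Employee("Bob", {"electric"}, 20, 40),
    Employee("Cleo", {"gas", "electric"}, 30, 40),
]
for name, why in explain_candidates(task, team).items():
    print(name, "->", ", ".join(why) or "feasible")
```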

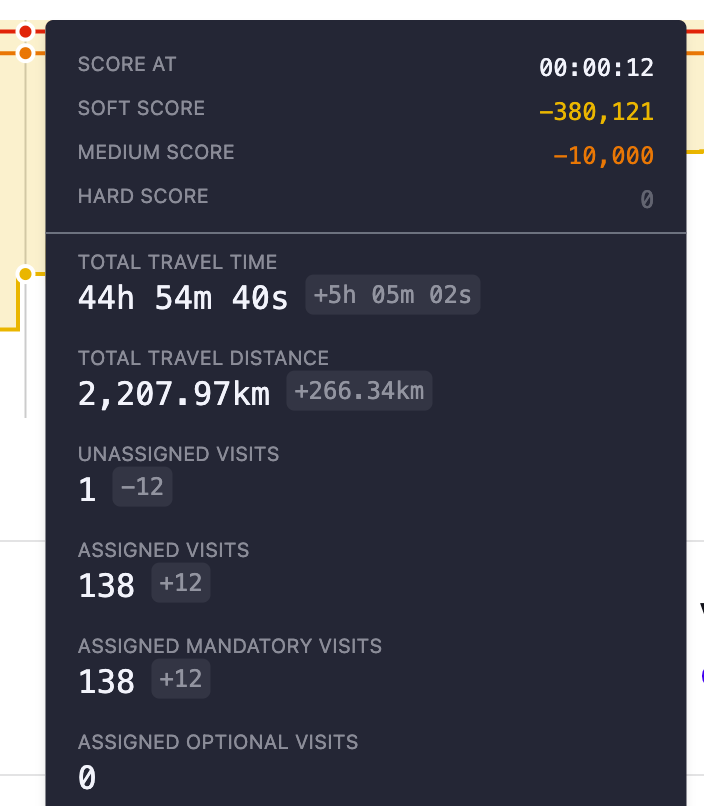

Tradeoff transparency during solving

With a multi-objective optimization profile, tradeoffs are inevitable; the value comes from seeing them.

The score graphs in the Timefold Platform UI show how each metric evolves during solving. You can see how improving one metric, such as honored employee preferences, can affect others, such as costs. This transparency lets you trust the plan not because it’s perfect, but because you understand the reasoning and tradeoffs behind it.

Adjusting the plan

Plan around manual assignments and exceptions

Sometimes a planner knows that certain parts of the plan must happen. Our “pinning” feature lets planners fix specific assignments manually, and the solver plans around them.

This is useful when:

- Some constraints are not yet modeled.
- Last-minute changes or exceptions arise.
- An emergency arises in which even hard constraints must be broken.
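To illustrate what “planning around” pinned assignments means, here is a minimal Python sketch. A simple greedy rule (give each open shift to the currently least-loaded employee) stands in for the solver’s actual optimization; the function name and data shapes are hypothetical. The key property is that pinned assignments are copied into the result unchanged and only count toward each employee’s load.

```python
def assign(shifts, employees, pinned):
    """Assign every shift to an employee, keeping pinned assignments fixed.

    shifts: list of shift ids; employees: list of names;
    pinned: dict of shift id -> employee that must not change.
    Unpinned shifts go greedily to the least-loaded employee
    (a stand-in for real optimization, not the Timefold solver).
    """
    plan = dict(pinned)  # pinned assignments are kept as-is and never revisited
    load = {e: 0 for e in employees}
    for employee in pinned.values():
        load[employee] += 1
    for shift in shifts:
        if shift in plan:
            continue
        # min() returns the first least-loaded employee, so ties follow list order.
        chosen = min(employees, key=lambda e: load[e])
        plan[shift] = chosen
        load[chosen] += 1
    return plan

plan = assign(["s1", "s2", "s3", "s4"], ["Ann", "Bob"], pinned={"s1": "Ann", "s2": "Ann"})
print(plan)
```

Because Ann already carries two pinned shifts, the remaining open shifts flow to Bob.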

Test the impact of manual changes

Sometimes a planner spots an assignment that just doesn’t feel right: one that seems unfair or counterintuitive. Instead of guessing whether a manual change would help or hurt, our system lets them test it.

By using the Model’s APIs, you can propose a change (like reassigning a task to a different person) and instantly see:

- If it’s feasible.
- How it affects different constraint scores.
- How it affects the top-level metrics of a plan, and the metrics of the affected employees.
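As a rough illustration of this kind of what-if evaluation, here is a minimal Python sketch that scores a plan before and after a proposed reassignment. It assumes a toy two-level score: a hard part counting skill violations (feasible means zero) and a soft part penalizing the gap between the most and least loaded employee. `score`, `evaluate_change`, and the data shapes are hypothetical and do not mirror the Model APIs.

```python
def score(plan, tasks, skills):
    """Return a (hard, soft) score; higher is better and 0 is the best possible.

    plan: task -> employee; tasks: task -> (required skill, hours);
    skills: employee -> set of skills.
    """
    hard = -sum(1 for t, e in plan.items() if tasks[t][0] not in skills[e])
    hours = {e: 0 for e in skills}
    for t, e in plan.items():
        hours[e] += tasks[t][1]
    soft = -(max(hours.values()) - min(hours.values()))  # workload imbalance penalty
    return hard, soft

def evaluate_change(plan, task, new_employee, tasks, skills):
    """Score a proposed reassignment without modifying the original plan."""
    proposed = {**plan, task: new_employee}
    before, after = score(plan, tasks, skills), score(proposed, tasks, skills)
    return {
        "feasible": after[0] == 0,
        "hard_delta": after[0] - before[0],
        "soft_delta": after[1] - before[1],
    }

tasks = {"t1": ("gas", 4), "t2": ("electric", 2), "t3": ("gas", 2)}
skills = {"Ann": {"gas"}, "Bob": {"gas", "electric"}}
plan = {"t1": "Ann", "t2": "Bob", "t3": "Bob"}
print(evaluate_change(plan, "t3", "Ann", tasks, skills))  # feasible, but less balanced
print(evaluate_change(plan, "t2", "Ann", tasks, skills))  # breaks a skill requirement
```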

Goal alignment: Redefining what “better” means

In many planning problems, there’s no single objective but multiple goals, often in tension with one another. The challenge is deciding which to prioritize: for instance, employee satisfaction, cost efficiency, or fairness.

With Timefold, planners can adjust the relative weights of these goals to better reflect business priorities. We call this goal alignment, and it can be done in the Platform UI with no coding required. This enables planners not just to accept the plan they’re given, but to steer the optimization toward the results they care about most, which might not always be the default.

Learn more in our Balancing different optimization goals guide.
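To see why changing weights changes which plan counts as “better”, here is a minimal Python sketch using a plain weighted sum of per-goal penalties. The weighted sum, the goal names, and the penalty numbers are illustrative assumptions; Timefold’s own weighting is configured in the Platform UI and may work differently.

```python
def weighted_penalty(metrics, weights):
    """Combine per-goal penalties (lower is better) using non-negative weights."""
    return sum(weights[goal] * metrics[goal] for goal in weights)

def best_plan(plans, weights):
    """Return the name of the plan with the lowest weighted penalty."""
    return min(plans, key=lambda name: weighted_penalty(plans[name], weights))

# Two hypothetical candidate plans: A is cheaper, B is fairer.
plans = {
    "A": {"cost": 100, "unfairness": 10},
    "B": {"cost": 120, "unfairness": 2},
}
print(best_plan(plans, {"cost": 1, "unfairness": 1}))  # equal weights
print(best_plan(plans, {"cost": 1, "unfairness": 5}))  # fairness weighted higher
```

With equal weights, plan A wins (110 vs. 122); raising the fairness weight to 5 flips the choice to plan B (150 vs. 130), without either plan changing.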

Tips for building trust

Tips for building trust with operational planners

These ideas help operational planners gain confidence in the system by exploring, testing, and understanding how and why the plan behaves the way it does.

- Test constraint impact: Temporarily disable a soft constraint to see how much it influences the result. This helps identify which goals are shaping the plan most.
- Explore edge cases: Change availability or assignments for key employees to see how the plan adapts. This reveals how sensitive the model is to different inputs.
- Use pinning for gradual adoption: When migrating from a manual process or another system, import the existing plan and pin parts of it. Timefold can score and optimize around what the planner already trusts and gradually take on more responsibility over time.
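The first tip, disabling a soft constraint and comparing results, can be sketched on a toy problem. In this minimal Python sketch, exhaustive search stands in for the solver (workable only for tiny examples), and the shifts, the “avoid” preferences, and both penalty functions are hypothetical.

```python
from itertools import product

SHIFTS = ["mon", "tue", "wed"]
EMPLOYEES = ["Ann", "Bob"]
# Hypothetical preferences: days each employee would rather not work.
AVOID = {"Ann": {"tue", "wed"}, "Bob": set()}

def preference_penalty(plan):
    """One point per shift assigned on a day the employee wants to avoid."""
    return sum(1 for shift, e in plan.items() if shift in AVOID[e])

def balance_penalty(plan):
    """Gap between the most and least busy employee, in number of shifts."""
    counts = [list(plan.values()).count(e) for e in EMPLOYEES]
    return max(counts) - min(counts)

def solve(constraints):
    """Brute-force the plan with the lowest summed penalty of the enabled constraints.

    Ties keep the first plan found in itertools.product order.
    """
    best = None
    for combo in product(EMPLOYEES, repeat=len(SHIFTS)):
        plan = dict(zip(SHIFTS, combo))
        penalty = sum(c(plan) for c in constraints)
        if best is None or penalty < best[0]:
            best = (penalty, plan)
    return best[1]

print(solve([preference_penalty, balance_penalty]))  # both soft constraints enabled
print(solve([balance_penalty]))                       # preference constraint disabled
```

With the preference constraint disabled, Ann gets a Tuesday shift she wanted to avoid, which shows that this constraint is actively shaping the plan.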

Tips for building trust with employees

Employees (technicians, nurses, drivers, etc.) are the ones most impacted by the plan. If they don’t understand or trust it, they may reject it, ignore it, or feel frustrated.

A thoughtfully designed UI can help. By using Timefold’s APIs, you can expose key information about why the employee’s plan looks the way it does and allow employees to safely explore alternatives.

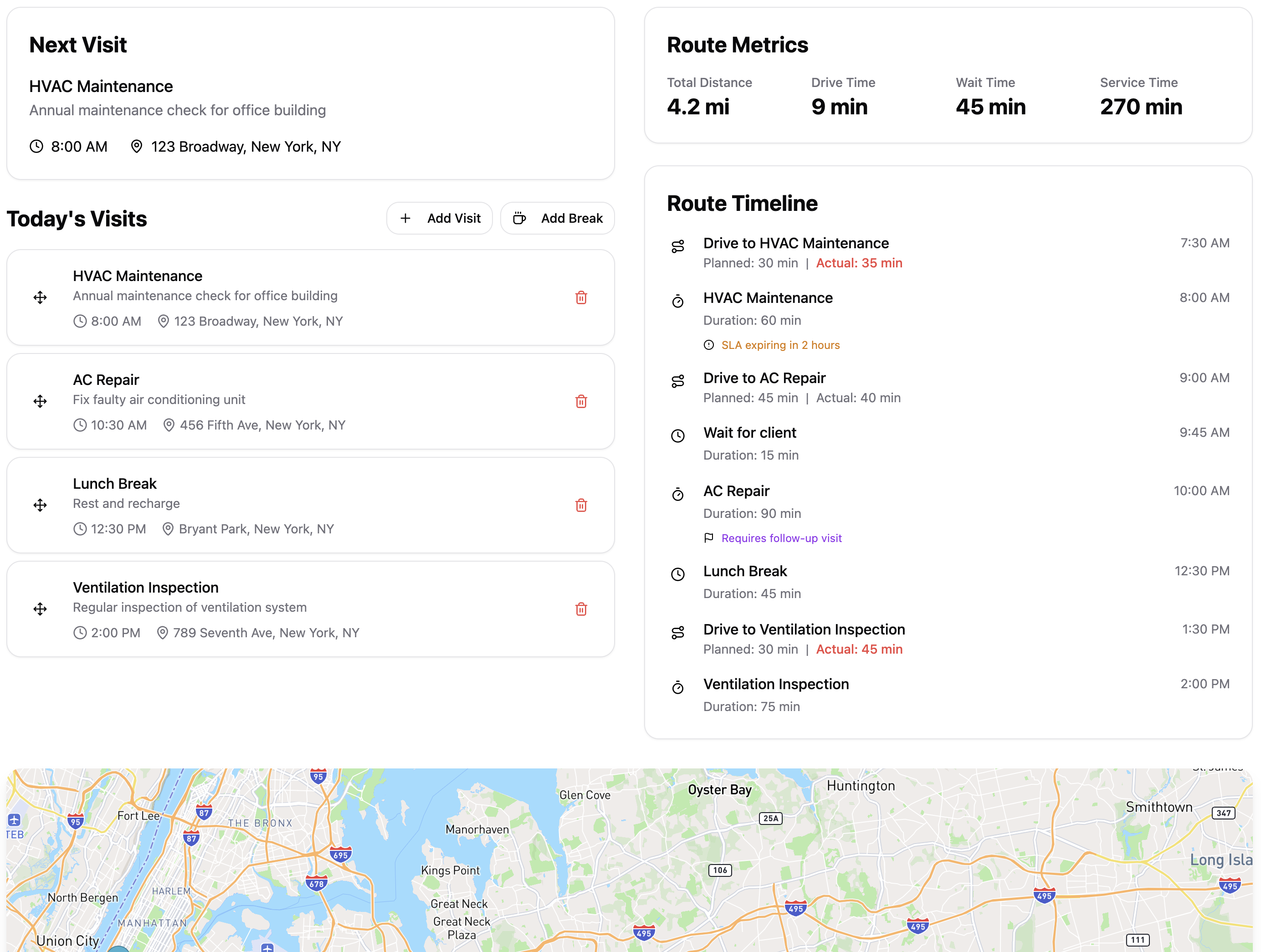

The example UI in the screenshot helps employees understand why the planned order makes sense:

- Clear overview and breakdown of driving time and distance for the employee.
- SLA indicators highlight tasks that must be completed early (indicated in orange).
- Dependency warnings show how tasks are connected, and what might break if one isn’t completed (indicated in purple).

In the example UI, employees can drag and reorder tasks in the “Today’s visits” section to explore alternatives, and the impact of each change is previewed immediately.

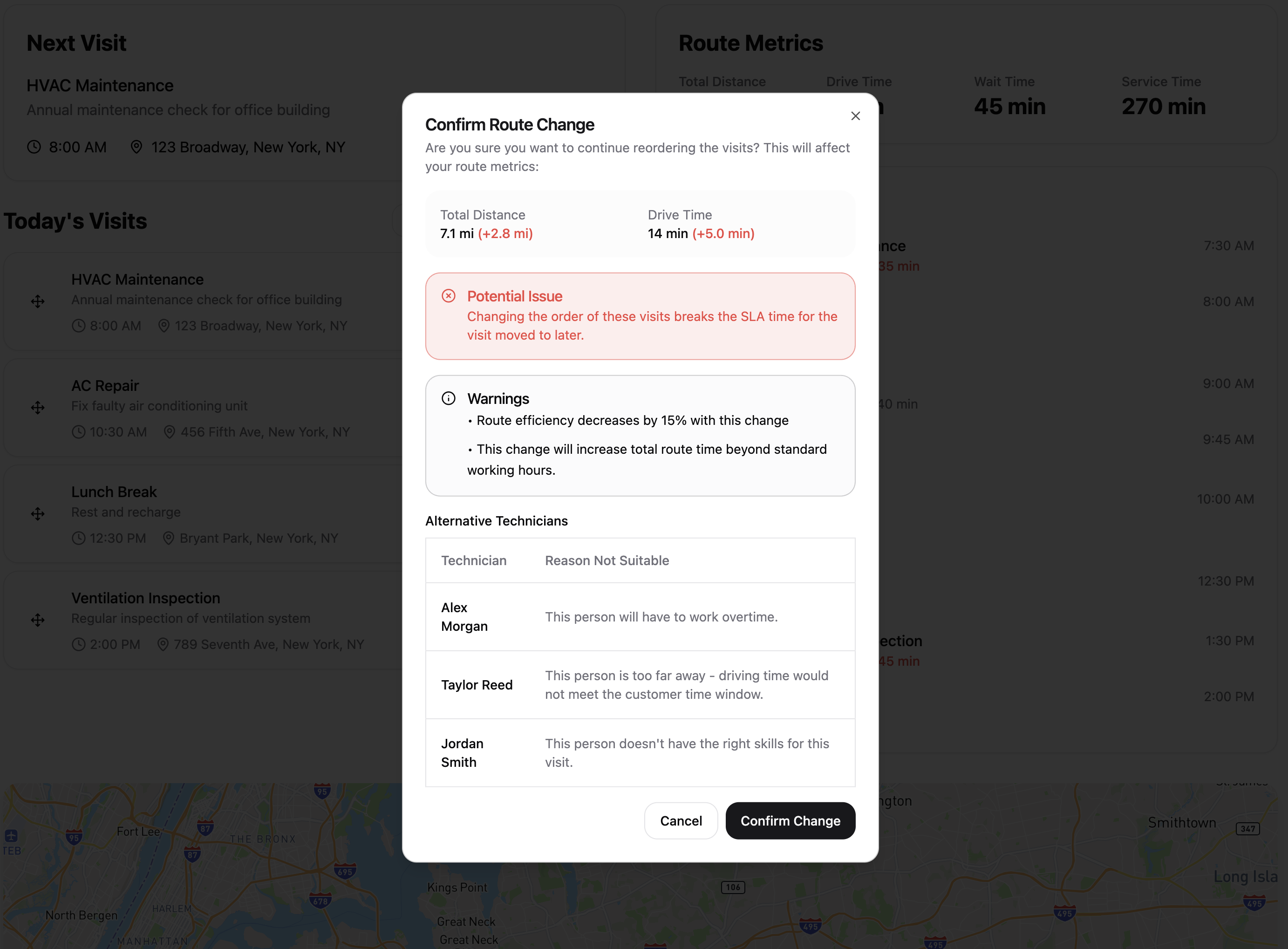

The screenshot shows that changing the order increases the driving time. The system also suggests alternative employees, along with explanations of why they may be less optimal or infeasible (e.g., lacking the right skills or requiring overtime).
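In the example UI this preview comes from Timefold’s APIs, but the underlying arithmetic of a driving-time comparison is simple. Here is a minimal Python sketch, assuming a hypothetical symmetric travel-time table in minutes and a round trip that starts and ends at a depot; a real routing model uses actual road distances and many more constraints.

```python
# Hypothetical symmetric travel times in minutes (illustrative only).
TRAVEL = {
    ("depot", "A"): 10, ("depot", "B"): 25, ("depot", "C"): 20,
    ("A", "B"): 15, ("A", "C"): 12, ("B", "C"): 8,
}

def minutes(a, b):
    """Travel time between two locations, looked up in either direction."""
    return TRAVEL[(a, b)] if (a, b) in TRAVEL else TRAVEL[(b, a)]

def driving_time(order, start="depot"):
    """Total minutes to visit `order` from `start` and return there."""
    stops = [start, *order, start]
    return sum(minutes(x, y) for x, y in zip(stops, stops[1:]))

def preview_reorder(current, proposed):
    """Extra driving minutes if the visits are done in `proposed` order (negative = saving)."""
    return driving_time(proposed) - driving_time(current)

print(driving_time(["A", "C", "B"]))
print(preview_reorder(["A", "C", "B"], ["B", "A", "C"]))
```

Here the planned order takes 55 minutes, and dragging visit B to the front would add 17 minutes of driving.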

All of this insight is powered by Timefold’s APIs. By surfacing it in your employee-facing UI, you can build trust and reduce friction on the ground.

Make plans conversational

With today’s technology, it’s also possible to surface this information in a more conversational way. Several of our customers have been successful using AI agents that let employees ask questions or flag issues. Even small features (like a “Why me?” or “Can this be swapped?” button) can make the plan feel more transparent and collaborative.

What’s next?

We’re actively investing in trust-building features for operational planners. Here are a few things on our radar:

- Efficiency X-ray: A reporting tool to reveal bottlenecks in plans, like scarce skills or limiting constraints. This tool will help you understand not just what the plan is, but why it couldn’t be better. Learn more about uncovering inefficiencies.
- What-if scenario exploration: This is already possible today, and we’re working on UI tools to make it easier to test different scenarios without coding. Try asking “What happens if we had two more employees with a specific skill?” or “What is the impact of longer lunch breaks?”
- Easily explorable justifications: We want to improve how users interact with constraint justifications, moving beyond downloadable JSON files toward a more powerful API and UI that let users explore and query them more intuitively.

These improvements will give planners even more control and insight, and therefore more reason to trust their plans.

Conclusion

The Timefold Platform allows planners to validate their intuition with data, without giving up control. We act as a collaborative assistant that supports better decisions rather than a rigid system that resists human input.

Our software doesn’t replace planners; it supports them. It gives recommendations backed by transparent logic, allows for tweaks and overrides, and shows the “why” behind every suggestion. When you understand why a plan looks the way it does, and you can adapt it, you’re far more likely to trust it, use it, and benefit from its optimizations.