Score calculation

We could implement an easy score calculator that uses a functional approach:

-

Java

private int doNotAssignAnn() {

return schedule.getShifts().stream()

.filter(Shift::isEmployeeAnn)

.mapToInt(shift -> -1)

.sum();

}However, that scales poorly because it doesn’t do an incremental calculation:

When the planning variable of a single Shift changes, to recalculate the score,

the normal Streams API has to execute the entire stream from scratch.

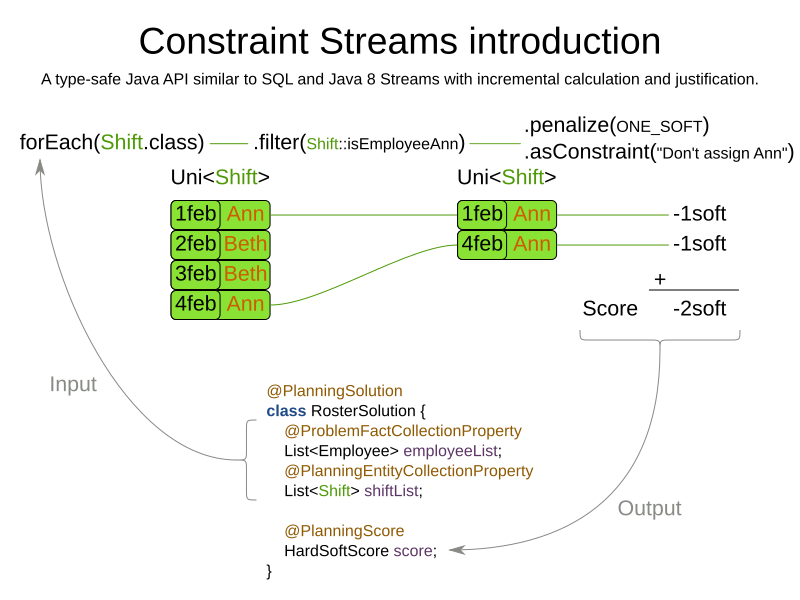

1. Introducing Constraint Streams

Constraint streams are a functional programming form of incremental score calculation that is easy to read, write and debug. The API should feel familiar if you’re familiar with Java Streams or SQL.

The Constraint Streams API enables you to write similar code in a language of your choice, while reaping the performance benefits of incremental score calculation. This is an example of the same code we’ve shown above, only using the Constraint Streams API:

-

Java

private Constraint doNotAssignAnn(ConstraintFactory factory) {

return factory.forEach(Shift.class)

.filter(Shift::isEmployeeAnn)

.penalize(HardSoftScore.ONE_SOFT)

.asConstraint("Don't assign Ann");

}This constraint stream iterates over all instances of class Shift in the problem facts and

planning entities

in the planning problem.

It finds every Shift which is assigned to employee Ann and for every such instance (also called a match), it adds a

soft penalty of 1 to the overall score.

The following figure illustrates this process on a problem with 4 different shifts:

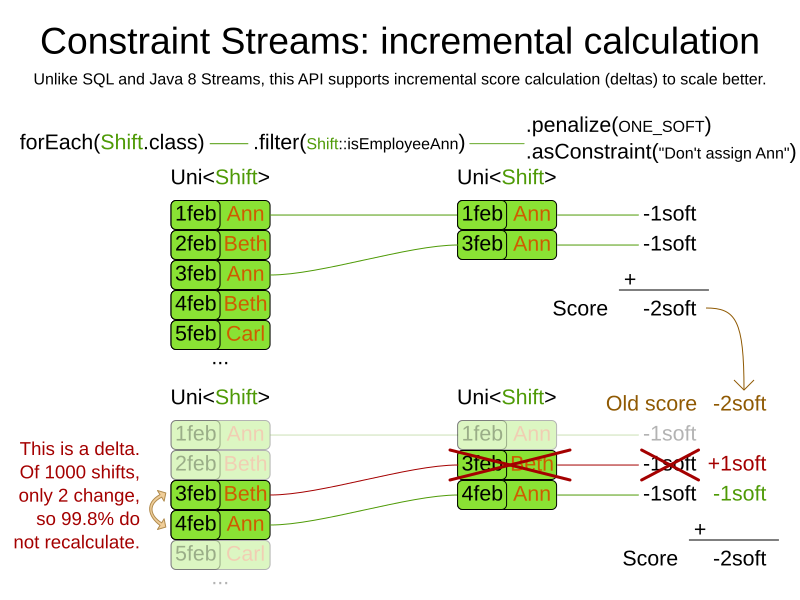

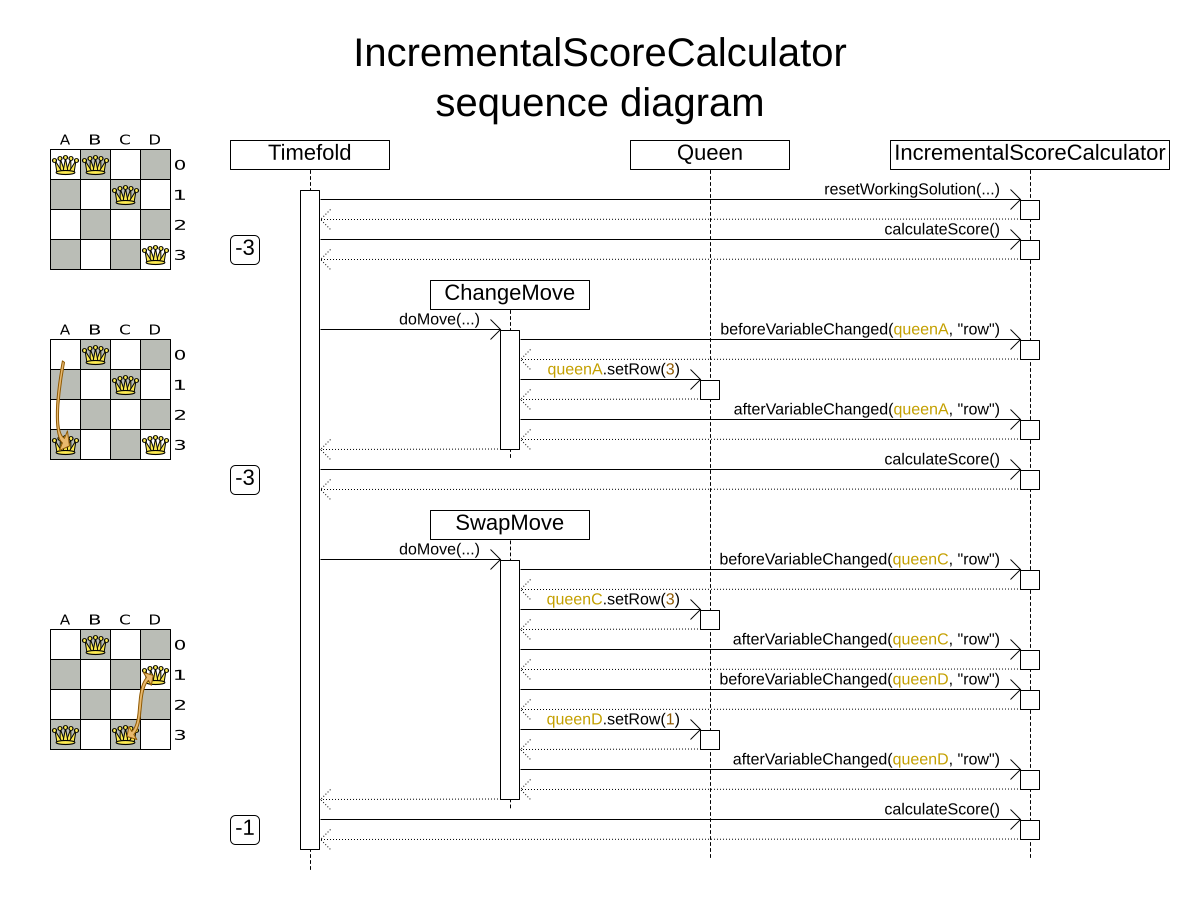

If any of the instances change during solving, the constraint stream automatically detects the change and only recalculates the minimum necessary portion of the problem that is affected by the change. The following figure illustrates this incremental score calculation:

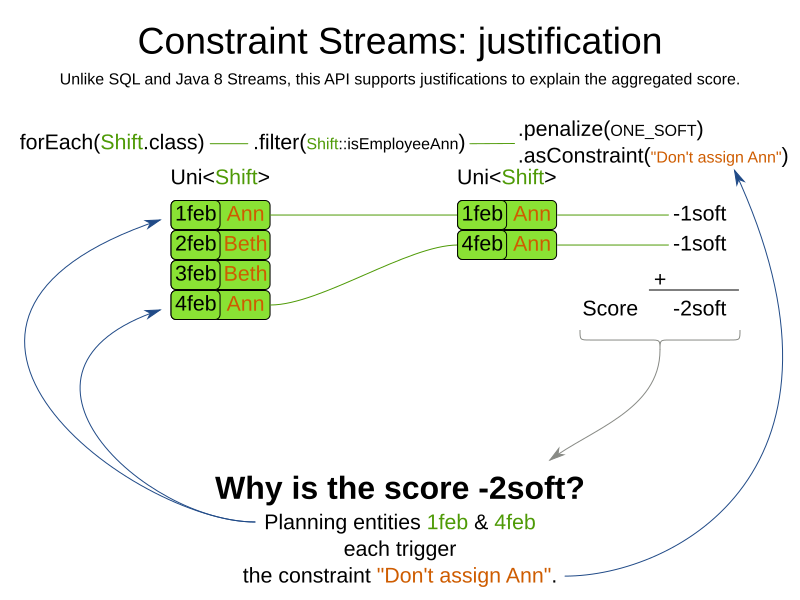

Constraint Streams API also has advanced support for score explanation through custom justifications and indictments.

2. Creating a constraint stream

To use the Constraint Streams API in your project, first write a ConstraintProvider implementation similar

to the following example.

-

Java

public class MyConstraintProvider implements ConstraintProvider {

@Override

public Constraint[] defineConstraints(ConstraintFactory factory) {

return new Constraint[] {

penalizeEveryShift(factory)

};

}

private Constraint penalizeEveryShift(ConstraintFactory factory) {

return factory.forEach(Shift.class)

.penalize(HardSoftScore.ONE_SOFT)

.asConstraint("Penalize a shift");

}

}|

This example contains one constraint, |

Add the following code to your solver configuration:

<solver xmlns="https://timefold.ai/xsd/solver" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="https://timefold.ai/xsd/solver https://timefold.ai/xsd/solver/solver.xsd">

<scoreDirectorFactory>

<constraintProviderClass>org.acme.schooltimetabling.solver.TimetableConstraintProvider</constraintProviderClass>

</scoreDirectorFactory>

...

</solver>3. Constraint stream cardinality

Constraint stream cardinality is a measure of how many objects a single constraint match consists of.

The simplest constraint stream has a cardinality of 1, meaning each constraint match only consists of 1 object.

Therefore, it is called a UniConstraintStream:

-

Java

private Constraint doNotAssignAnn(ConstraintFactory factory) {

return factory.forEach(Shift.class) // Returns UniStream<Shift>.

...

}Some constraint stream building blocks can increase stream cardinality, such as join or groupBy:

-

Java

private Constraint doNotAssignAnn(ConstraintFactory factory) {

return factory.forEach(Shift.class) // Returns Uni<Shift>.

.join(Employee.class) // Returns Bi<Shift, Employee>.

.join(DayOff.class) // Returns Tri<Shift, Employee, DayOff>.

.join(Country.class) // Returns Quad<Shift, Employee, DayOff, Country>.

...

}The latter can also decrease stream cardinality:

-

Java

private Constraint doNotAssignAnn(ConstraintFactory factory) {

return factory.forEach(Shift.class) // Returns UniStream<Shift>.

.join(Employee.class) // Returns BiStream<Shift, Employee>.

.groupBy((shift, employee) -> employee) // Returns UniStream<Employee>.

...

}The following constraint stream cardinalities are currently supported:

Cardinality |

Prefix |

Defining interface |

1 |

Uni |

|

2 |

Bi |

|

3 |

Tri |

|

4 |

Quad |

|

3.1. Achieving higher cardinalities

Timefold Solver currently does not support constraint stream cardinalities higher than 4. However, with tuple mapping effectively infinite cardinality is possible:

-

Java

private Constraint pentaStreamExample(ConstraintFactory factory) {

return factory.forEach(Shift.class) // UniConstraintStream<Shift>

.join(Shift.class) // BiConstraintStream<Shift, Shift>

.join(Shift.class) // TriConstraintStream<Shift, Shift, Shift>

.join(Shift.class) // QuadConstraintStream<Shift, Shift, Shift, Shift>

.map(MyTuple::of) // UniConstraintStream<MyTuple<Shift, Shift, Shift, Shift>>

.join(Shift.class) // BiConstraintStream<MyTuple<Shift, Shift, Shift, Shift>, Shift>

... // This BiConstraintStream carries 5 Shift elements.

}|

Timefold Solver does not provide any tuple implementations out of the box. It’s recommended to use one of the freely available 3rd party implementations. Should a custom implementation be necessary, see guidelines for mapping functions. |

4. Building blocks

Constraint streams are chains of different operations, called building blocks.

Each constraint stream starts with a forEach(…) building block and is terminated by either a penalty or a reward.

The following example shows the simplest possible constraint stream:

-

Java

private Constraint penalizeInitializedShifts(ConstraintFactory factory) {

return factory.forEach(Shift.class)

.penalize(HardSoftScore.ONE_SOFT)

.asConstraint("Initialized shift");

}This constraint stream penalizes each known and initialized instance of Shift.

4.1. ForEach

The .forEach(T) building block selects every T instance that

is in a problem fact collection

or a planning entity collection

that is consistent

and has no null genuine planning variables.

To include instances with a null genuine planning variable,

or planning values

not assigned to any planning list variable,

replace the forEach() building block by forEachIncludingUnassigned():

-

Java

private Constraint penalizeAllConsistentShifts(ConstraintFactory factory) {

return factory.forEachIncludingUnassigned(Shift.class)

.penalize(HardSoftScore.ONE_SOFT)

.asConstraint("A shift");

}To include inconsistent and unassigned instances, replace the forEach() building block by forEachUnfiltered():

-

Java

private Constraint penalizeAllShifts(ConstraintFactory factory) {

return factory.forEachUnfiltered(Shift.class)

.penalize(HardSoftScore.ONE_SOFT)

.asConstraint("A shift");

}In cases utilizing the planning list variable, you may want to include an inverse relation shadow variable to maximize performance of your constraints.

|

The |

4.2. Penalties and rewards

The purpose of constraint streams is to build up a score for a solution.

To do this, every constraint stream must contain a call to either a penalize() or a reward()

building block.

The penalize() building block makes the score worse and the reward() building block improves the score.

Each constraint stream is then terminated by calling asConstraint() method, which finally builds the constraint.

Constraints have several components:

-

Constraint name is the human-readable descriptive name for the constraint, which must be unique within the entire

ConstraintProviderimplementation. -

Constraint weight is a constant score value indicating how much every breach of the constraint affects the score. Valid examples include

SimpleScore.ONE,HardSoftScore.ONE_HARDandHardMediumSoftScore.of(1, 2, 3). -

Constraint match weigher is an optional function indicating how many times the constraint weight should be applied in the score. The penalty or reward score impact is the constraint weight multiplied by the match weight. The default value is

1.

|

Constraints with zero constraint weight are automatically disabled and do not impose any performance penalty. |

|

The constraint has another component: a constraint package. It has no practical impact on the solver and it has been deprecated. We recommend you don’t use constraint packages; the solver will choose a suitable default value. |

The Constraint Streams API supports many different types of penalties. Browse the API in your IDE for the full list of method overloads. Here are some examples:

-

Simple penalty (

penalize(SimpleScore.ONE)) makes the score worse by1per every match in the constraint stream. The score type must be the same type as used on the@PlanningScoreannotated member on the planning solution. -

Dynamic penalty (

penalize(SimpleScore.ONE, Shift::getHours)) makes the score worse by the number of hours in every matchingShiftin the constraint stream. This is an example of using a constraint match weigher. -

Configurable penalty (

penalizeConfigurable()) makes the score worse using constraint weights defined in constraint configuration. -

Configurable dynamic penalty(

penalizeConfigurable(Shift::getHours)) makes the score worse using constraint weights defined in constraint configuration, multiplied by the number of hours in every matchingShiftin the constraint stream.

By replacing the keyword penalize by reward in the name of these building blocks, you get operations that

affect score in the opposite direction.

4.2.1. Customizing justifications and indictments

One of important Timefold Solver features is its ability to explain the score of solutions it produced

through the use of justifications and indictments.

By default, each constraint is justified with ai.timefold.solver.core.api.score.stream.DefaultConstraintJustification,

and the final tuple makes up the indicted objects.

For example, in the following constraint, the indicted objects will be of type Vehicle and an Integer:

-

Java

protected Constraint vehicleCapacity(ConstraintFactory factory) {

return factory.forEach(Customer.class)

.filter(customer -> customer.getVehicle() != null)

.groupBy(Customer::getVehicle, sum(Customer::getDemand))

.filter((vehicle, demand) -> demand > vehicle.getCapacity())

.penalizeLong(HardSoftLongScore.ONE_HARD,

(vehicle, demand) -> demand - vehicle.getCapacity())

.asConstraint("vehicleCapacity");

}For the purposes of creating a heat map, the Vehicle is very important, but the naked Integer carries no semantics.

We can remove it by providing the indictWith(…) method with a custom indictment mapping:

-

Java

protected Constraint vehicleCapacity(ConstraintFactory factory) {

return factory.forEach(Customer.class)

.filter(customer -> customer.getVehicle() != null)

.groupBy(Customer::getVehicle, sum(Customer::getDemand))

.filter((vehicle, demand) -> demand > vehicle.getCapacity())

.penalizeLong(HardSoftLongScore.ONE_HARD,

(vehicle, demand) -> demand - vehicle.getCapacity())

.indictWith((vehicle, demand) -> List.of(vehicle))

.asConstraint("vehicleCapacity");

}The same mechanism can also be used to transform any of the indicted objects to any other object.

To present the constraint matches to the user or to send them over the wire where they can be further processed,

use the justifyWith(…) method to provide a custom constraint justification:

-

Java

protected Constraint vehicleCapacity(ConstraintFactory factory) {

return factory.forEach(Customer.class)

.filter(customer -> customer.getVehicle() != null)

.groupBy(Customer::getVehicle, sum(Customer::getDemand))

.filter((vehicle, demand) -> demand > vehicle.getCapacity())

.penalizeLong(HardSoftLongScore.ONE_HARD,

(vehicle, demand) -> demand - vehicle.getCapacity())

.justifyWith((vehicle, demand, score) ->

new VehicleDemandOveruse(vehicle, demand, score))

.indictWith((vehicle, demand) -> List.of(vehicle))

.asConstraint("vehicleCapacity");

}VehicleDemandOveruse is a custom type you have to implement.

You have complete control over the type, its name or methods exposed.

If you choose to decorate it with the proper annotations,

you will be able to send it over HTTP or store it in a database.

The only limitation is that it must implement the ai.timefold.solver.core.api.score.stream.ConstraintJustification marker interface.

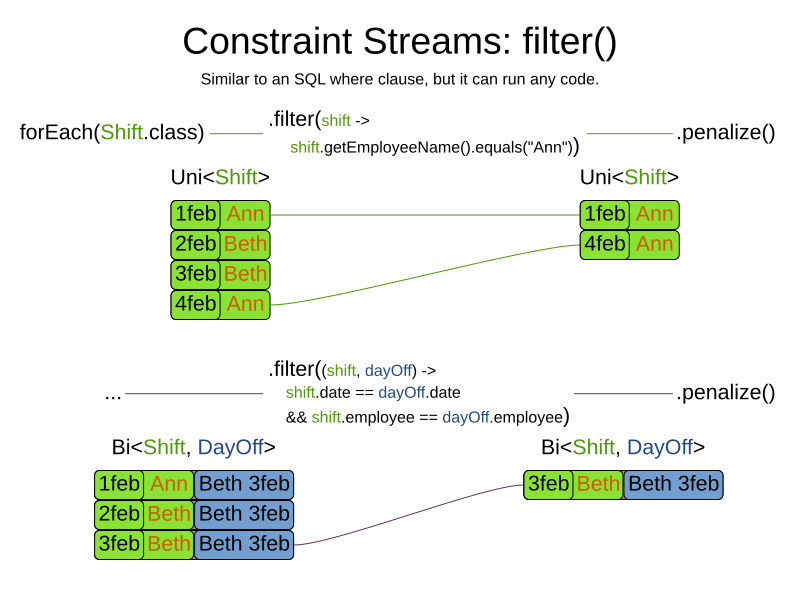

4.3. Filtering

Filtering enables you to reduce the number of constraint matches in your stream.

It first enumerates all constraint matches and then applies a predicate to filter some matches out.

The predicate is a function that only returns true if the match is to continue in the stream.

The following constraint stream removes all of Beth’s shifts from all Shift matches:

-

Java

private Constraint penalizeAnnShifts(ConstraintFactory factory) {

return factory.forEach(Shift.class)

.filter(shift -> shift.getEmployeeName().equals("Ann"))

.penalize(SimpleScore.ONE)

.asConstraint("Ann's shift");

}The following example retrieves a list of shifts where an employee has asked for a day off from a bi-constraint match

of Shift and DayOff:

-

Java

private Constraint penalizeShiftsOnOffDays(ConstraintFactory factory) {

return factory.forEach(Shift.class)

.join(DayOff.class)

.filter((shift, dayOff) -> shift.date == dayOff.date && shift.employee == dayOff.employee)

.penalize(SimpleScore.ONE)

.asConstraint("Shift on an off-day");

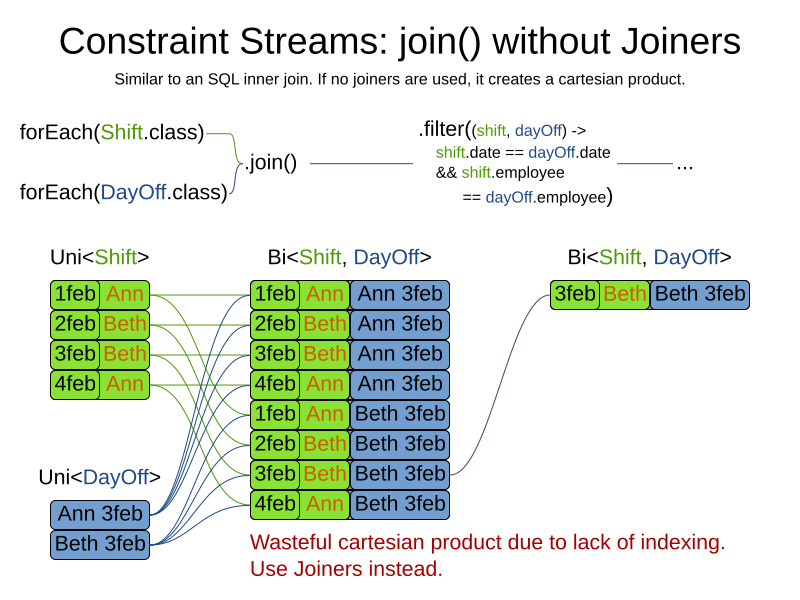

}The following figure illustrates both these examples:

|

For performance reasons, using the join building block with the appropriate |

The following functions are required for filtering constraint streams of different cardinality:

Cardinality |

Filtering Predicate |

1 |

|

2 |

|

3 |

|

4 |

|

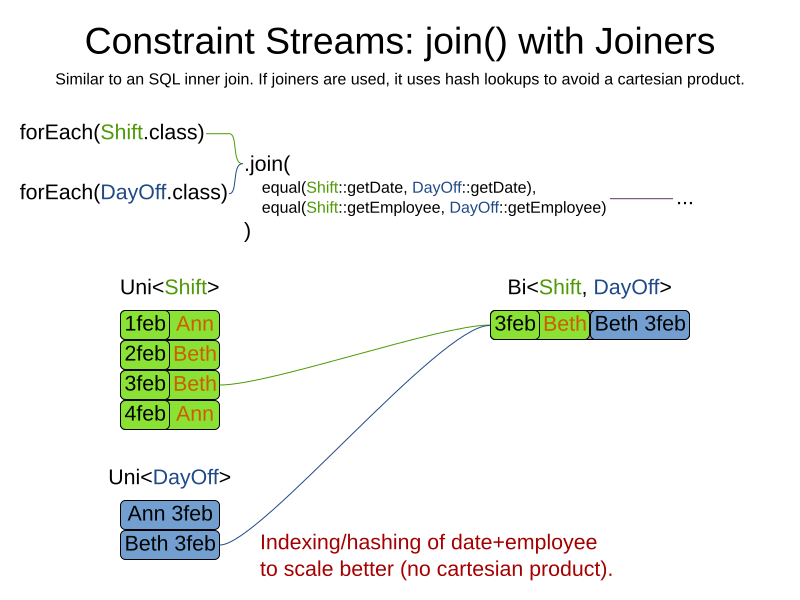

4.4. Joining

Joining is a way to increase stream cardinality and it is similar to the inner join

operation in SQL. As the following figure illustrates,

a join() creates a cartesian product of the streams being joined:

Doing this is inefficient if the resulting stream contains a lot of constraint matches that need to be filtered out immediately.

Instead, use a Joiner condition to restrict the joined matches only to those that are interesting:

For example:

-

Java

import static ai.timefold.solver.core.api.score.stream.Joiners.*;

...

private Constraint shiftOnDayOff(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Shift.class)

.join(DayOff.class,

equal(Shift::getDate, DayOff::getDate),

equal(Shift::getEmployee, DayOff::getEmployee))

.penalize(HardSoftScore.ONE_HARD)

.asConstraint("Shift on an off-day");

}Through the Joiners class, the following Joiner conditions are supported to join two streams,

pairing a match from each side:

-

equal(): the paired matches have a property that areequals(). This relies onhashCode(). -

greaterThan(),greaterThanOrEqual(),lessThan()andlessThanOrEqual(): the paired matches have aComparableproperty following the prescribed ordering. -

overlapping(): the paired matches have two properties (a start and an end property) of the sameComparabletype that both represent an interval which overlap.

All Joiners methods have an overloaded method to use the same property of the same class on both stream sides.

For example, calling equal(Shift::getEmployee) is the same as calling equal(Shift::getEmployee, Shift::getEmployee).

|

If the other stream might match multiple times, but it must only impact the score once (for each element of the original stream), use ifExists instead. It does not create cartesian products and therefore generally performs better. |

4.4.1. Evaluation of multiple joiners

When using multiple joiners, there are some important considerations to keep in mind. Consider the following example:

-

Java

factory.forEach(VehicleShift.class)

.join(Visit.class,

Joiners.equal(Function.identity(), Visit::getVehicleShift), // Visit's VehicleShift is not null...

Joiners.lessThan(

vehicleShift -> vehicleShift.getMaxTravelTime(),

visit -> visit.getVehicleShift().getMaxTravelTime() // ... yet NPE may be thrown here.

))When indexing joiners (such as equal() and lessThan()) check their indexes,

they take the input tuple and create a set of keys that will enter the index.

These keys are different for the left and right side of the joiner.

In the above example, from the left side,

the key is [VehicleShift instance && result of calling VehicleShift.getMaxTravelTime()].

(Using the first mapping function of each joiner.)

From the right side,

the key is [the result of calling Visit.getVehicleShift() && result of calling Visit.getVehicleShift().getMaxTravelTime()].

(Using the second mapping function of each joiner.)

However, both of the key mapping functions are calculated independently of the other,

and therefore the lessThan() joiner’s mapping functions will be executed even in cases

when the equal() joiner would not match.

This leads to a NullPointerException being thrown in the example above,

where the lessThan() joiner’s mapping functions are executed on a Visit instance

that has a null vehicleShift property which wasn’t (yet) filtered out by the equal() joiner.

The filtering only happens inside the joiner’s indexes and to access them,

we need these keys to be generated first.

To avoid these issues, do not assume that subsequent joiners' mapping functions only apply after the previous joiners have matched. Alternatively (and possibly at the cost of reduced performance) use the filtering joiner, which is processed differently and does not suffer from this issue:

-

Java

factory.forEach(VehicleShift.class)

.join(Visit.class,

Joiners.equal(Function.identity(), Visit::getVehicleShift), // Visit's VehicleShift is not null...

Joiners.filtering((vehicleShift, visit) ->

vehicleShift.getMaxTravelTime() < visit.getVehicleShift().getMaxTravelTime()

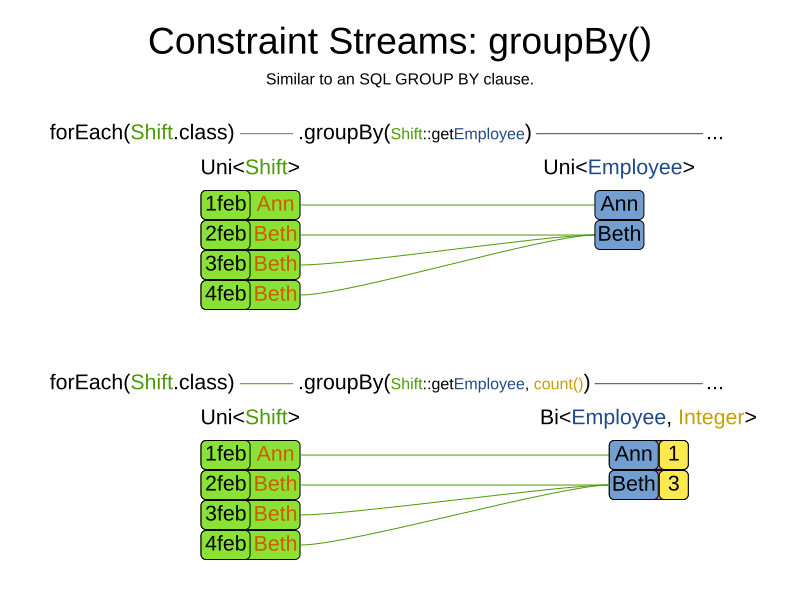

))4.5. Grouping and collectors

Grouping collects items in a stream according to user-provider criteria (also called "group key"), similar to what a

GROUP BY SQL clause does. Additionally, some grouping operations also accept one or more Collector instances, which

provide various aggregation functions. The following figure illustrates a simple groupBy() operation:

|

Objects used as group key must obey the general contract of For this reason, it is not recommended to use mutable objects (especially mutable collections) as group keys. If planning entities are used as group keys, their hashCode must not be computed off of planning variables. Failure to follow this recommendation may result in runtime exceptions being thrown. |

For example, the following code snippet first groups all visits by the vehicle that will be used,

counts them using the ConstraintCollectors.count(…) collector,

and finally penalizes every vehicle which makes more than 10 visits:

-

Java

import static ai.timefold.solver.core.api.score.stream.ConstraintCollectors.*;

...

private Constraint tooManyVisits(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class)

.groupBy(Visit::getVehicle, count())

.filter((vehicle, visitCount) -> visitCount > 10)

.penalize(HardSoftScore.ONE_HARD,

(vehicle, visitCount) -> visitCount - 10)

.asConstraint("tooManyVisits");

}|

Information might be lost during grouping.

In the previous example,

|

There are many collectors available out of the box.

You can also provide your own collectors by implementing the

ai.timefold.solver.core.api.score.stream.uni.UniConstraintCollector interface,

or its Bi…, Tri… and Quad… counterparts.

4.5.1. count() collector

The ConstraintCollectors.count(…) counts all elements per group. For example, the following use of the collector

gives a number of items for two separate groups - one where the talks have unavailable speakers, and one where they

don’t.

-

Java

private Constraint speakerAvailability(ConstraintFactory factory) {

return factory.forEach(Talk.class)

.groupBy(Talk::hasAnyUnavailableSpeaker, count())

.penalize(HardSoftScore.ONE_HARD,

(hasUnavailableSpeaker, count) -> ...)

.asConstraint("speakerAvailability");

}The count is collected in an int. Variants of this collector:

-

countLong()collects alongvalue instead of anintvalue.

To count a bi, tri or quad stream, use countBi(), countTri() or countQuad() respectively,

because - unlike the other built-in collectors - they aren’t overloaded methods due to Java’s generics erasure.

4.5.2. countDistinct() collector

The ConstraintCollectors.countDistinct(…) counts any element per group once, regardless of how many times it

occurs. For example, the following use of the collector gives a number of talks in each unique room.

-

Java

private Constraint roomCount(ConstraintFactory factory) {

return factory.forEach(Talk.class)

.groupBy(Talk::getRoom, countDistinct())

.penalize(HardSoftScore.ONE_SOFT,

(room, count) -> ...)

.asConstraint("roomCount");

}The distinct count is collected in an int. Variants of this collector:

-

countDistinctLong()collects alongvalue instead of anintvalue.

4.5.3. sum() collector

To sum the values of a particular property of all elements per group,

use the ConstraintCollectors.sum(…) collector.

The following code snippet first groups all visits by the vehicle that will be used,

and sums up all the service durations using the ConstraintCollectors.sum(…) collector.

-

Java

private Constraint totalServiceDuration(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class)

.groupBy(Visit::getVehicle, sum(Visit::getServiceDuration))

.penalize(HardSoftScore.ONE_SOFT,

(vehicle, totalServiceDuration) -> totalServiceDuration)

.asConstraint("totalServiceDuration");

}The sum is collected in an int. Variants of this collector:

-

sumLong()collects alongvalue instead of anintvalue. -

sumBigDecimal()collects ajava.math.BigDecimalvalue instead of anintvalue. -

sumBigInteger()collects ajava.math.BigIntegervalue instead of anintvalue. -

sumDuration()collects ajava.time.Durationvalue instead of anintvalue. -

sumPeriod()collects ajava.time.Periodvalue instead of anintvalue. -

a generic

sum()variant for summing up custom types

4.5.4. average() collector

To calculate the average of a particular property of all elements per group, use the ConstraintCollectors.average(…)

collector.

The following code snippet first groups all visits by the vehicle that will be used,

and averages all the service durations using the ConstraintCollectors.average(…) collector.

-

Java

private Constraint averageServiceDuration(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class)

.groupBy(Visit::getVehicle, average(Visit::getServiceDuration))

.penalize(HardSoftScore.ONE_SOFT,

(vehicle, averageServiceDuration) -> (int) averageServiceDuration)

.asConstraint("averageServiceDuration");

}The average is collected as a double, and the average of no elements is null.

Variants of this collector:

-

averageLong()collects alongvalue instead of anintvalue. -

averageBigDecimal()collects ajava.math.BigDecimalvalue instead of anintvalue, resulting in aBigDecimalaverage. -

averageBigInteger()collects ajava.math.BigIntegervalue instead of anintvalue, resulting in aBigDecimalaverage. -

averageDuration()collects ajava.time.Durationvalue instead of anintvalue, resulting in aDurationaverage.

4.5.5. min() and max() collectors

To extract the minimum or maximum per group, use the ConstraintCollectors.min(…) and

ConstraintCollectors.max(…) collectors respectively.

These collectors operate on values of properties which are Comparable (such as Integer, String or Duration),

although there are also variants of these collectors which allow you to provide your own Comparator.

The following code snippet first groups all visits by the vehicle that will be used,

and finds the maximum in the group using the ConstraintCollectors.max(…) collector.

-

Java

private Constraint maxServiceDuration(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class)

.groupBy(Visit::getVehicle, max(Visit::getServiceDuration))

.penalize(HardSoftScore.ONE_SOFT,

(vehicle, maxServiceDuration) -> maxServiceDuration)

.asConstraint("maxServiceDuration");

}|

|

4.5.6. toList(), toSet() and toMap() collectors

To extract all elements per group into a collection, use the ConstraintCollectors.toList(…).

The following example retrieves all visits for a given vehicle in a List:

-

Java

private Constraint vehicleAndItsVisits(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class)

.groupBy(Visit::getVehicle, toList())

.penalize(HardSoftScore.ONE_HARD,

(vehicle, visitList) -> ...)

.asConstraint("vehicleAndItsVisits");

}Variants of this collector:

-

toList()collects aListvalue. -

toSet()collects aSetvalue. -

toSortedSet()collects aSortedSetvalue. -

toMap()collects aMapvalue. -

toSortedMap()collects aSortedMapvalue.

|

The iteration order of elements in the resulting collection is not guaranteed to be stable,

unless it is a sorted collector such as |

|

Collecting elements into a |

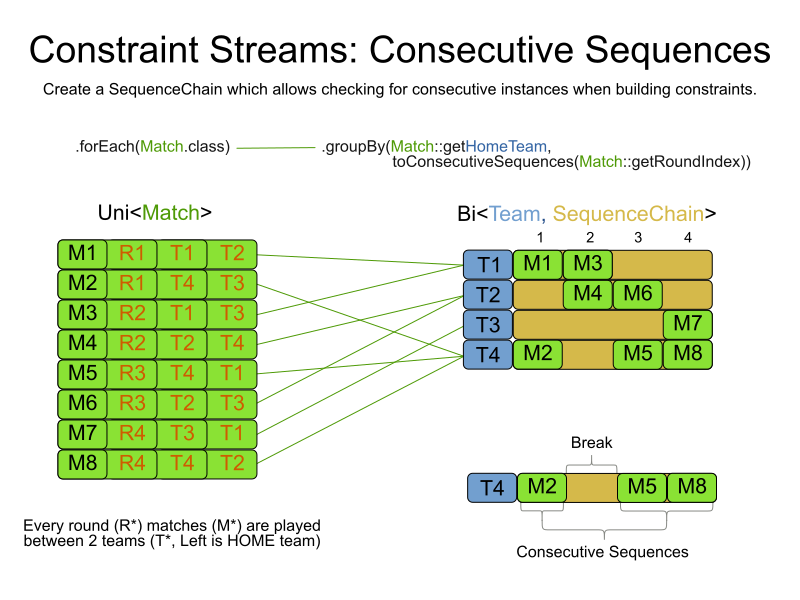

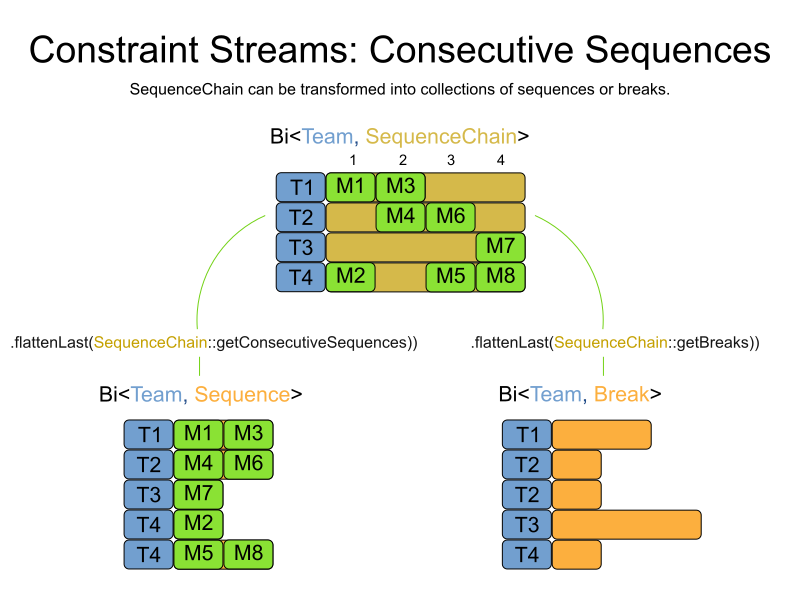

4.5.7. Consecutive collectors

Certain constraints, such as maximum consecutive working days for an employee

or the number of matches played at home in a sports league,

require ordering those shifts or matches in sequences and penalizing sequences exceeding a certain threshold.

If those sequences can be of arbitrary length unknown at the time of writing the constraints,

you can implement this pattern using the ConstraintCollectors.toConsecutiveSequences(…) collector:

-

Java

Constraint multipleConsecutiveHomeMatches(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Match.class)

.groupBy(match -> Match::getHomeTeam,

ConstraintCollectors.toConsecutiveSequences(Match::getRoundId))

.flattenLast(SequenceChain::getConsecutiveSequences)

.filter((team, matches) -> matches.getCount() >= MAX_CONSECUTIVE_MATCHES)

.penalize(HardSoftScore.ONE_HARD, (team, matches) -> matches.getCount())

.asConstraint("4 or more consecutive home matches");

}

Let’s take a closer look at the crucial part of this constraint:

-

Java

.groupBy(match -> Match::getHomeTeam,

ConstraintCollectors.toConsecutiveSequences(Match::getRoundId))

.flattenLast(SequenceChain::getConsecutiveSequences)

.filter((team, matches) -> matches.getCount() >= MAX_CONSECUTIVE_MATCHES)The groupBy() building block groups all matches by team,

and for every such pair it creates a SequenceChain instance.

The matches will be put into sequence by round date,

and the round index will be used to identify consecutive sequences and breaks between them.

Imagine the collector putting each match on a number line,

where the number is the round day index of the match.

The SequenceChain then has several useful methods to not only get the list of matches,

but also to get any and all breaks between those matches.

In this case, we are only interested in the sequences of matches themselves,

and we use flattening to convert each to its own tuple.

Finally, if a league requires that a team can play at most 5 consecutive matches (MAX_CONSECUTIVE_MATCHES) in home,

and matches contains 6 matches, then the weight penalty will be 6.

If the number of consecutive matches is 4,

then the sequence is not violating the league requirement, and we can filter it out.

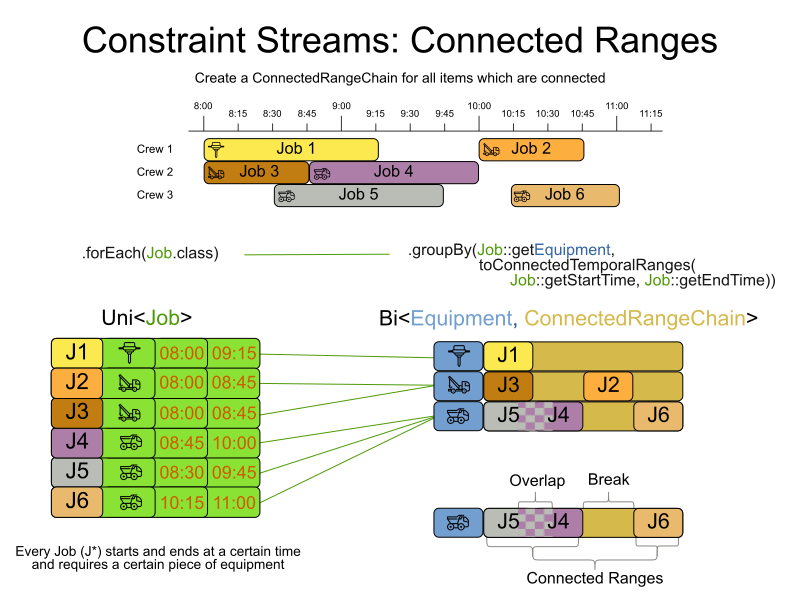

4.5.8. Connected ranges collectors

Certain constraints require tracking properties of connected ranges.

For instance, to ensure equipment allocated to several timeslots across the same connected range is not allocated over its capacity.

This can be achieved using the ConstraintCollectors.toConnectedTemporalRanges(…) or ConstraintCollectors.toConnectedRanges(…) (for non-temporal cases) collectors:

-

Java

Constraint doNotOverAssignEquipment(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Job.class)

.groupBy(Job::getEquipment,

ConstraintCollectors.toConnectedTemporalRanges(

Job::getStartTime,

Job::getEndTime)

)

.flattenLast(ConnectedRangeChain::getConnectedRanges)

.filter((equipment, connectedRange) -> connectedRange.getMaximumOverlap() > equipment.getCapacity())

.penalize(HardSoftScore.ONE_HARD, (equipment, connectedRange) -> connectedRange.getMaximumOverlap() - equipment.getCapacity())

.asConstraint("Concurrent equipment usage over capacity");

}

Let’s take a closer look at the crucial part of this constraint:

-

Java

.groupBy(Job::getEquipment,

ConstraintCollectors.toConnectedTemporalRanges(

Job::getStartTime,

Job::getEndTime

)

)

.flattenLast(ConnectedRangeChain::getConnectedRanges)

.filter((equipment, connectedRange) -> connectedRange.getMaximumOverlap() > equipment.getCapacity())The groupBy() building block groups all jobs by required equipment, and for every such pair it creates a ConnectedRangeChain instance.

Any overlapping jobs will be put into the same ConnectedRange cluster.

The ConnectedRangeChain then has several useful methods to not only get the list of resource usage, but also to get any and all gaps between resource usage.

In this case, we are only interested in the resource usage, and we use flattening to convert each to its own tuple.

Finally, we use mapping to calculate the violation amount for each concurrent usage.

For example, if the equipment has a capacity of 3, and the maximumOverlap of the resource is 5, then violationAmount will be 2.

If the amount is 0, then the equipment is not being used over its capacity and we can filter the tuple out.

In case no temporal data is available, it’s still possible to create connected ranges using the ConstraintCollectors.toConnectedRanges(…) collector.

In this case the start and end of a range needs to be provided as well as a function to calculate the difference between the two.

-

Java

.groupBy(Job::getEquipment,

ConstraintCollectors.toConnectedRanges(

Job::getStart,

Job::getEnd,

(a, b) -> b - a // difference calculator

)

)4.5.9. Load balancing collectors

Certain constraints require resource usage to be balanced across the solution.

For example, employees should have a similar number of shifts.

This can be achieved using the ConstraintCollectors.loadBalance(…) collector.

For more, see Load balancing and fairness.

4.5.10. Conditional collectors

The constraint collector framework enables you to create constraint collectors which will only collect in certain circumstances.

This is achieved using the ConstraintCollectors.conditionally(…) constraint collector.

This collector accepts a predicate, and another collector to which it will delegate if the predicate is true. The following example returns a count of long-running visits with assigned to a given vehicle, where the customer asked for evening delivery:

-

Java

private Constraint visitWithEveningDelivery(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class)

.groupBy(Visit::getVehicle, conditionally(

Visit::isEveningDelivery,

count()

))

.penalize(HardSoftScore.ONE_HARD,

(vehicle, eveningDeliveryCount) -> ...)

.asConstraint("visitWithEveningDelivery");

}This is useful in situations where multiple collectors are used and only some of them need to be restricted.

If all of them needed to be restricted in the same way,

then applying a filter() before the grouping is preferable.

4.5.11. Composing collectors

The constraint collector framework enables you to create complex collectors utilizing simpler ones.

This is achieved using the ConstraintCollectors.compose(…) constraint collector.

This collector accepts 2 to 4 other constraint collectors,

and a function to merge their results into one.

The following example builds an average() constraint collector

using the count constraint collector and sum() constraint collector:

-

Java

<A> UniConstraintCollector<A, ?, Double>

average(ToIntFunction<A> groupValueMapping) {

return compose(count(), sum(groupValueMapping), (count, sum) -> {

if (count == 0) {

return null;

} else {

return sum / (double) count;

}

});

}Similarly, the compose() collector enables you to work around the limitation of Constraint Stream cardinality

and use as many as 4 collectors in your groupBy() statements:

-

Java

UniConstraintCollector<A, ?, Triple<Integer, Integer, Integer>> collector =

compose(count(),

min(),

max(),

(count, min, max) -> Triple.of(count, min, max));Such a composite collector returns a Triple instance which allows you to access

each of the sub collectors individually.

|

Timefold Solver does not provide any |

4.5.12. Adjusting results

In some cases, you may want to adjust the result that a constraint collector produces.

Consider the average() constraint collector:

ConstraintCollectors.average(...)This collector returns double values, which may not be suitable for your use case.

For example, you may want to round the result to the nearest integer.

To do this, you can use the ConstraintCollectors.collectAndThen(…) collector:

-

Java

ConstraintCollectors.collectAndThen(

ConstraintCollectors.average(...),

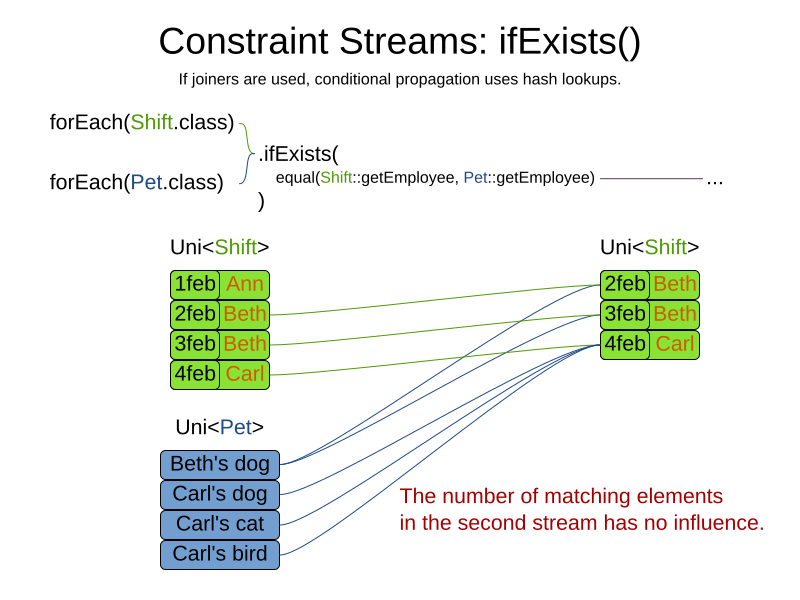

doubleValue -> (int) Math.round(doubleValue))4.6. Conditional propagation

Conditional propagation enables you to exclude constraint matches from the constraint stream based on the presence or absence of some other object.

The following example penalizes vehicles which have at least one visit:

-

Java

private Constraint deployedVehicle(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Vehicle.class)

.ifExists(Visit.class, Joiners.equal(Function.identity(), Visit::getVehicle))

.penalize(HardSoftScore.ONE_SOFT,

vehicle -> ...)

.asConstraint("deployedVehicle");

}Note the use of the ifExists() building block.

On UniConstraintStream, the ifExistsOther() building block is also available which is useful in situations where the

forEach() constraint match type is the same as the ifExists() type.

Conversely, if the ifNotExists() building block is used (as well as the ifNotExistsOther() building block on

UniConstraintStream) you can achieve the opposite effect:

-

Java

private Constraint idleVehicle(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Vehicle.class)

.ifNotExists(Visit.class, Joiners.equal(Function.identity(), Visit::getVehicle))

.penalize(HardSoftScore.ONE_SOFT,

vehicle -> ...)

.asConstraint("idleVehicle");

}Here, only the vehicles without any visits are penalized.

Also note the use of the Joiner class to limit the constraint matches.

For a description of available joiners, see joining.

Conditional propagation operates much like joining,

but it does not increase stream cardinality.

Matches from these building blocks are not available further down the stream.

|

For performance reasons, using conditional propagation with the appropriate |

4.7. Mapping tuples

Mapping enables you to transform each tuple in a constraint stream by applying a mapping function to it. The result of such mapping is another constraint stream of the mapped tuples.

-

Java

private Constraint visits(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class) // UniConstraintStream<Visit>

.map(Visit::getName) // UniConstraintStream<String>

...

}|

In the example above, the mapping function produces duplicate tuples if two different |

Mapping can be used to transform streams of all cardinalities,

and even between cardinalities.

The following example maps a pair of Visit instances to a pair of their names:

-

Java

private Constraint visits(ConstraintFactory constraintFactory) {

return constraintFactory.forEachUniquePair(Visit.class) // BiConstraintStream<Visit, Visit>

.map((visit1, visit2) -> Pair.of(visit1.getName(), visit2.getName())) // UniConstraintStream<Pair<String, String>>

...

}4.7.1. Designing the mapping function

When designing the mapping function, follow these guidelines for optimal performance:

-

Keep the function pure. The mapping function should only depend on its input. That is, given the same input, it always returns the same output.

-

Keep the function bijective. No two input tuples should map to the same output tuple, or to tuples that are equal. Not following this recommendation creates a constraint stream with duplicate tuples, and may force you to use

distinct()later. -

Use immutable data carriers. The tuples returned by the mapping function should be immutable and identified by their contents and nothing else. If two tuples carry objects which equal one another, those two tuples should likewise equal and preferably be the same instance.

4.7.2. Dealing with duplicate tuples using distinct()

As a general rule, tuples in constraint streams are distinct. That is, no two tuples that equal one another. However, certain operations such as tuple mapping may produce constraint streams where that is not true.

If a constraint stream produces duplicate tuples, you can use the distinct() building block

to have the duplicate copies eliminated.

-

Java

private Constraint visits(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Visit.class) // UniConstraintStream<Visit>

.map(Visit::getName) // UniConstraintStream<String>

.distinct() // The same, each name just once.

...

}|

There is a performance cost to |

4.7.3. Expanding tuples

Tuple expansion is a special case of tuple mapping which only increases stream cardinality and can not introduce duplicate tuples. It enables you to add extra facts to each tuple in a constraint stream by applying a mapping function to it. This is useful in situations where an expensive computations needs to be cached for use later in the stream.

In the following example,

the method Talk.prevailingSpeakerUndesiredTimeslotTagCount() internally iterates over collections to find overlapping tags

and returns the number of such tags.

It is expensive and it is called for each Talk in the stream,

possibly being called many thousands of times per second.

Importantly, it is first called to filter out talks that have zero overlap,

and then again to penalize overlap on talks which suffer from it.

-

Java

Constraint speakerUndesiredTimeslotTags(ConstraintFactory factory) {

return factory.forEach(Talk.class)

.filter(talk -> talk.prevailingSpeakerUndesiredTimeslotTagCount() > 0)

.penalize(HardSoftScore.ONE_SOFT, talk -> talk.prevailingSpeakerUndesiredTimeslotTagCount() * talk.getDurationInMinutes())

.asConstraint(SPEAKER_UNDESIRED_TIMESLOT_TAGS);

}We can improve this by using tuple expansion to cache the result of the expensive computation, possibly significantly reducing the number of times it is called.

-

Java

Constraint speakerUndesiredTimeslotTags(ConstraintFactory factory) {

return factory.forEach(Talk.class)

.expand(Talk::prevailingSpeakerUndesiredTimeslotTagCount)

.filter((talk, undesiredTagCount) -> undesiredTagCount > 0)

.penalize(HardSoftScore.ONE_SOFT, (talk, undesiredTagCount) -> undesiredTagCount * talk.getDurationInMinutes())

.asConstraint(SPEAKER_UNDESIRED_TIMESLOT_TAGS);

}Once the tuple for a Talk has been created and passed through the filter,

the expensive computation will not be reevaluated again unless the Talk itself changes.

|

There is a performance cost to |

4.8. Flattening

Flattening enables you to transform any Java Iterable (such as List or Set)

into a set of tuples, which are sent downstream.

(Similar to Java Stream’s flatMap(…))

This is done by applying a mapping function to the final element in the source tuple.

-

Java

private Constraint requiredJobRoles(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Person.class) // UniConstraintStream<Person>

.join(Job.class,

equal(Function.identity(), Job::getAssignee)) // BiConstraintStream<Person, Job>

.flattenLast(Job::getRequiredRoles) // BiConstraintStream<Person, Role>

.filter((person, requiredRole) -> ...)

...

}|

In the example above, the mapping function produces duplicate tuples

if |

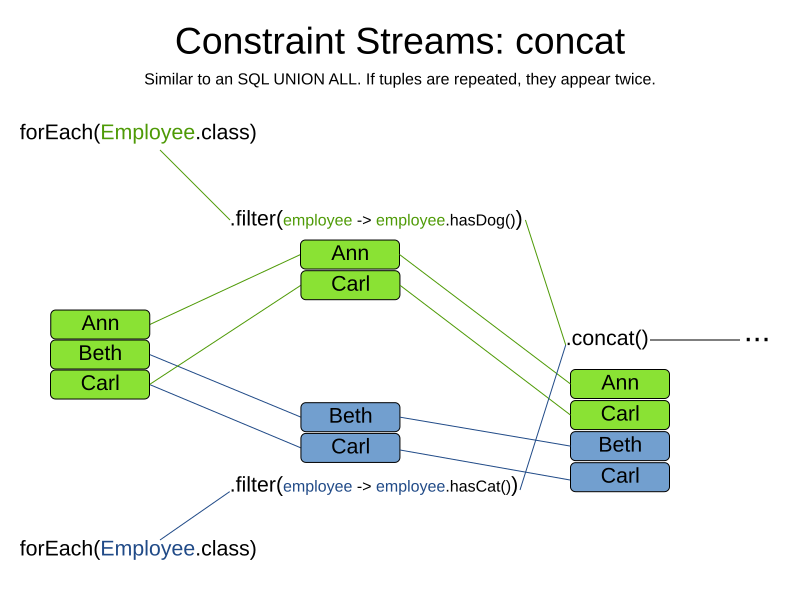

4.9. Concatenation

The concat building block allows you to create a constraint stream containing tuples

of two other constraint streams.

If join acts like a cartesian product of two lists,

concat acts like a concatenation of two lists.

Unlike union of sets, concatenation of lists repeats duplicated elements.

If the two constraint concatenating streams share tuples, which happens eg.

when they come from the same source of data,

the tuples will be repeated downstream.

If this is undesired, use the distinct building block.

For example, to ensure each employee has a minimum number of assigned shifts:

-

Java

private Constraint ensureEachEmployeeHasAtLeastTwoShifts(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Employee.class)

.join(Shift.class, equal(Function.identity(), Shift::getEmployee))

.concat(

constraintFactory.forEach(Employee.class)

.ifNotExists(Shift.class, equal(Function.identity(), Shift::getEmployee))

)

.groupBy((employee, shift) -> employee,

conditionally((employee, shift) -> shift != null,

countBi())

)

.filter((employee, shiftCount) -> shiftCount < employee.minimumAssignedShifts)

.penalize(HardSoftScore.ONE_SOFT, (employee, shiftCount) -> employee.minimumAssignedShifts - shiftCount)

.asConstraint("Minimum number of assigned shifts");

}This correctly counts the number of shifts each Employee has, even when the Employee has no shifts.

|

Complementing a stream shares many of the same use cases as |

Consider the following naive implementation without concat:

-

Java

private Constraint incorrectEnsureEachEmployeeHasAtLeastTwoShifts(ConstraintFactory constraintFactory) {

return constraintFactory.forEach(Employee.class)

.join(Shift.class, equal(Function.identity(), Shift::getEmployee))

.groupBy((employee, shift) -> employee,

countBi())

)

.filter((employee, shiftCount) -> shiftCount < employee.minimumAssignedShifts)

.penalize(HardSoftScore.ONE_SOFT, (employee, shiftCount) -> employee.minimumAssignedShifts - shiftCount)

.asConstraint("Minimum number of assigned shifts (incorrect)");

}An employee with no assigned shifts wouldn’t have been penalized

because no tuples were passed to the groupBy building block.

4.9.1. Padding values

Consider two constraint streams of different cardinalities, for instance:

-

Java

// All employees.

UniConstraintStream<Employee> uniStream = constraintFactory.forEach(Employee.class);

// Employees with a count of their shifts; some employees may have no shifts, therefore are not included.

BiConstraintStream<Employee, Integer> biStream = constraintFactory.forEach(Employee.class)

.join(Shift.class, equal(Function.identity(), Shift::getEmployee))

.groupBy((employee, shift) -> employee, countBi());By default, when concatenating these streams,

the stream of lower cardinality will be padded with null values:

-

Java

// Employees from uniStream will have null as their shift count.

BiConstraintStream<Employee, Integer> concatStream = uniStream.concat(biStream);Instead of using null default,

you can specify a padding value to use when padding the lower cardinality stream:

-

Java

// Employees from uniStream will have a zero as their shift count.

BiConstraintStream<Employee, Integer> concatStream = uniStream.concat(biStream, employee -> 0);4.10. Complementing a stream

The join building block provided by Constraint Streams is technically an inner join.

This means only the matches that are present in both streams are considered.

For example, if you have a stream of Employee instances and a stream of Shift instances,

joining Shift instances with Employee instances will only return shifts that are assigned to an employee.

Employees without shifts will not be considered.

In cases such as load balancing, a similar situation happens where the Shift instances only reference the Employee instances assigned to them.

For a properly balanced solution, you also need to include Employee instances that are not assigned to any shifts.

This is where you can use the complement block as shown in the following example:

-

Java

factory.forEach(Shift.class)

.groupBy(Shift::getEmployee, count())

.complement(Employee.class, employee -> 0)

.groupBy(loadBalance(...))After the groupBy building block,

the stream contains only employees with at least one shift assigned,

and each carries a count of shifts assigned.

The type of the stream is BiConstraintStream<Employee, Integer>.

To add all the Employee instances that do not have any shifts assigned,

the complement building block is used.

It looks at all the Employee instances already in the stream,

and adds all known Employee instances that are not present to the stream.

For each of them, it assigns a value of 0 as the number of shifts assigned to that employee.

The type of the stream is still BiConstraintStream<Employee, Integer>.

This stream now contains all Employee instances, both with and without shifts assigned,

and can be passed to the load balancing constraint collector,

which will compute the unfairness metric.

4.11. Precomputing a stream

When a stream depends only on properties of problem facts or entities,

and not on any planning variables (genuine or shadow),

that stream can be precomputed to improve performance.

Consider the following No overlapping shifts constraint:

-

Java

Constraint noOverlappingShifts(ConstraintFactory constraintFactory) {

return constraintFactory.forEachUniquePair(Shift.class,

Joiners.overlapping(Shift::getStart, Shift::getEnd),

Joiners.equal(Shift::getEmployee))

.penalize(HardSoftScore.ONE_HARD, (shift1, shift2) -> shift1.getOverlap(shift2))

.asConstraint("No overlapping shifts");

}Whenever any variable changes, this constraint will recalculate what shifts overlap and their overlap amount.

Since start and end are not variables (genuine or shadow) and getOverlap() does not depend on any variables,

some parts of the constraint can be precomputed to improve performance:

-

Java

TriConstraintStream<Shift, Shift, Integer> overlappingShifts(PrecomputeFactory precomputeFactory) {

return precomputeFactory.forEachUnfilteredUniquePair(Shift.class,

Joiners.overlapping(Shift::getStart, Shift::getEnd))

.expand((shift1, shift2) -> shift1.getOverlap(shift2));

}

Constraint noOverlappingShifts(ConstraintFactory constraintFactory) {

return constraintFactory.precompute(this::overlappingShifts)

.filter((shift1, shift2, overlap) ->

shift1.getEmployee() != null &&

shift1.getEmployee() == shift2.getEmployee())

.penalize(HardSoftScore.ONE_HARD, (shift1, shift2, overlap) -> overlap)

.asConstraint("No overlapping shifts");

}This will precompute and store the overlapping shifts, and their amount of overlap. When any of the overlapping shifts' variables change, only the filter will be re-evaluated. That filter also won’t be evaluated for non-overlapping shifts, since they were already eliminated by the precomputed stream.

4.11.1. Precomputing and planning variables

Precomputed streams are unfiltered and will include both unassigned and inconsistent planning entities.

If only assigned entities are desired,

add a filter after the precompute call to check if the entities' variables are assigned.

|

A score corruption will occur if a precomputed stream depends on variables (genuine or shadow).

For example, if

|

4.11.2. When to use precomputing

Constraint streams are already very efficient, so precomputing them may result in little change. It can be useful in cases where the constraint stream needs to execute expensive operations, such as filters which look up data in large collections or which compute complex calculations. It also helps with large joins where the join condition doesn’t depend on any variables.

However, only the biggest of datasets typically struggle with these issues. Always measure move evaluation speed before and after implementing precomputing to determine if using it brings benefits for your use case.

5. Testing a constraint stream

We recommend that you test your constraints to ensure that they behave as expected.

Constraint streams include the Constraint Verifier unit testing harness.

To use it, first add a test scoped dependency to the timefold-solver-test JAR.

5.1. Testing constraints in isolation

Consider the following constraint stream:

-

Java

Constraint vehicleCapacity(ConstraintFactory factory) {

return factory.forEach(Vehicle.class)

.filter(vehicle -> vehicle.getTotalDemand() > vehicle.getCapacity())

.penalizeLong(HardSoftLongScore.ONE_HARD,

vehicle -> vehicle.getTotalDemand() - vehicle.getCapacity())

.asConstraint(VEHICLE_CAPACITY);

}The following example uses the Constraint Verifier API to create a simple unit test for the preceding constraint stream:

-

Java

private ConstraintVerifier<VehicleRoutingConstraintProvider, VehicleRoutePlan> constraintVerifier

= ConstraintVerifier.build(new VehicleRoutingConstraintProvider(), VehicleRoutePlan.class, Vehicle.class, Visit.class);

@Test

void vehicleCapacity() {

LocalDateTime tomorrow_07_00 = LocalDateTime.of(TOMORROW, LocalTime.of(7, 0));

LocalDateTime tomorrow_08_00 = LocalDateTime.of(TOMORROW, LocalTime.of(8, 0));

LocalDateTime tomorrow_10_00 = LocalDateTime.of(TOMORROW, LocalTime.of(10, 0));

Vehicle vehicleA = new Vehicle("1", 100, LOCATION_1, tomorrow_07_00);

Visit visit1 = new Visit("2", "John", LOCATION_2, 80, tomorrow_08_00, tomorrow_10_00, Duration.ofMinutes(30L));

vehicleA.getVisits().add(visit1);

Visit visit2 = new Visit("3", "Paul", LOCATION_3, 40, tomorrow_08_00, tomorrow_10_00, Duration.ofMinutes(30L));

vehicleA.getVisits().add(visit2);

constraintVerifier.verifyThat(VehicleRoutingConstraintProvider::vehicleCapacity)

.given(vehicleA, visit1, visit2)

.penalizesBy(20);

}This test ensures that, if a vehicle capacity is exceeded, that the constraint penalizes accordingly.

The following line creates a shared ConstraintVerifier instance and initializes the instance with the

VehicleRoutingConstraintProvider:

-

Java

private ConstraintVerifier<VehicleRoutingConstraintProvider, VehicleRoutePlan> constraintVerifier

= ConstraintVerifier.build(new VehicleRoutingConstraintProvider(), VehicleRoutePlan.class, Vehicle.class, Visit.class);The @Test annotation indicates that the method is a unit test in a testing framework of your choice.

Constraint Verifier works with testing frameworks popular in your ecosystem, including JUnit.

The first part of the test prepares the test data. In this case, the test data includes a vehicle and a visit:

-

Java

Vehicle vehicleA = new Vehicle("1", 100, LOCATION_1, tomorrow_07_00);

Visit visit1 = new Visit("2", "John", LOCATION_2, 80, tomorrow_08_00, tomorrow_10_00, Duration.ofMinutes(30L));

vehicleA.getVisits().add(visit1);

Visit visit2 = new Visit("3", "Paul", LOCATION_3, 40, tomorrow_08_00, tomorrow_10_00, Duration.ofMinutes(30L));

vehicleA.getVisits().add(visit2);Further down, the following code tests the constraint:

-

Java

constraintVerifier.verifyThat(VehicleRoutingConstraintProvider::vehicleCapacity)

.given(vehicleA, visit1, visit2)

.penalizesBy(20);The verifyThat(…) call is used to specify a method on the VehicleRoutingConstraintProvider class which is under test.

This method must be visible to the test class, which the Java compiler enforces.

The given(…) call is used to enumerate all the facts that the constraint stream operates on.

In this case, the given(…) call takes the vehicleA, visit1 and visit2 instances previously created.

Alternatively, you can use a givenSolution(…) method here and provide a planning solution instead.

It’s important to understand

that calling givenSolution(…) does not update any shadow variables.

This is the default behavior and can be modified by invoking settingAllShadowVariables().

-

Java

constraintVerifier.verifyThat(VehicleRoutingConstraintProvider::vehicleCapacity)

.givenSolution(solution)

.settingAllShadowVariables()

.penalizesBy(20);|

You can also use methods |

Finally, the penalizesBy(…) call completes the test,

making sure that the given constraint under the given conditions penalizes by the 20.

This number is a product of multiplying the match weight, as defined in the constraint stream, by the number of matches.

Alternatively, you can use a rewardsWith(…) call to check for rewards instead of penalties.

The method to use here depends on whether the constraint stream in question is terminated with a penalize or a

reward building block.

|

When executing |

5.2. Testing all constraints together

In addition to testing individual constraints, you can test the entire ConstraintProvider instance.

Consider the following test:

-

Java

@Test

public void givenFactsMultipleConstraints() {

LocalDateTime tomorrow_07_00 = LocalDateTime.of(TOMORROW, LocalTime.of(7, 0));

LocalDateTime tomorrow_08_00 = LocalDateTime.of(TOMORROW, LocalTime.of(8, 0));

LocalDateTime tomorrow_10_00 = LocalDateTime.of(TOMORROW, LocalTime.of(10, 0));

Vehicle vehicleA = new Vehicle("1", 100, LOCATION_1, tomorrow_07_00);

Visit visit1 = new Visit("2", "John", LOCATION_2, 80, tomorrow_08_00, tomorrow_10_00, Duration.ofMinutes(30L));

vehicleA.getVisits().add(visit1);

Visit visit2 = new Visit("3", "Paul", LOCATION_3, 40, tomorrow_08_00, tomorrow_10_00, Duration.ofMinutes(30L));

vehicleA.getVisits().add(visit2);

constraintVerifier.verifyThat()

.given(vehicleA, visit1, visit2)

.scores(HardSoftScore.ofSoft(20));

}There are only two notable differences to the previous example.

-

The

verifyThat()call takes no argument here, signifying that the entireConstraintProviderinstance is being tested. (But for the sake of simplicity, we will assume it still only contains the one constraint we’ve seen previously.) -

Instead of either a

penalizesBy()orrewardsWith()call, thescores(…)method is used. This runs theConstraintProvideron the given facts and returns a sum ofScores of all constraint matches resulting from the given facts.

Using this method, you ensure that the constraint provider does not miss any constraints and that the scoring function

remains consistent as your code base evolves.

It is therefore necessary for the given(…) method to list all planning entities and problem facts,

or provide the entire planning solution instead.

To test all constraints while updating all shadow variables,

call giveSolution(…) and settingAllShadowVariables().

This approach ensures that the shadow variable listeners are triggered,

and the constraints that depend on the related variables are evaluated accordingly.

-

Java

constraintVerifier.verifyThat()

.givenSolution(solution)

.settingAllShadowVariables()

.scores(HardSoftScore.ofSoft(20));|

|

6. Other types of score calculation

Timefold Solver supports two other types of score calculation.

6.1. Easy score calculation

An easy way to implement your score calculation as imperative code:

-

Advantages:

-

No learning curve; use the language you’re already familiar with.

-

Opportunity to delegate score calculation to an existing code base or legacy system.

-

Useful for prototyping.

-

-

Disadvantages:

-

Slower, typically not suitable for production.

-

Does not scale because there is no incremental score calculation.

-

Can not explain the score.

-

To start using an Easy score calculator, implement the one method of the interface EasyScoreCalculator:

-

Java

public interface EasyScoreCalculator<Solution_, Score_ extends Score<Score_>> {

Score_ calculateScore(Solution_ solution);

}Configure it in the solver configuration:

<scoreDirectorFactory>

<easyScoreCalculatorClass>...MyEasyScoreCalculator</easyScoreCalculatorClass>

</scoreDirectorFactory>To configure values of an EasyScoreCalculator dynamically in the solver configuration

(so the Benchmarker can tweak those parameters),

add the easyScoreCalculatorCustomProperties element and use custom properties:

<scoreDirectorFactory>

<easyScoreCalculatorClass>...MyEasyScoreCalculator</easyScoreCalculatorClass>

<easyScoreCalculatorCustomProperties>

<property name="myCacheSize" value="1000" />

</easyScoreCalculatorCustomProperties>

</scoreDirectorFactory>6.2. Incremental score calculation

A way to implement your score calculation incrementally in a supported programming language.

-

Advantages:

-

Very fast and scalable; currently the fastest if implemented correctly.

-

-

Disadvantages:

-

Hard to write

-

A scalable implementation heavily uses maps, indexes etc. You have to learn, design, write and improve all these performance optimizations yourself. Why not have constraint streams do the hard work for you?

-

-

Hard to read

-

Regular score constraint changes can lead to high maintenance costs.

-

-

To start using an Incremental score calculator,

implement all the methods of the interface IncrementalScoreCalculator:

-

Java

public interface IncrementalScoreCalculator<Solution_, Score_ extends Score<Score_>> {

void resetWorkingSolution(Solution_ workingSolution);

void beforeEntityAdded(Object entity);

void afterEntityAdded(Object entity);

void beforeVariableChanged(Object entity, String variableName);

void afterVariableChanged(Object entity, String variableName);

void beforeEntityRemoved(Object entity);

void afterEntityRemoved(Object entity);

Score_ calculateScore();

}

Configure it in the solver configuration:

<scoreDirectorFactory>

<incrementalScoreCalculatorClass>...MyIncrementalScoreCalculator</incrementalScoreCalculatorClass>

</scoreDirectorFactory>|

Incremental score calculator code can be difficult to write and to review.

Assert its correctness by using an |

To configure values of an IncrementalScoreCalculator dynamically in the solver configuration

(so the Benchmarker can tweak those parameters),

add the incrementalScoreCalculatorCustomProperties element and use custom properties:

<scoreDirectorFactory>

<incrementalScoreCalculatorClass>...MyIncrementalScoreCalculator</incrementalScoreCalculatorClass>

<incrementalScoreCalculatorCustomProperties>

<property name="myCacheSize" value="1000"/>

</incrementalScoreCalculatorCustomProperties>

</scoreDirectorFactory>6.2.1. ConstraintMatchAwareIncrementalScoreCalculator

To add support for score analysis,

optionally also implement the ConstraintMatchAwareIncrementalScoreCalculator interface:

public interface ConstraintMatchAwareIncrementalScoreCalculator<Solution_, Score_ extends Score<Score_>> {

void resetWorkingSolution(Solution_ workingSolution, boolean constraintMatchEnabled);

Collection<ConstraintMatchTotal<Score_>> getConstraintMatchTotals();

Map<Object, Indictment<Score_>> getIndictmentMap();

}Typically it means creating one ConstraintMatchTotal per constraint type

and calling addConstraintMatch() for each constraint match.

getConstraintMatchTotals() code often duplicates some logic of the normal IncrementalScoreCalculator methods.

Constraint Streams doesn’t have this disadvantage, because they are aware of constraint matches by design,

without any extra domain-specific code.